Content-Heavy Dagstuhl Blog for Conversational Agent as Trustworthy Autonomous System by Ricardo Usbeck

14 October 2021, by Ricardo Usbeck

Photo: dagstuhl.de, Public Domain

We already announced that Ricardo took part in the Dagstuhl Seminar (https://www.inf.uni-hamburg.de/en/inst/ab/sems/news/2021/2021dagstuhl.html). Here, we want to give you more insight into what happened.

The main questions were: what is trust, and how can we measure it? More than 40 international experts shared their ideas around Conversational Agents and how we can unpack the dimensions of trust. Other topics involved the evolution of trust during multimodal interaction. Which factors build trust faster? Is trust measurable at all? We recognized that people come with different priors and have different perceptions of the trustworthiness of things.

Break-Out Groups

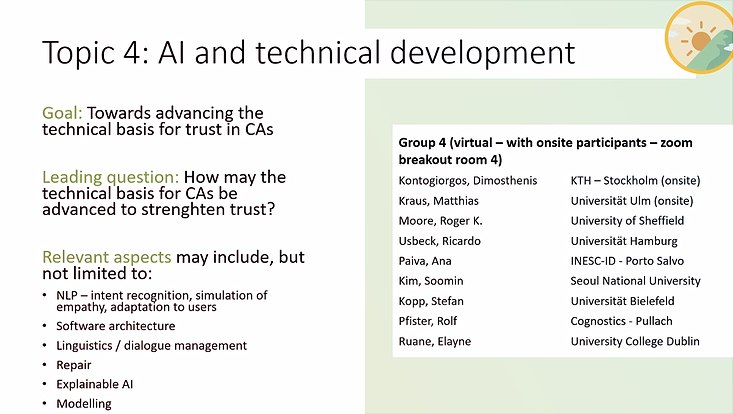

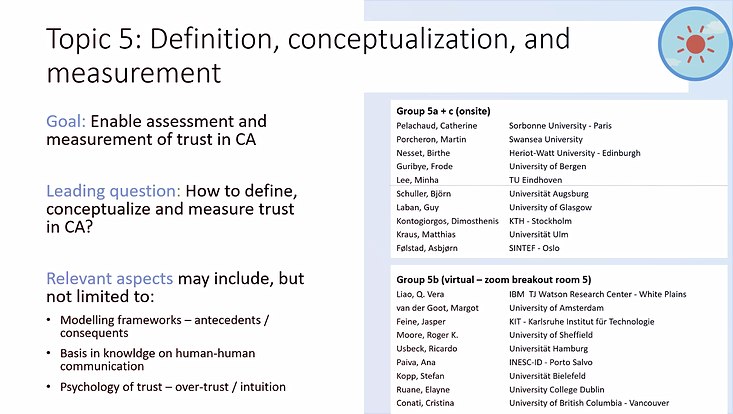

Thus, next to more than 20 highly interesting talks (see below), Ricardo also took part in two break-out groups (see Figures), which met throughout the whole week. One (Topic 4) discussed the technical developments needed to strengthen trust in Conversational Agents, while the other (Topic 5) was about definitions, concepts, and measurements of trust in agents. The outcomes are two enormous reports and graphics, which will be included in the Dagstuhl report.

Talks

The talks lasted between 15 and 45 minutes and covered such a large range of topics, from love robots to embodied agents that can lie to us, that it was an honor and a privilege just to listen. Here is a very short summary of the talks that were most memorable to Ricardo. Note that he missed some talks.

Stefan Schaffer (DFKI) - To trust or not to trust? What is the use case?

Stefan presented several real-life use cases. The first interesting use case was on speech assistants for a visually impaired housekeeper in an elderly care home. The caretaker is responsible for medications and detergents. A trustworthy system helps the caretaker to stay in the job longer, and even coworkers want to have the system. Another use case showed how a system helps to advise travel agencies on handling agitated soccer fans by transferring reliable information to a central instance.

Soomin Kim (Seoul National University) - Designing Conversational Agents for Dyadic and Group Interactions

Soomin presents three different projects. She highlights that bots can increase the engagement of groups and individuals in surveys and debates as compared to written or standard forms. Machine agents are better than having no moderator at all. But the best result could be achieved if humans and machines work together.

Dimosthenis Kontogiorgos (KTH Royal Institute of Technology) - Measuring Understanding in Interactions with Embodied Conversational Interfaces: Theory, Studies and Computation

Dimosthenis showed very interesting experiments on conversational interactions in which three people dealt with inaccurate instructions while being observed with helmet cameras. Speakers want to give complex instructions, while listeners prefer to receive instructions in smaller bits. This is an important step toward understanding how machines should instruct humans.

Jonathan Grudin (Microsoft) - Unanticipated Challenges

Jonathan discusses that a definition of trust is hard to come up with. We also saw that in our breakout group. However, discussing how to achieve trust is easier. Thus, he introduces 9 categories of trust species that could apply to Conversational Agents. That is, Jonathan talks about companion killers, i.e. things that agents do that lead to users breaking up with a virtual agent or companion. Next to GDPR, understanding and memorizing are the most important skills. So, when did we start to learn from online companions? Probably since the start of Tay and Zo, two famously failed bots, and the bad behavior that they learned from their community. Kills happen in human-in-the-loop systems whenever humans experience unexpected behavior.

Zhou You (Columbia University – New York) - Self-disclosure

Deep Learning-based recognition of self-disclosure shows that high levels of self-disclosure lead to higher levels of cognitive/emotional disclosure and longer, more detailed answers. Conversations with higher bot disclosure levels are more engaging and perceived as warmer. Note, however, that disclosure does not mean “I am a bot”; rather, the bot shares facts, emotions, and opinions as a human would, the opposite of what a purely factual chatbot would do. More information on the interesting topic of self-disclosure can be found in her paper https://arxiv.org/abs/2106.01666. This talk certainly hit some sweet spots, as the discussion went on for hours and days in a positive way.

Oliver Bendel (University of Applied Sciences and Arts Northwestern Switzerland) - Chatbots and Voice Assistants from the Perspective of Machine Ethics and Social Robotics

Oliver presented various topics on machine love and animal-friendly machines, but also on social machines which are not social and ethical machines which are not ethical. One of the main insights was that chatbots do not care about users and thus are inadequate in several dimensions. To prove his point, he created immoral machines (e.g. “Liebot”, a bot that always lies) and moral machines (e.g. “Goodbot”, a bot that always tells the truth) to drive the field of machine ethics forward. His latest machine, the “Bestbot”, combines the idea of “Goodbot” with the technical design of “Liebot” and some technical extensions. Face recognition is used to detect users' emotions and respond to them. The most interesting insight is: “There are bots which should never leave the lab”. Find out more in his blog https://www.maschinenethik.net/

Guy Laban (University of Glasgow) - Establishing long-term relationships with conversational agents - lessons from prolonged interactions with social interactions

Even during Covid, Guy was able to put social robots in the homes of participants (n=40) via Zoom. While interactions were artificial and superficial in the beginning, people started warming up after some time in this crisis. They then had interactions every two or three days. The sense of agency went up with experience. That is, people talked to the bot, an embodied chatbot, more and longer. More sessions led to more comforting responses.

David C. Kellermann (UNSW Sydney) - Question Bot for Microsoft Teams

David had the best presentation. He called in from Sydney, where he teaches huge engineering classes from home. His team developed a bot integrated into MS Teams to help students navigate course requirements, schedules, assignments, events, and other frequently asked questions. After just some training of the bot, his 30+ teaching assistants felt a large portion of the communication shifting from them to the bot. The source code can be found in his repository: https://github.com/unsw-edu-au/QBot/blob/master/Documentation/demo.md

Michelle Zhou (Juji, Inc.) - Democratizing Conversational AI: Challenges and Opportunities of No-Code, Reusable AIBuld

Michelle gave us a tour through the no-code environment https://juji.ai/login. One major takeaway from her talk is that good conversation designs need good guidelines for laypersons, especially for corner cases such as handling relevant but “null” inputs, arbitrary interruptions, and maintaining context. Thus, it is important to interpret incomplete input in context and do some chatbot profiling. You can read more about this in her paper https://aclanthology.org/2020.cl-1.2.pdf.

Cosmin Munteanu (University of Toronto) - Designing Inclusive Conversational Agents that Older Adults Can Trust

When designing agents for older adults, it is important to cater for an aging society. We cannot ignore users’ mental models, since that would lead to a lack of trust in a conversation system. Older adults often embrace virtual agents if they fit their mental model and the virtual agent is “anthropomorphic enough”.

Benjamin Cowan (University College Dublin) - In human-likeness we trust? The implications of human-like design on partner models and user behavior

Benjamin spoke about speech recognition performance and how voice influences our perception of an agent's trustworthiness. He also noted that there is still something like social embarrassment when agents are used in public. He highlighted that Roger Moore’s work on appropriate voices influenced a lot of his work. Finally, and in general, people mistrust agents with biased opinions, but agents need a persona scaffold to be trusted.

Of course, there were many more talks, and the above selection is subjective and limited by Ricardo's attention span. Please have a look at https://www.dagstuhl.de/de/programm/kalender/semhp/?semnr=21381 for more information.

Summary Session and Networking

On Friday, in the closing session, we revisited all break-out groups and their work. Heavy discussions ensued on repair. While most repair is currently simply conversational, the repair method chosen and errors committed within the repair can either not affect trust at all or lead to a break in trust. That is, even repair is not always useful or desirable. One thing all agreed on is that agents should have a memory, since people have one. Otherwise, trust erodes. In general, many issues around trust are still open. Thus, we at SEMS are offering you the opportunity to work on this topic in a thesis. Contact us!

And stay tuned for the full Dagstuhl Report.