Personality Perception via Zoom

Context

Zoom meetings have become increasingly popular, and almost unavoidable, since the COVID pandemic. The platform enables quick and easy communication between people in different corners of the globe. However, virtual communication still suffers from a drawback: participants cannot fully interpret their interaction partner’s behaviour. Studies have shown that we use non-verbal and paraverbal behavioural cues to form an impression of our interaction partners. This project aims to bridge this gap by attempting to automatically predict individuals’ personality scores from their behavioural cues in Zoom interactions.

Aim of the Project

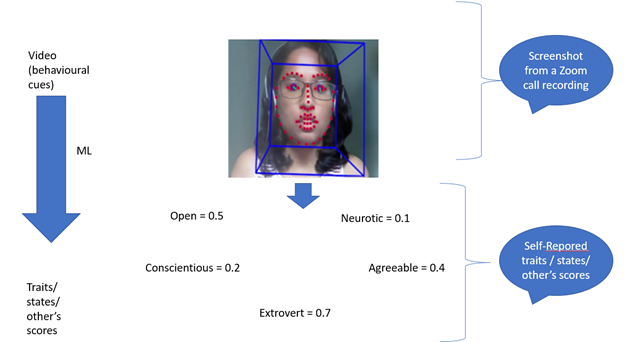

This study explores the association between behavioural attributes (non-verbal and paraverbal) in a dyadic online interaction and psychological attributes (traits or states) in self- and other-perceptions. Specifically, we apply computer vision (CV) and machine learning (ML) approaches to the automated analysis of behaviour and investigate how the results relate to participants’ personality traits and states, as reported by the participants themselves and by their friends and family. We investigate whether automatic analysis of participants’ non-verbal and paraverbal cues in video recordings of a dyadic online interaction allows prediction of participants’ self-reported personality traits and states. The computer vision analyses focus on hand and upper-body movements as well as facial expressions.

Project Approach

We measure the following trait dimensions in our study: the Big Five (OCEAN), public and private self-awareness, and narcissism. The study starts with participants filling out a self-report questionnaire, the scores of which will be used to train an ML model. Participants then take part in an online Zoom session in which they perform several tasks following instructions from our experimenters, and during which their video is recorded. CV methods are then applied to these recordings to extract facial, body-pose and paraverbal (voice-related) features.
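
As a rough illustration of how extracted features might be related to questionnaire scores, the sketch below averages frame-level features per participant and computes a Pearson correlation with a trait score. It is a minimal sketch and not the project's actual pipeline: the feature names (`smile_intensity`, `hand_movement`), the participant IDs, and all numbers are made up for illustration, and a simple correlation stands in for whatever ML model the study trains.

```python
from math import sqrt


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


def aggregate_frames(frames):
    """Average each feature over all frames of one participant's recording."""
    names = frames[0].keys()
    return {name: mean([frame[name] for frame in frames]) for name in names}


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


# Hypothetical frame-level features per participant (invented values).
recordings = {
    "p1": [{"smile_intensity": 0.2, "hand_movement": 0.1},
           {"smile_intensity": 0.4, "hand_movement": 0.3}],
    "p2": [{"smile_intensity": 0.6, "hand_movement": 0.2},
           {"smile_intensity": 0.8, "hand_movement": 0.4}],
    "p3": [{"smile_intensity": 0.5, "hand_movement": 0.6},
           {"smile_intensity": 0.3, "hand_movement": 0.8}],
}

# Hypothetical self-reported extraversion scores (invented values).
extraversion = {"p1": 2.0, "p2": 4.5, "p3": 3.0}

participants = sorted(recordings)
features = {p: aggregate_frames(recordings[p]) for p in participants}

smiles = [features[p]["smile_intensity"] for p in participants]
scores = [extraversion[p] for p in participants]
r = pearson(smiles, scores)
print(f"correlation between smile intensity and extraversion: {r:.2f}")
```

With real data, the same per-participant feature table could be passed to a regression model instead of a single correlation; three invented participants are far too few to draw any conclusion.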

Project Partners

https://www.inf.uni-hamburg.de/en/inst/ab/hci.html

https://www.psy.uni-hamburg.de/en/arbeitsbereiche/sozialpsychologie.html

Contact

Funding

This project has been funded by grants from the DFG, BMBF, and BMWi. This work was supported by a grant from the Landesforschungsförderung of the City of Hamburg to Juliane Degner and Frank Steinicke as part of the Research Group Mechanisms of Change in Dynamic Social Interactions, LFF-FV79.