Virtual Group Meetings

Context

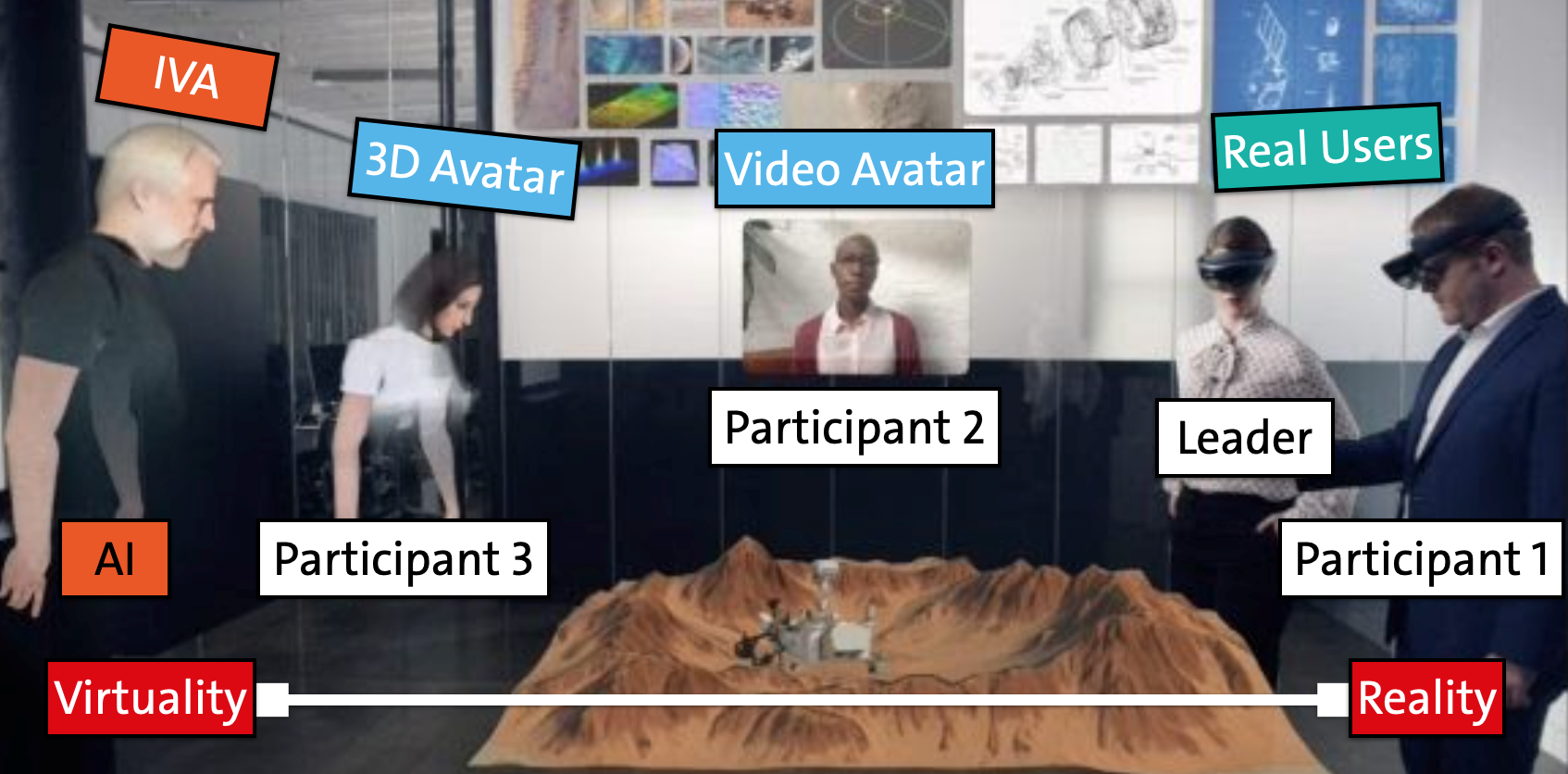

The number of virtual meetings on standard video conferencing platforms such as Zoom, Microsoft Teams, or Google Meet has gradually increased over the last decade and rose sharply from 2020 onward, when COVID-19 emerged. Since then, virtual group meetings have influenced the work and everyday lives of millions, if not billions, of people. For instance, the daily users of the video conferencing platform Zoom increased from 10 million in December 2019 to 300 million in April 2020. As a consequence, companies and universities all over the world had to adjust their established workflows within a short period and come up with new approaches to ensure the continuation of essential communication and collaboration. Video conferences offer a simple but effective way to meet and communicate with others via webcam, but they only approximate face-to-face (F2F) communication. Because non-verbal behavioral cues such as full-body language, which carry important social signals, are limited or missing, the perception of affective states (such as emotions, moods, and sentiments) and of the personalities of participants or the entire group is often limited in virtual meetings. This hampers intragroup communication and cooperation. Immersive extended reality (XR) meetings, often discussed in the context of the “Metaverse” or “Spatial Computing”, have enormous potential for alleviating the shortcomings of traditional 2D virtual group meetings and for enabling more natural forms of remote collaboration. Embodied avatars can provide novel meeting experiences with high spatial, co-, and social presence.
As illustrated in the Figure below, XR technology can increase the level of immersion in a virtual meeting, for example, by adding 3D displays and spatial audio to a conventional low-immersion video conference, by enabling hybrid immersive meetings with realistic virtual humanoid representations (e.g., 2D or 3D avatars) in augmented reality (AR) or augmented virtuality (AV), or by offering fully immersive virtual reality (VR) meetings.

Advances in artificial intelligence (AI) can further enhance the immersive meeting experience, for example, with background blur or replacements, noise suppression, or meeting bots for automatic translations and transcriptions. The rise of AI/XR technologies also leads to new forms of hybrid group meetings.

As illustrated in the Figure above, these new forms include (i) hybrid immersive meetings, in which attendees use different technological means to participate (e.g., via a 2D video avatar, such as participant 2 using a regular personal computer with a web camera, or via a 3D avatar, such as participant 3 using an immersive XR HMD), (ii) hybrid team meetings, which contain different constellations of meeting participants (e.g., humans represented as 2D/3D avatars together with AI-generated and AI-controlled intelligent virtual agents (IVAs) as anthropomorphic representations of a meeting bot), and (iii) hybrid spatial meetings with different spatial distributions of co-located (such as participant 1 and the leader) and remote meeting participants. In immersive XR meetings, users can interact with other group members in a shared 3D space via virtual avatars. However, such anthropomorphic virtual representations can also be used to represent meeting bots via so-called intelligent virtual agents (IVAs).

Virtual Group Meeting Research Infrastructure

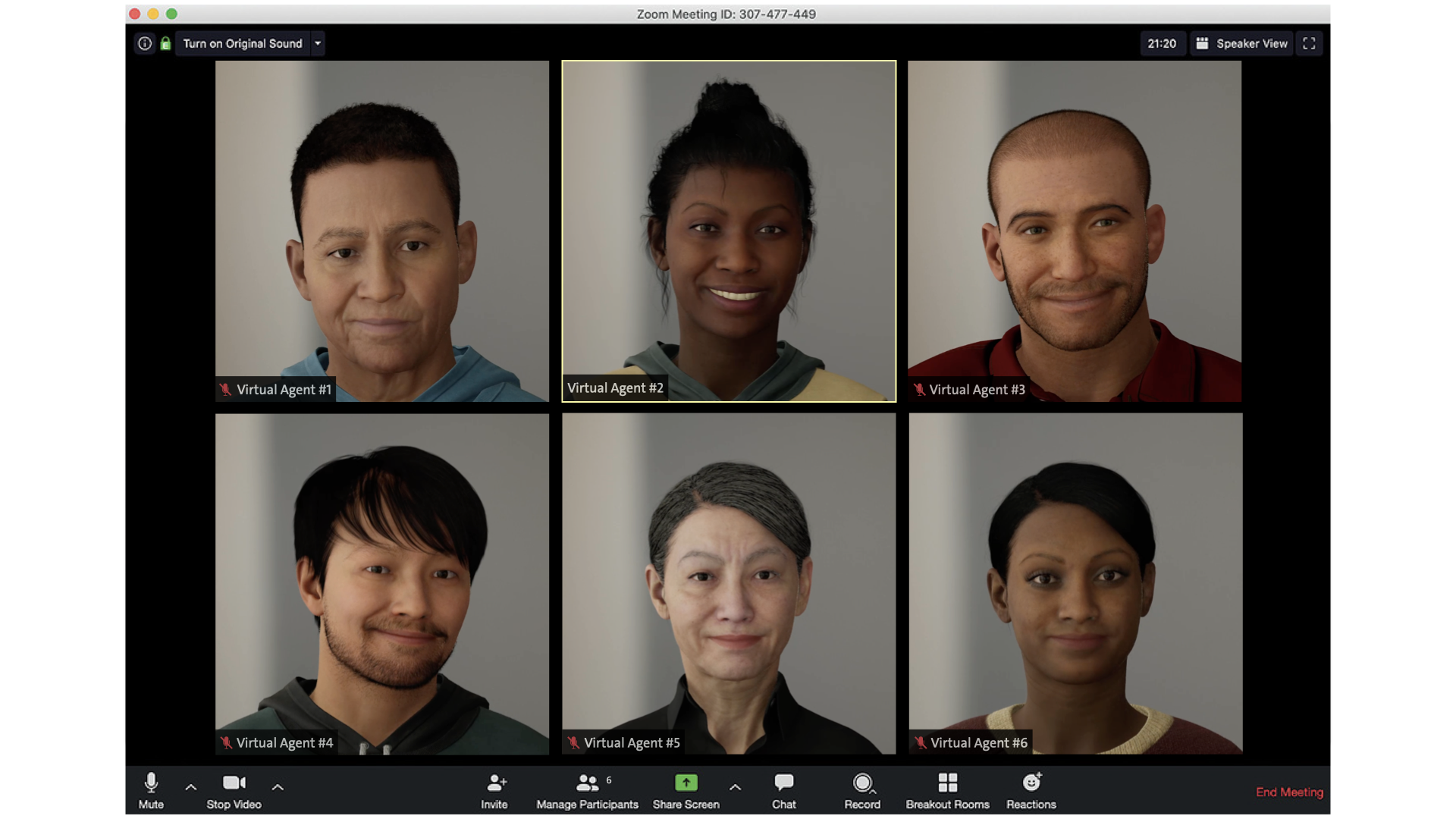

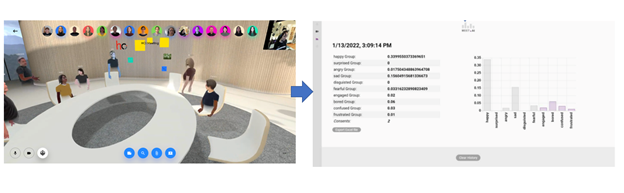

To study different forms of virtual group meetings, we have implemented a modular, flexible software and hardware infrastructure, which enables us to experiment with different meeting formats. For example, these meetings can take place in Zoom with intelligent virtual agents or in 3D environments with embodied agents. The following Figure shows some example meeting formats that we have implemented in the past:

To facilitate collecting our own datasets, we have developed a modular video conferencing system tailored for conducting user studies and detailed analyses. The system addresses the limitations of existing platforms by allowing moderators to control which streams are sent to specific participants, thereby enabling a wide range of experimental setups. Using our own system ensures both flexibility and robustness in handling audio and video streams. We provide code and instructions on GitHub.
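The moderator-controlled stream selection described above can be pictured as a small routing table. The following Python sketch is purely illustrative: the class `StreamRouter`, its methods, and the participant names are hypothetical and are not taken from the project's code base.

```python
from dataclasses import dataclass, field


@dataclass
class StreamRouter:
    """Hypothetical routing table; names are illustrative, not the project's API."""

    participants: set = field(default_factory=set)
    # (sender, receiver) pairs that the moderator has switched off.
    blocked: set = field(default_factory=set)

    def join(self, name):
        """Register a participant in the meeting."""
        self.participants.add(name)

    def block(self, sender, receiver):
        """Stop forwarding the sender's stream to one specific receiver."""
        self.blocked.add((sender, receiver))

    def unblock(self, sender, receiver):
        """Resume forwarding the sender's stream to that receiver."""
        self.blocked.discard((sender, receiver))

    def receivers_of(self, sender):
        """Return, in sorted order, everyone who currently receives the sender's stream."""
        return sorted(
            p for p in self.participants
            if p != sender and (sender, p) not in self.blocked
        )


router = StreamRouter()
for name in ("leader", "participant_1", "participant_2"):
    router.join(name)

# Assumed experimental condition: participant_1 does not receive participant_2.
router.block("participant_2", "participant_1")
print(router.receivers_of("participant_2"))
print(router.receivers_of("participant_1"))
```

Keeping the routing decision in one place, separate from the media transport, is one possible way to let a moderator change conditions during a study without touching the audio and video pipeline.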

When we collect our own datasets, we can also pose user-experience and psychology-related questions to the study participants, which in turn improves the reliability of our results. Data collection is therefore our primary goal, after which we plan to work on the analytics and the other aspects mentioned above. As in previous projects, we aim to provide the new datasets on our sustainable research platform.

Audiovisual Analyses of Virtual Group Meetings

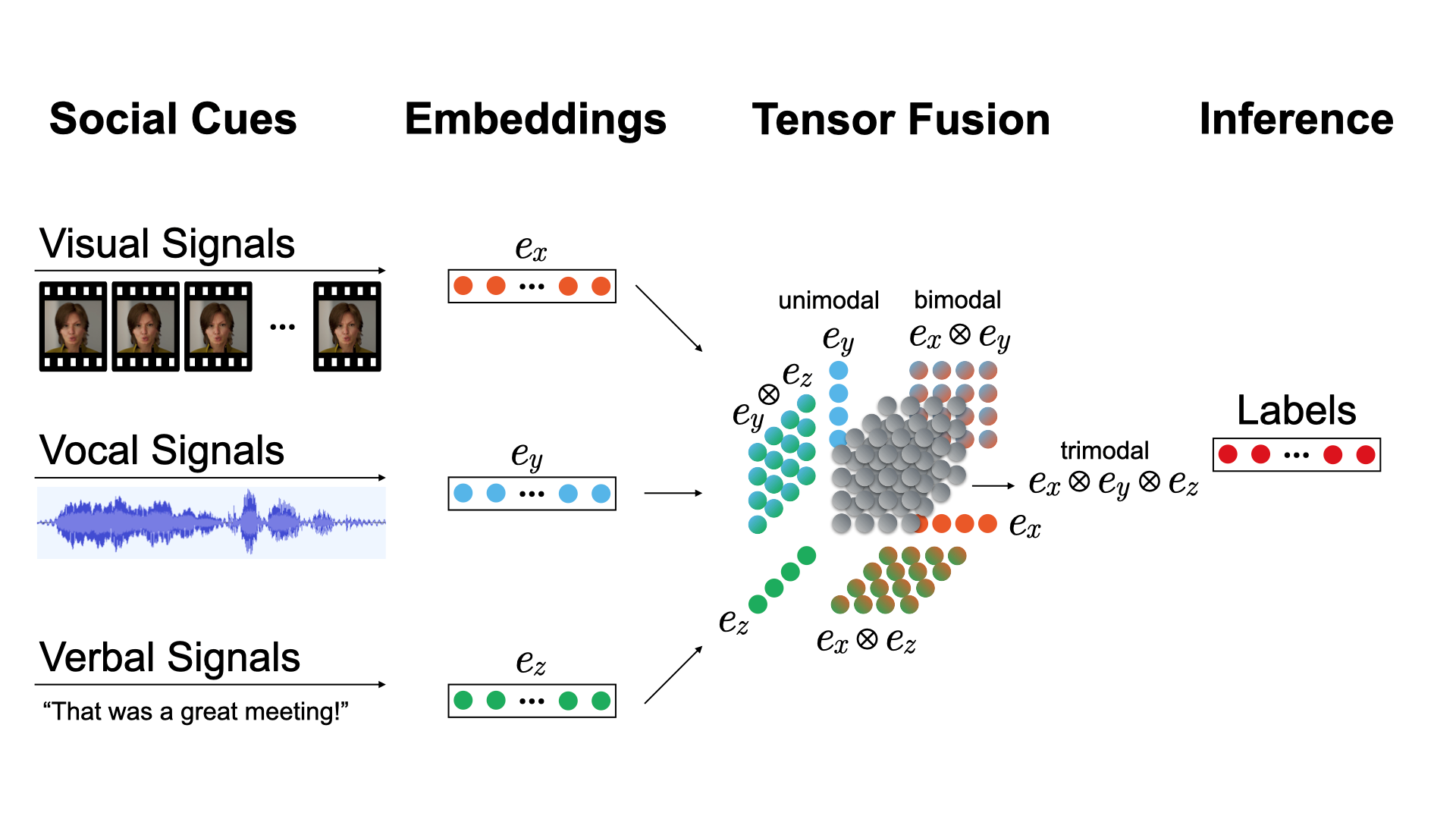

We have developed different tools that automatically analyze the audiovisual content of a meeting. Complex multimodal signals shape interactions in meetings as they involve the “three Vs of communication”: (i) vocal, (ii) visual, and (iii) verbal. This includes spoken words, text, images, as well as non-verbal behavior.

In particular, group meetings rely heavily on non-verbal cues to convey information through multiple channels. These include visual cues such as kinesics (e.g., body language, gestures, and posture) and oculesics (e.g., gaze focus and direction, eye movement and contact), as well as paralinguistic elements, which are non-verbal aspects of speech such as tone, loudness, or tempo. Additionally, non-verbal communication involves physical appearance, haptics, chronemics (i.e., the use of time in non-verbal communication), and extralinguistic aspects, which surround the communicative act by providing the context, purpose, and setting of the communication. Social signals denote the interpretation of such social cues. Separating social cues (i.e., actions) from social signals (i.e., their interpretations) is essential for understanding social interactions.

The multimodal nature of social signals in virtual group meetings introduces additional challenges to the automated analysis of individual and group affect, cognition, and behavior. While supervised deep learning (DL) approaches can link and weight modalities, generative approaches can capture the joint distribution across modalities and flexibly compensate for lossy data. Previous work in this context has focused on limited aspects such as sentiment analysis, and typically does not account for intra- and inter-modality dynamics directly. Instead, the vast majority of multimodal fusion is based either on (i) early fusion (i.e., feature-level), in which features are integrated by concatenating the representations of each modality, or on (ii) late fusion (i.e., decision-level), in which the integration is performed after the decision (e.g., classification or regression) by averaging, weighting, or voting schemes. While early fusion learns to exploit the correlations and interactions between low-level features of each modality in a single model, noisy and lossy data significantly reduce the quality of its output. Late fusion, on the other hand, uses a separate model for each modality, which allows more flexibility and robustness, but it ignores the low-level interactions between modalities.
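The difference between the two fusion strategies can be made concrete with a minimal Python sketch. It is an illustration under stated assumptions, not the project's implementation: the function names, the example feature values, and the choice to skip missing modalities (marked as `None`) in late fusion are all hypothetical.

```python
def early_fusion(features_by_modality):
    """Feature-level fusion: concatenate per-modality feature vectors.

    The result would be fed into a single model. Modalities are visited in
    sorted name order so that the layout of the fused vector is stable.
    """
    fused = []
    for name in sorted(features_by_modality):
        fused.extend(features_by_modality[name])
    return fused


def late_fusion(scores_by_modality, weights=None):
    """Decision-level fusion: weighted average of per-modality scores.

    Assumption for illustration: a modality whose score is None (e.g., the
    camera was switched off) is skipped instead of breaking the fusion.
    """
    available = {m: s for m, s in scores_by_modality.items() if s is not None}
    if not available:
        raise ValueError("no modality produced a decision")
    if weights is None:
        weights = {m: 1.0 for m in available}
    total = sum(weights[m] for m in available)
    return sum(weights[m] * s for m, s in available.items()) / total


# Hypothetical feature vectors for the three Vs of communication.
features = {"vocal": [0.2, 0.7], "visual": [0.1], "verbal": [0.9, 0.4]}
fused_vector = early_fusion(features)

# Hypothetical per-modality sentiment scores; the visual stream is missing.
fused_score = late_fusion({"vocal": 0.8, "visual": None, "verbal": 0.4})
print(fused_vector, fused_score)
```

In this sketch, early fusion has no natural way to handle the missing visual features, whereas late fusion averages over whatever decisions are available; conversely, only the concatenated vector gives a single model access to cross-modal feature interactions.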

The following Figure illustrates how we can fuse multimodal tensors with three types of subtensors: (i) unimodal, (ii) bimodal and (iii) trimodal:

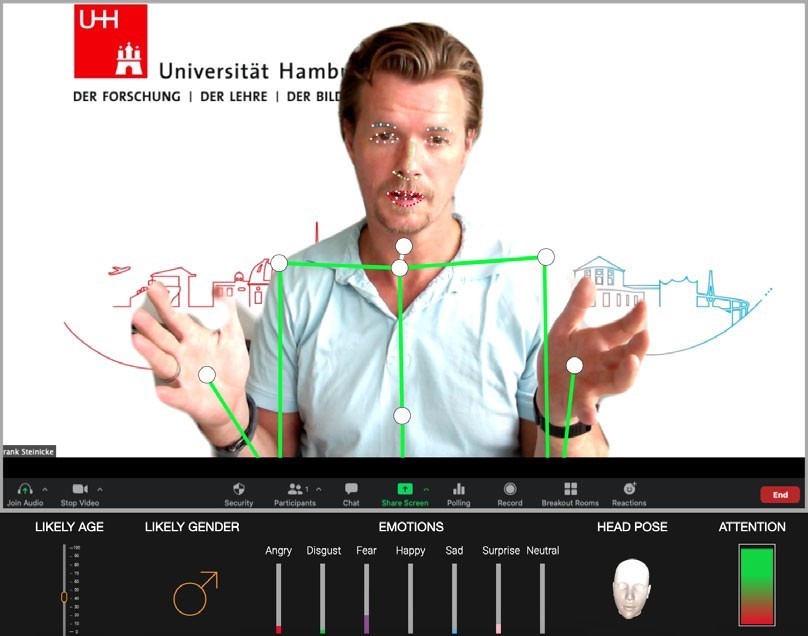
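One common way to obtain unimodal, bimodal, and trimodal subtensors in a single structure is to extend each modality embedding with a constant 1 and take the three-way outer product. The Python sketch below assumes this construction for illustration; whether it matches the fusion shown in the figure in every detail is an assumption, and the function name and example values are hypothetical.

```python
def tensor_fusion(vocal, visual, verbal):
    """Three-way outer product of modality embeddings extended by a constant 1.

    With a = [1] + vocal, b = [1] + visual, c = [1] + verbal, the entry
    T[i][j][k] = a[i] * b[j] * c[k] falls into one of these regions:
      - exactly one index > 0:  unimodal subtensor (the raw embedding)
      - exactly two indices > 0: bimodal subtensor (pairwise products)
      - all three indices > 0:   trimodal subtensor (three-way products)
    T[0][0][0] is the constant 1.
    """
    a = [1.0] + list(vocal)
    b = [1.0] + list(visual)
    c = [1.0] + list(verbal)
    return [[[x * y * z for z in c] for y in b] for x in a]


# Tiny hypothetical one-dimensional embeddings, chosen so products are easy to read.
T = tensor_fusion(vocal=[2.0], visual=[3.0], verbal=[5.0])

unimodal = (T[1][0][0], T[0][1][0], T[0][0][1])
bimodal = (T[1][1][0], T[1][0][1], T[0][1][1])
trimodal = T[1][1][1]
print(unimodal, bimodal, trimodal)
```

The fused tensor grows with the product of the embedding sizes, so in practice the per-modality embeddings would be kept small before such a fusion step.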

This analysis then helps to interpret social signals during virtual group meetings, as illustrated in the following figures:

Downloads

The virtual group meetings infrastructure can be partly downloaded from our GitHub:

Project Partners

https://www.inf.uni-hamburg.de/en/inst/ab/hci.html

https://www.psy.uni-hamburg.de/en/arbeitsbereiche/sozialpsychologie.html

https://www.psy.uni-hamburg.de/arbeitsbereiche/arbeits-und-organisationspsychologie

Contact

Prof. Dr. Frank Steinicke, Sebastian Rings

Publications

Allen, J. A., & Lehmann-Willenbrock, N. (2023). The key features of workplace meetings: Conceptualizing the why, how, and what of meetings at work. Organizational Psychology Review, vol. 13, no. 4, pp. 355–378.

Lehmann-Willenbrock, N., Rogelberg, S. G., Allen, J. A., & Kello, J. E. (2017). The critical importance of meetings to leader and organizational success: Evidence-based insights and implications for key stakeholders. Organizational Dynamics, vol. 47, no. 1, p. 32.

Schmidt, S., Rolff, T., Voigt, H., Offe, M., & Steinicke, F. (2024). Natural expression of a machine learning model’s uncertainty through verbal and non-verbal behavior of intelligent virtual agents. In Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology, pp. 1–15.

Schmidt, S., Zimmermann, S., Mason, C., & Steinicke, F. (2022). Simulating Human Imperfection in Temporal Statements of Intelligent Virtual Agents. In CHI 2022: ACM Conference on Human Factors in Computing Systems, pp. 1–15.

Lehmann-Willenbrock, N. (2024). Dynamic interpersonal processes at work: Taking social interactions seriously. Annual Review of Organizational Psychology and Organizational Behavior, vol. 12.

Steinicke, F., Lehmann-Willenbrock, N., & Meinecke, A. (2020). A First Pilot Study to Compare Virtual Group Meetings using Video Conferences and (Immersive) Virtual Reality. In ACM Symposium on Spatial User Interaction, pp. 1–2.

Lehmann-Willenbrock, N., Hung, H., & Keyton, J. (2017). New frontiers in analyzing dynamic group interactions: Bridging social and computer science. Small Group Research, vol. 48, no. 5, pp. 519–531.

Grabowski, M., Lehmann-Willenbrock, N., Rings, S., Blanchard, A., & Steinicke, F. (2024). Group dynamics in the metaverse: A conceptual framework and first empirical insights. Small Group Research, pp. 763–804.

Grabowski, M., Rings, S., Lehmann-Willenbrock, N., & Steinicke, F. (2023). Exploring virtual student meetings in the metaverse: Experiential learning and emergent group entitativity. In Application of the Metaverse in Education, A. Hosny, Ed. Springer.

Lehmann-Willenbrock, N., & Hung, H. (2024). A multimodal social signal processing approach to team interactions. Organizational Research Methods, vol. 27, no. 3, pp. 477–515.

Freiwald, J., Schenke, J., Lehmann-Willenbrock, N., & Steinicke, F. (2021). Effects of Avatar Appearance and Locomotion on Co-Presence in Virtual Reality Collaborations. In Proceedings of Mensch und Computer (MuC).

Rings, S., & Steinicke, F. (2022). Conference contribution (paper in conference proceedings). In Proceedings - 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW 2022), pp. 333–337. Institute of Electrical and Electronics Engineers Inc. DOI: 10.1109/vrw55335.2022.00075

Rings, S., Kruse, L., Grabowski, M., & Steinicke, F. (2024). Conference contribution (paper in conference proceedings). In A Metaverse for the Good: The European Metaverse Research Network Proceedings.

Funding

This work was supported by a grant from the Landesforschungsförderung of the City of Hamburg as part of the Research Group “Mechanisms of Change in Dynamic Social Interactions” (LFF-FV79), as well as by the Cross-Disciplinary Lab “Computational Human Dynamics”.