Journal Article on Speaking Activity Localisation without Prior Knowledge

23 November 2023

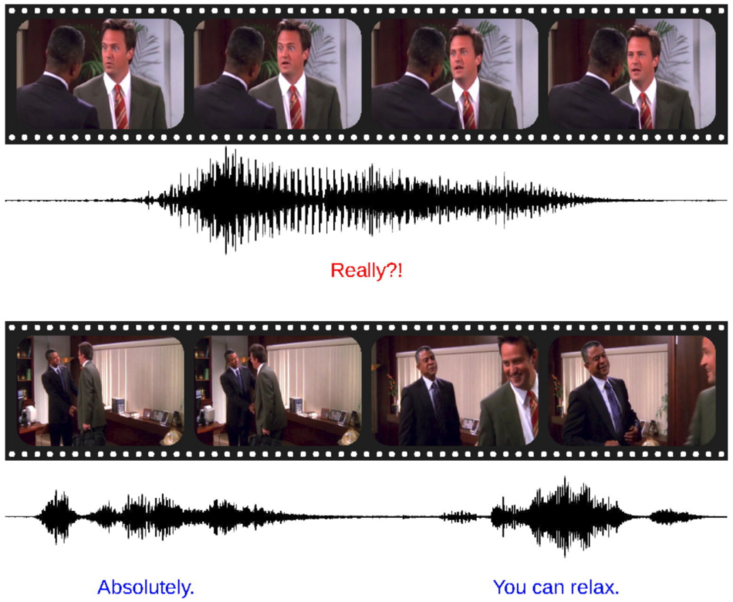

Our journal article “Whose Emotion Matters? Speaking Activity Localisation without Prior Knowledge” is available online in Neurocomputing. In this study, we address the longstanding lack of reliable audio-visual information for multimodal emotion recognition in multi-party conversations in the wild. Using automatic speech recognition, connectionist temporal classification (CTC) segmentation for forced alignment, and active speaker detection, we realign the auditory and visual data of MELD, a benchmark in-the-wild multimodal dataset for emotion recognition in multi-party conversations. Comparative analyses show that facial expressions extracted from the realigned dataset are more reliable and more useful for emotion recognition than the visual information used by state-of-the-art models.
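To illustrate how the outputs of forced alignment and active speaker detection might be combined for a single utterance, here is a minimal sketch. It is not taken from the published repository: the names `AlignedWord`, `FaceTrack`, `utterance_span`, and `pick_speaker` are hypothetical, and selecting the face track with the highest mean speaking score is an assumed simplification.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class AlignedWord:
    """One word with timestamps (seconds), as a forced aligner might return it."""
    text: str
    start: float
    end: float


@dataclass
class FaceTrack:
    """One tracked face with a per-frame speaking score (hypothetical format)."""
    track_id: int
    speaking_scores: List[float]


def utterance_span(words: List[AlignedWord], padding: float = 0.0) -> Tuple[float, float]:
    """Return the (start, end) of an utterance from its aligned words."""
    if not words:
        raise ValueError("no aligned words for this utterance")
    start = max(0.0, min(w.start for w in words) - padding)
    end = max(w.end for w in words) + padding
    return start, end


def pick_speaker(tracks: List[FaceTrack]) -> FaceTrack:
    """Pick the face track with the highest mean speaking score (assumed rule)."""
    scored = [t for t in tracks if t.speaking_scores]
    if not scored:
        raise ValueError("no face track has speaking scores")
    return max(scored, key=lambda t: sum(t.speaking_scores) / len(t.speaking_scores))
```

Given the aligned words of an utterance and the face tracks found in the corresponding video segment, `utterance_span` yields the time window to cut, and `pick_speaker` yields the face whose expressions would be kept.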

The open-access article on the publisher's website can be found here.

The public repository containing the realignment code and the pre-generated realignment data can be found here.