Thesis Offers

FAQ

Which courses should I have taken before starting a thesis at WTM?

Our research covers artificial neural networks, machine learning, data preprocessing and analysis, experiment design, and human-robot interaction.

Bachelor students should preferably have attended the lectures Data Mining or Grundlagen der Wissensverarbeitung, the Praktikum Neuronale Netze, our Bachelor project, and the Proseminar Artificial Intelligence.

Master students should preferably have attended the lectures Bio-Inspired AI, Neural Networks, Knowledge Processing, Research Methods, Intelligent Robotics, and our Master project. Additional attendance of specific lectures offered by the groups Computer Vision, Language Technology, and Signal Processing is a plus.

Prerequisites for a successful thesis are reasonable programming skills and a solid mathematical background, so that you can understand the computational principles of network models and successfully build on them.

Who are my reviewers?

For Bachelor students:

First reviewer: Prof. Wermter or any WTM group member with a doctoral degree

Second reviewer: any WTM group member with a doctoral degree, or a doctoral candidate who is experienced both in the research area and in advising students

For Master students:

First reviewer: Prof. Wermter

Second reviewer: any WTM group member with a doctoral degree

When should I register my thesis?

Every thesis needs to be registered at the Academic Office (Studienbüro). The exact date is arranged by the student in agreement with the advisor.

What is a thesis exposé?

A thesis exposé is a document summarizing the thesis topic, the thesis goal(s), and the expected contributions. It helps students to focus on their topic and to clarify the expected achievements of a thesis, since the time and scope differ between a Bachelor and a Master thesis.

As a guideline, the following sections should be included:

- Motivation of the topic, Related Work (based on your literature research)

- Research question(s) derived from it

- Selected methods used to address the research question(s), tools and software

- For HRI experiments: description of the scenario

- Expected results and contributions

- Time plan: Implementation phase and writing phase; expected final thesis draft

Your advisor will give you further help and specific feedback.

Why and how should I conduct a literature search, and which tools are available for it?

In your thesis you should be able to show that you are aware of the most important publications and the current state of the art in your selected research area. This allows you to carve out possible limitations of known methods and the novelty or improvement of your approach, which you then also have to discuss in your thesis.

Your advisor will give you some initial papers, which you can use as a basis for further literature search. Common sources are Google Scholar or specific journal or publisher webpages (e.g. IEEE Xplore, ACM, Elsevier).

To keep a reasonable overview of the papers you will repeatedly reference in your thesis, it is helpful to use a literature manager. We have created a list of popular tools:

https://www.inf.uni-hamburg.de/en/inst/ab/wtm/teaching/hints-seminar.html

The department library regularly offers 'tips and tricks' for a successful literature search. Check your mailbox for the latest announcement or look up the dates here:

https://www.inf.uni-hamburg.de/inst/bib/service/training.html

What is a thesis outline, and how should I structure a thesis?

A thesis consists roughly of the following sections:

- Introduction

  - Motivation

  - Related Work

  - Chapter Guidance

- Methods

  - Data description

  - Experimental setup (for HRI: description of scenario, experimental protocol)

  - Network architecture and configurations

- Results and Evaluation

- Discussion

  - Summary

  - Discussion of your approach (pro and contra)

  - Discussion of your work connected to your "Related Work"

  - Future Work

- Conclusion

Your advisor will help you with the concrete structure.

How many pages should the thesis have?

We get this question very often, but we still cannot give you a definite number. A more theoretical thesis with formulas can be written more densely than an experimental thesis, since the description of the scenario, the robot, or figures from the interaction already take up space. Rather than aiming for quantity, focus on quality, i.e. correct literature citations, high-resolution pictures, use of a grammar and spell checker, etc. Delivering drafts of individual chapters to your advisor gives you the chance to get valuable feedback in time, which in turn will give you more confidence in your thesis document as a whole.

Where and when do I register for the thesis defense (Oberseminar)?

Every student defends her or his thesis in an "Oberseminar" slot of our group. Our Oberseminar is scheduled Tuesdays, 2:15pm. The date must be scheduled within 6 weeks after handing in the thesis to the Studienbüro. Make sure that your reviewers will have enough time to read your thesis before the defense.

For a specific date, please write an email with the subject "Oberseminar Your_Name" to hafez@informatik.uni-hamburg.de (jirak"AT"informatik.uni-hamburg.de) and cc your reviewers.

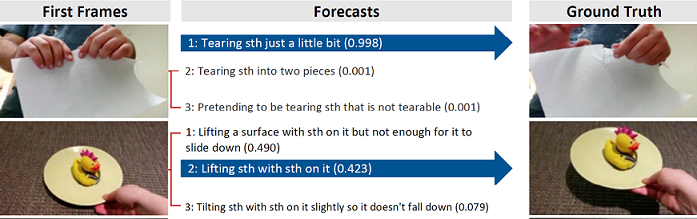

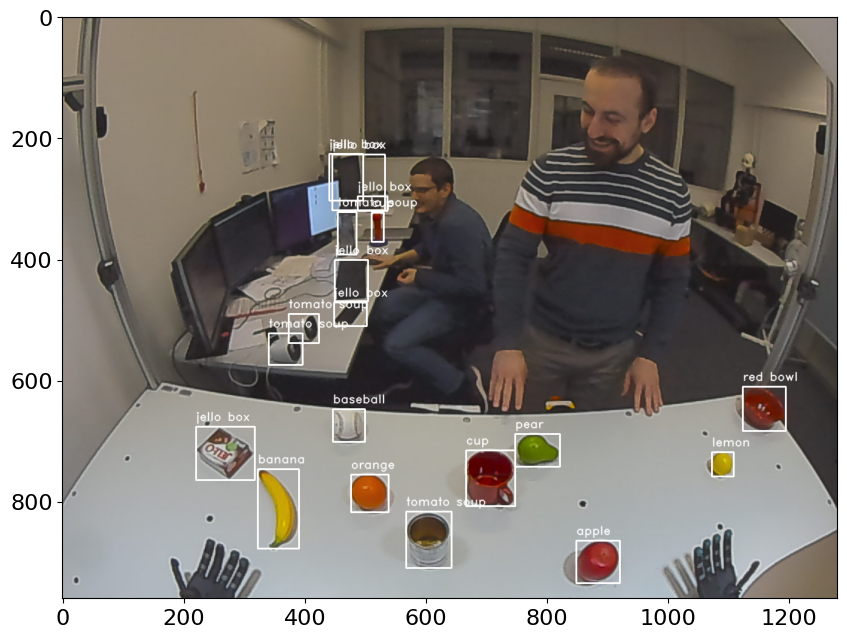

Open Vocabulary Object Detection

Object detectors like Mask R-CNN and YOLO are very good at locating and classifying known objects within images. In order to be successful though, these detectors generally need to be explicitly trained on a fixed number of classes with many (tens of) thousands of input samples. While the detectors are easy to use and train, obtaining and/or curating the appropriate training dataset for a particular set of objects is generally prohibitive and time-consuming. Open vocabulary object detectors can overcome this need for specialised training datasets, by locating objects in the image and classifying them with the use of language models. This allows novel objects to be detected simply by specification of their class in the form of (possibly descriptive) text. This thesis topic aims to construct an open vocabulary object detector, possibly based on YOLOv8 [1] and CLIP [2], while trying to learn from design decisions made by ViLD [3], CORA [4], and POMP [5].
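The classification step described above can be illustrated with a minimal sketch: a region proposed by the detector is assigned the class whose text embedding is most similar to the region's image embedding. The three-dimensional vectors, the prompt strings, and the function names below are hypothetical placeholders; in a real system the embeddings would come from image and text encoders such as those of CLIP [2], and this sketch makes no claim about how YOLOv8, ViLD, CORA, or POMP implement this step.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def classify_region(region_embedding, text_embeddings):
    """Return the best-matching text label and all similarity scores."""
    scores = {
        label: cosine_similarity(region_embedding, embedding)
        for label, embedding in text_embeddings.items()
    }
    best_label = max(scores, key=scores.get)
    return best_label, scores


# Toy embeddings standing in for real encoder outputs (hypothetical values).
text_embeddings = {
    "a photo of a traffic cone": [0.9, 0.1, 0.0],
    "a photo of a coffee mug": [0.1, 0.9, 0.1],
    "a photo of a robot": [0.0, 0.2, 0.9],
}
region_embedding = [0.8, 0.2, 0.1]

label, scores = classify_region(region_embedding, text_embeddings)
print(label)
```

Adding a new class in this scheme only requires adding a new text prompt and its embedding, which is what removes the need for a class-specific training dataset.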

Useful skills:

- Expert Python 3 programming skills (including experience working on/with large open-source libraries)

- Extensive experience using PyTorch and related libraries

- Interest in self-driven/independent study where you can implement and academically publish your ideas

Reference:

[1] YOLOv8: https://github.com/ultralytics/ultralytics

[2] CLIP: https://github.com/openai/CLIP

[3] ViLD: https://github.com/tensorflow/tpu/tree/master/models/official/detection/projects/vild

[4] CORA: https://github.com/tgxs002/CORA

[5] POMP: https://github.com/amazon-science/prompt-pretraining

Contact:

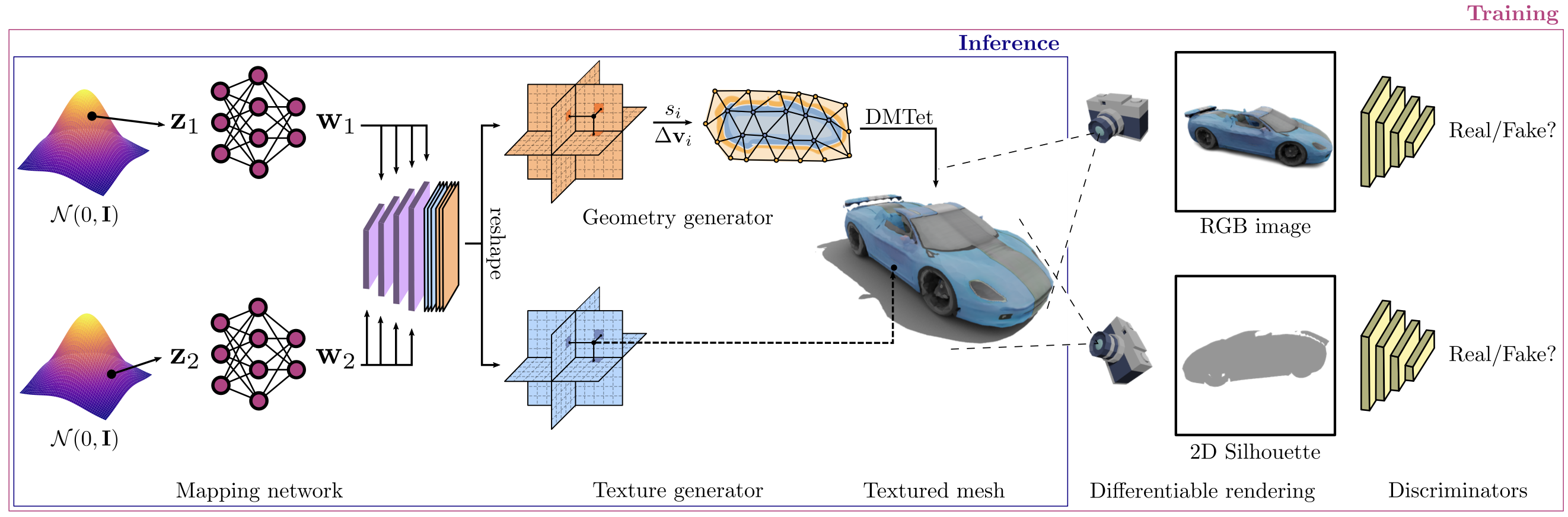

Image-prompted Textured 3D Model Generation

Recent works have made astounding progress toward generative models that can produce high quality 3D textured models. One example of such a model is Get3D [1], which is able to synthesise new models of a particular kind (whatever kind it was trained on, e.g. cars) based on a latent space input. Get3D was trained on subsets of the ShapeNet dataset [2], which provides many CAD models of different object classes. The task of this thesis is to extend the generative pipeline, ideally starting from the pretrained Get3D model, to allow image prompting, potentially first based on ShapeNet and then later based on images, e.g. from the ApolloCar3D dataset [3]. The aim is that a cropped and background-removed photo of a car can ultimately be used to generate a closely matching 3D textured car model, as well as estimate the orientation/viewpoint of the car in the photo.

Useful skills:

- Expert Python 3 programming skills (including experience working on/with large open-source libraries)

- Extensive experience using PyTorch and related libraries

- Interest in self-driven/independent study where you can implement and academically publish your ideas

Reference:

[1] Get3D / Image: https://github.com/nv-tlabs/GET3D

[2] ShapeNet: https://shapenet.org

[3] ApolloCar3D: https://apolloscape.auto/car_instance.html

Contact:

Gesture Recognition for Robot-Human Communication Using Transformers

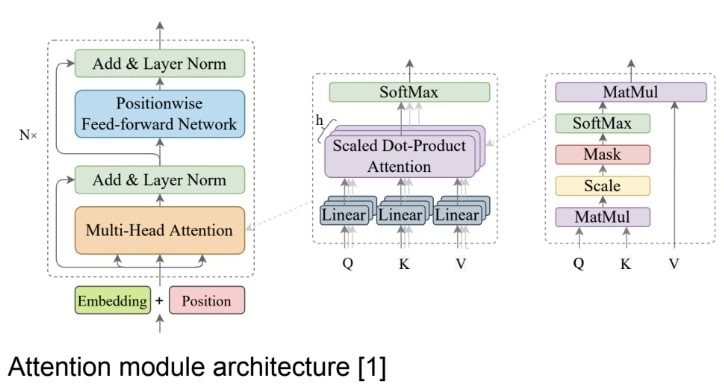

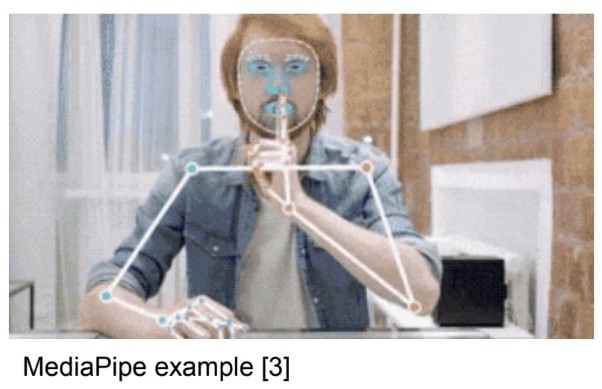

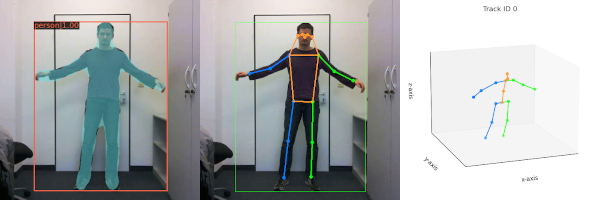

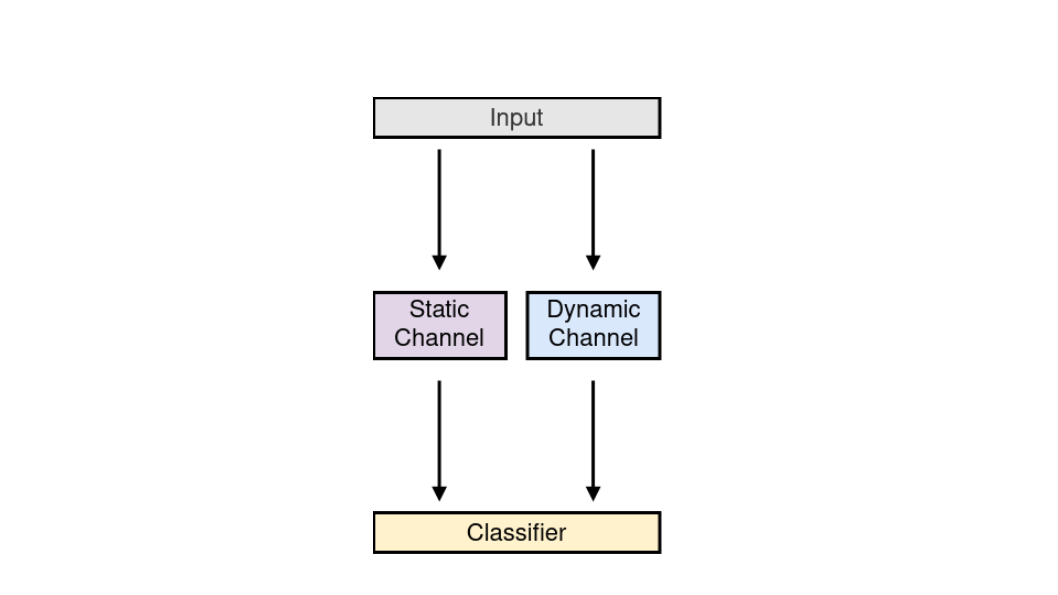

Transformers are currently state-of-the-art models for natural language processing, and they have started to make a strong impression in the computer vision field as well [1]. This research project aims to design a novel architecture that exploits the capabilities of attention-based models such as transformer networks and to provide a robust methodology for improving human-robot interaction through the recognition of gestures as a means of communication. Here at WTM we have developed Snapture [2], an architecture that enables learning static and dynamic gestures. This architecture is based on convolutional neural networks and LSTMs. Building on this research, we now want to explore the potential of transformer architectures. One possible way to exploit a transformer architecture for gesture recognition is to pair it with a strong feature extractor such as MediaPipe [3], a general-purpose open-source machine learning project developed by Google with robust pose estimation capabilities.

Goals:

- Design a novel deep neural network architecture based on transformers for gesture recognition

- Implement, train and evaluate the new architecture using a standard benchmark for gesture recognition

- Possibly create and make publicly available a new dataset for gesture recognition in the context of human-robot communication

Useful skills:

- Proficiency in Python coding

- Interest in human-robot communication

- Experience programming and training deep neural networks

Reference:

[1] Z. Cao, Y. Li, B-S. Shin. "Content-Adaptive and Attention-Based Network for Hand Gesture Recognition" [PDF]

[2] H. Ali, D. Jirak, S. Wermter (2022) "Snapture - A Novel Neural Architecture for Combined Static and Dynamic Hand Gesture Recognition" [PDF]

[3] MediaPipe

Contact:

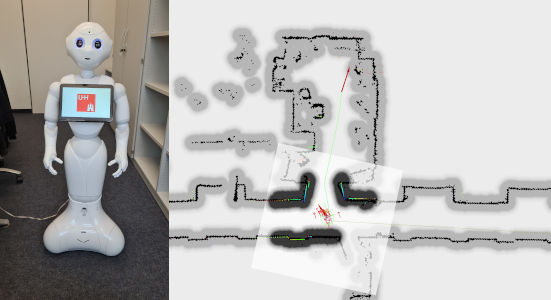

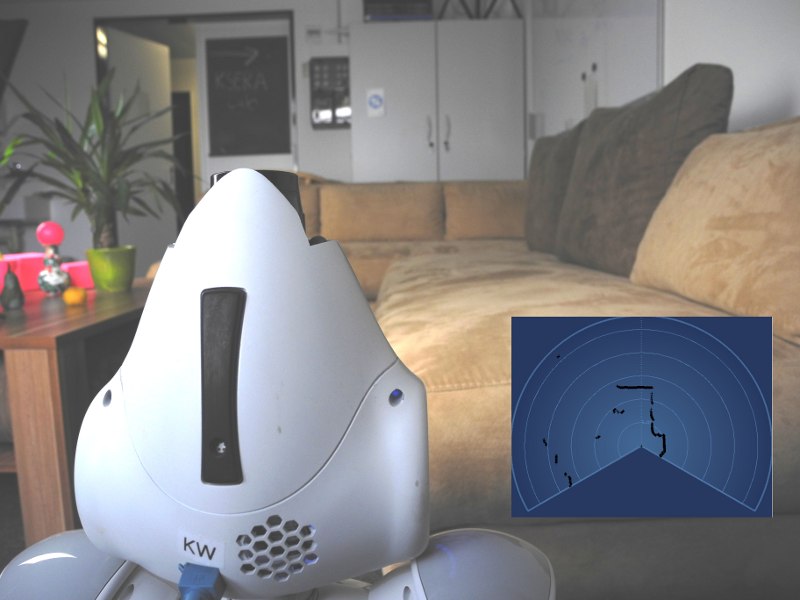

Localisation and Navigation of Humanoid Pepper Robot

The ability to navigate through a semi-dynamic environment is an important skill for a humanoid robot. This skill extends its range and field of applicability for human-robot interaction scenarios. In recent work, we have fitted a 2D lidar sensor [1] to one of our Pepper robots [2], and established localisation and navigation abilities within simple office scenarios. The assembled navigation stack uses Adaptive Monte Carlo Localisation (AMCL) for localisation [3], and a mix of global and local path planners for navigation. The use of a neural method for the purposes of navigation is to be investigated, possibly inspired for instance by Neural SLAM [4], or neural lidar odometry methods such as CAE-LO [5], PSF-LO [6], or the probabilistic trajectory estimator used in [7].

As a reference navigation approach, a new and more robust localisation and navigation stack should be developed, which applies a Simultaneous Localisation and Mapping (SLAM) method, and concurrently registers the output thereof against a known 2D reference map. The navigation strategy needs to allow path planning to targets that are outside the current SLAM map, but within the more comprehensive reference map. As an extension goal, any need for manual localisation initialisation should be alleviated using a global matching strategy between local laser scans and the reference map. The performance of the final navigation stack should be experimentally compared to the existing one.
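To make the Monte Carlo localisation idea behind AMCL [3] more concrete, the following minimal sketch runs a plain particle filter in a one-dimensional corridor. It is an illustration under simplifying assumptions, not the navigation stack used on the Pepper robot: the robot moves along a line, measures its distance to a wall at a known position, and the measurements in the demo are noise-free. The adaptive part of AMCL, which changes the number of particles over time, is omitted, and all names and noise parameters are hypothetical.

```python
import math
import random


def gaussian_likelihood(error, sigma):
    """Unnormalised Gaussian likelihood of a measurement error."""
    return math.exp(-0.5 * (error / sigma) ** 2)


def mcl_step(particles, control, measurement, wall_x, rng,
             motion_sigma=0.1, sensor_sigma=0.3):
    """One predict / weight / resample cycle of Monte Carlo localisation."""
    # 1. Motion update: apply the control to every particle, with noise.
    moved = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]
    # 2. Measurement update: weight each particle by how well the range it
    #    would expect to the wall matches the range actually measured.
    weights = [
        gaussian_likelihood(measurement - (wall_x - p), sensor_sigma)
        for p in moved
    ]
    # 3. Resampling: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def run_demo(seed=0, n_particles=500, n_steps=10):
    """Track a robot driving 0.5 m per step towards a wall at x = 10 m."""
    rng = random.Random(seed)
    wall_x = 10.0
    true_x = 2.0
    # Global uncertainty at the start: particles spread over the corridor.
    particles = [rng.uniform(0.0, wall_x) for _ in range(n_particles)]
    for _ in range(n_steps):
        true_x += 0.5
        measurement = wall_x - true_x  # noise-free range reading (assumption)
        particles = mcl_step(particles, 0.5, measurement, wall_x, rng)
    estimate = sum(particles) / len(particles)
    return true_x, estimate


true_x, estimate = run_demo()
print(f"true position: {true_x:.2f} m, estimate: {estimate:.2f} m")
```

Because the particles start out spread over the whole corridor, the sketch also hints at the global localisation initialisation problem mentioned above: no manual initial pose is needed as long as the measurements disambiguate the position.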

Goals:

- Apply and tune a SLAM method for 2D robot localisation on the Pepper robot

- Implement a path planning/navigation strategy that efficiently utilises the omnidirectional capabilities of the Pepper robot in order to drive to a global pose goal while avoiding obstacles

- Possibly develop a robust global localisation initialisation strategy

Useful skills:

- High proficiency in object-oriented C++ and Python coding

- Prior experience with the Robot Operating System (ROS) middleware and PyTorch

- Interest in navigation and mapping using mobile robots

- Experience working with Raspberry Pi and/or robotic systems

Reference:

[1] YDLidar G2 Sensor Datasheet

[2] SoftBank Robotics Pepper robot

[3] D. Fox, W. Burgard, F. Dellaert, and S. Thrun (1999), “Monte carlo localization: Efficient position estimation for mobile robots” [PDF]

[4] J. Zhang, L. Tai, M. Liu, J. Boedecker, and W. Burgard (2020), "Neural SLAM: Learning to Explore with External Memory" [PDF]

[5] D. Yin, Q. Zhang, J. Liu, X. Liang, Y. Wang, J. Maanpää, H. Ma, J. Hyyppä, and R. Chen (2020), "CAE-LO: LiDAR Odometry Leveraging Fully Unsupervised Convolutional Auto-Encoder Based Interest Point Detection and Feature Description" [PDF]

[6] G. Chen, B. Wang, X. Wang, H. Deng, B. Wang, and S. Zhang (2021), "PSF-LO: Parameterized Semantic Features Based Lidar Odometry" [PDF]

[7] D. Yoon, H. Zhang, M. Gridseth, H. Thomas, T. Barfoot (2021), "Unsupervised Learning of Lidar Features for Use in a Probabilistic Trajectory Estimator" [PDF]

Contact:

Multi-agent Planning with Large Language Models

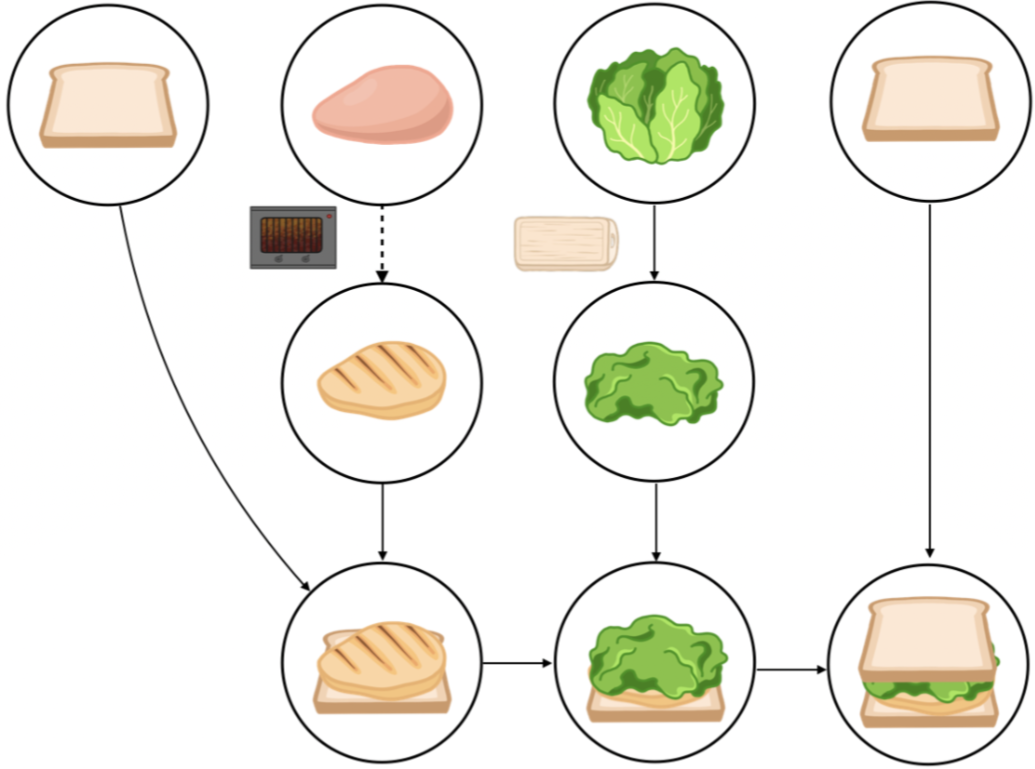

While large language models (LLMs) show promise in high-level task planning, most current methods lack asynchronous planning capabilities for multiple agents. In our recent paper [1], we propose LLM+MAP, a framework that combines the Planning Domain Definition Language (PDDL) with LLMs to let our NICOL humanoid robot perform long-horizon bimanual tasks. There is great potential to extend our framework to various planning domains. ROBOTOUILLE [2] is a benchmark for evaluating asynchronous planning through tasks that take time, like cooking meat for burgers or sandwiches or filling up a pot with water to cook soup. In this topic, we will explore applying LLM+MAP to diverse tasks in this benchmark. Work on this topic is supported by the OpenAI Researcher Access Program in 2025, which provides sufficient API tokens.

Useful skills:

- Python programming skills (including experience working on/with large language models)

- Interest in self-driven/independent study where you can implement and academically publish your ideas.

References:

[1] Chu, Kun, Xufeng Zhao, Cornelius Weber, and Stefan Wermter. "LLM+MAP: Bimanual Robot Task Planning using Large Language Models and Planning Domain Definition Language." arXiv preprint arXiv:2503.17309 (2025).

[2] Gonzalez-Pumariega, Gonzalo, Leong Su Yean, Neha Sunkara, and Sanjiban Choudhury. "Robotouille: An Asynchronous Planning Benchmark for LLM Agents." ICLR 2025.

Contact:

General Purpose Human Pose Estimation

Human pose estimation is a field that has seen many advances in the past years, and it is important in human-robot interaction scenarios for greater robot situational awareness. We wish to focus on the detection and holistic understanding, from monocular images, of human body parts and keypoints in both 2D and 3D. This is a broad topic that offers a multitude of research directions for a prospective thesis, which more distant future work would seek to unite. The following individual possible goals are considered:

- Can a feature backbone successfully be shared for human bounding box detection and 2D keypoint detection?

  Bounding box detection and keypoint detection are both time-intensive processes, and performing both concurrently in a single network offers computational savings as well as the sharing of knowledge, i.e. the visual understanding of human body parts [1] [2] [3]. Refer to new research in this direction [9].

- Can 3D keypoint detection performance be improved by including the backbone feature maps as further inputs?

  3D keypoint estimators typically only receive 2D keypoint detection coordinates as input [4], thereby not allowing the network to learn from any visual context that can provide hints regarding limb depth and/or positional ambiguity.

- Can 2D keypoint detection performance in the wild be improved by training on multiple united datasets?

  Single datasets can lack the complete diversity required to train networks that are also effective in the wild [5].

- Can a more holistic understanding of the appearance of the human body be learnt by concurrently training multiple related tasks?

  Examples of candidate tasks in addition to 2D keypoint estimation include instance segmentation, body part segmentation [6], dense pose estimation [7], and more if considering 3D pose estimation [8]. Related idea [10].

- Can temporal information be used to improve 2D keypoint detection robustness for video sequences?

  It is common for 3D keypoint estimators to use sequences of multiple frames in order to improve their estimation quality in the face of ambiguities [4]. Can 2D keypoint detectors also benefit in order to help deal with body part occlusions?

Useful skills:

- Expert Python 3 programming skills (including experience working on/with large open-source libraries)

- Extensive experience using PyTorch and related libraries

- Interest in self-driven/independent study where you can implement and academically publish your ideas

Reference:

[1] Z. Ge, S. Liu, F. Wang, Z. Li, and J. Sun, "YOLOX: Exceeding YOLO Series in 2021", arXiv preprint arXiv:2107.08430, 2021. [PDF]

[2] D. Bolya, C. Zhou, F. Xiao, Y. Lee, "YOLACT++: Better Real-time Instance Segmentation", arXiv preprint arXiv:1912.06218, 2020. [PDF]

[3] Z. Huang, L. Huang, Y. Gong, C. Huang, X. Wang, "Mask Scoring R-CNN", arXiv preprint arXiv:1903.00241, 2019. [PDF]

[4] D. Pavllo, C. Feichtenhofer, D. Grangier, M. Auli, "3D human pose estimation in video with temporal convolutions and semi-supervised training", arXiv preprint arXiv:1811.11742, 2019. [PDF]

[5] J. Lambert, Z. Liu, O. Sener, J. Hays, V. Koltun, "MSeg: A Composite Dataset for Multi-domain Semantic Segmentation", arXiv preprint arXiv:2112.13762, 2021. [PDF]

[6] W. Wang, Z. Zhang, S. Qi, J. Shen, Y. Pang, L. Shao, "Learning Compositional Neural Information Fusion for Human Parsing", arXiv preprint arXiv:2001.06804, 2020. [PDF]

[7] R. Güler, N. Neverova, I. Kokkinos, "DensePose: Dense Human Pose Estimation In The Wild", arXiv preprint arXiv:1802.00434, 2018. [PDF]

[8] A. Mertan, D. Duff, G. Unal, "Single Image Depth Estimation: An Overview", arXiv preprint arXiv:2104.06456, 2021. [PDF]

[9] D. Maji, S. Nagori, M. Mathew, D. Poddar, "YOLO-Pose: Enhancing YOLO for Multi Person Pose Estimation Using Object Keypoint Similarity Loss", arXiv preprint arXiv:2204.06806, 2022. [PDF]

[10] C. Wang, I. Yeh, H. Liao, "You Only Learn One Representation: Unified Network for Multiple Tasks", arXiv preprint arXiv:2105.04206, 2021. [PDF]

Contact:

Multi-modal Gesture Recognition

In human-human communication, gestures are essential components of non-verbal communication and have been identified as social cues in human-robot interaction (HRI). Co-speech gestures are delivered with speech and can enhance specific word meanings. Examples of co-speech gestures range from static poses like "OK" to dynamic hand movements during a public talk. While the recognition of those gesture forms is necessary to foster natural HRI scenarios, most research in gesture recognition has focused on only one aspect of gestures, either hand pose or dynamic gesture recognition. We recently tackled the integration of both modalities by introducing a novel neural network architecture called "Snapture" [1]. Snapture is a modular framework that learns both a specific finger pose and the arm movements involved in the gesture expression. Snapture is based on convolutional neural networks (CNNs), which learn hand poses, and long short-term memory networks (LSTMs), which model the temporal sequences. We showed the robustness of the architecture using the Montalbano co-speech benchmark dataset [2]. The Snapture framework offers a multitude of extensions for a prospective Master thesis:

- Improvement of the threshold-based activation of the static hand recognition channel for the flexible detection of hand poses in a sequence.

- Analysis of the neural network activations (e.g. Grad-CAM or embeddings) and integration of attention mechanisms to understand the working principles of the Snapture architecture.

- Integration of facial expressions in alignment with co-speech gestures to add an affective dimension to the gesture expression context.

- Optional (depending on the COVID-19 situation): Implementation of HRI co-speech scenarios with a humanoid robot.

The Snapture framework will be made available at the start of the Master thesis. We require profound knowledge of artificial neural networks, their learning principles, and network performance evaluation. Programming experience in Python is mandatory. Experience with gesture recognition in both human-human and human-robot interaction is a plus.

Useful skills:

- Profound knowledge of artificial neural networks

- Programming experience with Python

- Interest in gesture recognition

Reference:

[1] Hassan Ali: Snapture-A Hybrid Hand Gesture Recognition System, 2021

[2] https://chalearnlap.cvc.uab.es/dataset/13/description/

Contact:

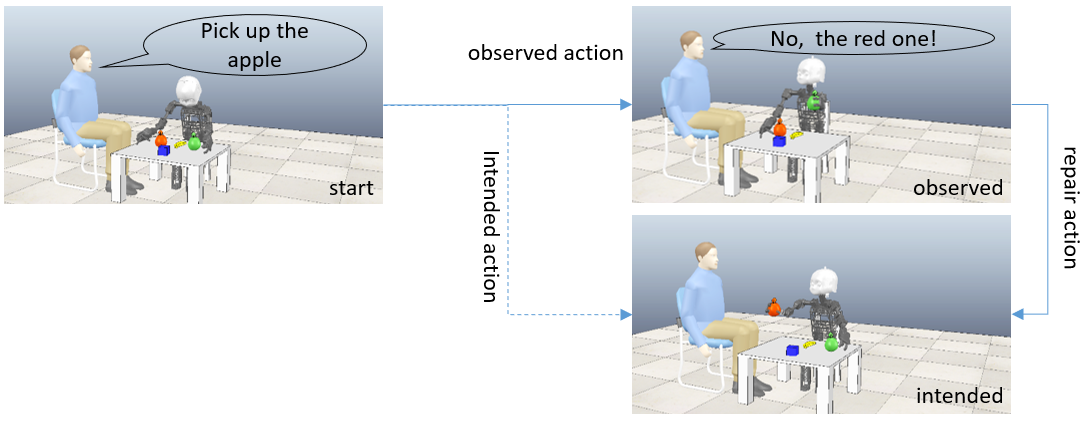

Compositional Object Representations in Multimodal Language Learning

In recent work [1], we tested the capability of a robot to describe what it is doing. It learnt to produce phrases like "push red pylon", even if it had never before seen a red pylon, but only pylons of other colors and other red objects. Moreover, additional irrelevant objects within the robot's field of view cause little disturbance. However, the performance drops drastically if there are not many color and object combinations in the dataset (while keeping the dataset at a constant size). In this thesis, we want to tackle this difficult case for generalization. We will investigate whether unsupervised pretraining (either with a small number of color and object combinations, or with a larger number, but always without labels) would help. We also plan to consider importing models for preprocessing that are pretrained on large vision-language datasets, such as ViLBERT [2]. We will validate the success of the extended model on the difficult multi-object cases in our earlier experiments [1].

Goals:

- Develop a model which describes an action performed in a scene on one of several objects

Useful skills:

- Neural networks

- Interest in 3D robot simulator CoppeliaSim

References:

[1] Aaron Eisermann, Jae Hee Lee, Cornelius Weber, Stefan Wermter. "Generalization in Multimodal Language Learning from Simulation", IJCNN 2021

[2] Lu et al. "ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks", NeurIPS 2019

Contact:

Continual Deep Reinforcement Learning

Reinforcement learning is an area of cutting-edge research [1,2]. You will extend existing work in goal-conditioned RL in which a simulated robot learns to interact with its environment. You will extend the approach so that the robot is able to learn continually like a human, without any interruption, pursuing multiple goals that it determines itself using a curious behavior model.

We will provide you with Python/PyTorch code, and, depending on your own preferences and previous experience, you will be able to extend the code to develop novel methods for agents that learn the dynamics of their environment by exploring it like a child. One possibility is to participate in the REAL challenge [3].

Goals:

- Enhance existing goal-conditioned reinforcement learning methods in order to use them for continual curiosity-driven learning.

- Apply your approach to the REAL challenge [3].
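As background for the first goal, the sketch below illustrates one common ingredient of goal-conditioned RL with sparse rewards: hindsight goal relabelling, the idea referred to in the title of [2]. An episode that failed to reach its original goal is stored a second time as if the state actually reached had been the goal, so that it still yields a useful learning signal. This is a generic toy illustration on a one-dimensional state, not the code that will be provided for the thesis; the function names, the tolerance, and the episode data are hypothetical.

```python
def sparse_reward(achieved, goal, tolerance=0.05):
    """Return 0.0 if the goal is reached within the tolerance, else -1.0."""
    return 0.0 if abs(achieved - goal) <= tolerance else -1.0


def relabel_with_final_state(episode):
    """Replace each transition's goal by the state reached at episode end.

    `episode` is a list of (state, action, next_state, goal) tuples. The
    result is a list of (state, action, next_state, new_goal, reward).
    """
    new_goal = episode[-1][2]
    return [
        (state, action, next_state, new_goal,
         sparse_reward(next_state, new_goal))
        for state, action, next_state, _original_goal in episode
    ]


# A failed toy episode: the agent should reach 1.0 but only gets to 0.3.
episode = [
    (0.0, 0.1, 0.1, 1.0),
    (0.1, 0.1, 0.2, 1.0),
    (0.2, 0.1, 0.3, 1.0),
]

original_rewards = [sparse_reward(s2, g) for _s, _a, s2, g in episode]
relabelled = relabel_with_final_state(episode)
relabelled_rewards = [transition[4] for transition in relabelled]

print(original_rewards)    # every step is penalised under the original goal
print(relabelled_rewards)  # the last step succeeds under the hindsight goal
```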

Literature:

[1] Röder*, F., Eppe*, M., Nguyen, P. D. H., & Wermter, S. (2020). Curious Hierarchical Actor-Critic Reinforcement Learning. International Conference on Artificial Neural Networks (ICANN). http://arxiv.org/abs/2005.03420

[2] Levy et al. (2019) Learning Multi-Level Hierarchies with Hindsight (http://arxiv.org/abs/1712.00948)

[3] Cartoni, E. et al. (2019). REAL-2019 : Robot open-Ended Autonomous Learning competition. Conference on Neural Information Processing Systems (NeurIPS), 142–152. (http://proceedings.mlr.press/v123/cartoni20a.html, https://www.aicrowd.com/challenges/real-robots-2020)

Code and data:

Contact:

Human-robot dialog systems with deep reinforcement learning

Robots should be able to understand human language [1]. Moreover, they should be able to conduct a natural language dialog with humans. Dialogs are commonly modeled in a framework based on dialog states and dialog acts that is potentially compatible with recent advances in reinforcement learning, specifically with goal-conditioned reinforcement learning [2,3]. The task for this thesis is to identify and evaluate to what extent dialog systems can be realized with different existing goal-conditioned reinforcement learning methods.

We will provide you with Python code, and, depending on your own preferences and previous experience, you will extend the code based on an initial virtual discussion and brainstorming session. The details are subject to negotiation during an initial meeting.

Goals:

- Enhance existing goal-conditioned reinforcement learning methods in order to use them for robotic dialog systems.

- Develop a method to represent dialog states and dialog acts appropriately

- Evaluate the performance of the methods using state-of-the-art benchmark datasets

- Possibly integrate the learning of human-robot dialog with action execution by the robot.

Literature:

[1] Zhao, R. (2020) Deep Reinforcement Learning in Robotics and Dialog Systems https://edoc.ub.uni-muenchen.de/26684/1/Zhao_Rui.pdf

[2] Röder*, F., Eppe*, M., Nguyen, P. D. H., & Wermter, S. (2020). Curious Hierarchical Actor-Critic Reinforcement Learning. International Conference on Artificial Neural Networks (ICANN). http://arxiv.org/abs/2005.03420

[3] Eppe (2019) From Semantics to Execution: Integrating Action Planning With Reinforcement Learning for Robotic Causal Problem-Solving (https://www.frontiersin.org/articles/10.3389/frobt.2019.00123/full)

Code and data:

- https://github.com/knowledgetechnologyuhh/goal_conditioned_RL_baselines

- https://www.repository.cam.ac.uk/handle/1810/294507

- https://research.fb.com/publications/learning-end-to-end-goal-oriented-dialog/

- https://github.com/Maluuba/frames

Contact:

Dr. Burhan Hafez, Dr. Cornelius Weber, Prof. Dr. Stefan Wermter

Gaze Prediction for Robot Control

Attending to certain regions indicates an interest in acting towards them. This behavior is present in many animals that rely heavily on eyesight for survival. Among humans, gaze also shapes our linguistic and behavioral tendencies, which makes understanding it essential to normal social behavior. Moreover, gaze serves a dual function in social scenarios: we perceive others' eye movements and signal our own intention through gaze [1]. Allowing robots to replicate human-like gaze patterns would potentially elevate their social acceptance and lead to a better understanding of attention and human eye movement.

To mimic the human gaze, we must distinguish between the gaze patterns of different individuals. Trajectory-based [2,3] and generative [4] approaches have tackled this issue by learning individual trajectories and generating future frames given single observers, respectively. However, such approaches disregard auditory stimuli, which have proven beneficial in saliency prediction models [5]. Lathuilière et al. [6] introduced an audiovisual reinforcement-learning-based gaze control model, conditioned on maximizing the number of speakers within the robot's view. Your goal is to extend a trajectory-based or generative approach by integrating auditory input into the purely visual approaches. Alternatively, you may treat the problem as one of gaze control. This can be achieved by utilizing audiovisual reinforcement-learning approaches, with the task of mimicking human gaze patterns, using datasets that provide the fixations of multiple observers while watching videos. Finally, you will embody your model in a robot, either in a simulated or physical environment (or both).

Goals:

- Develop or enhance a gaze prediction algorithm with multimodal input

- Conceptualize and develop a soft-realtime gaze control approach for smooth and realistic robotic actuation

- Evaluate the approach on a robot of your choice (e.g., iCub, Pepper, NICO)

Useful skills:

- A good background in neural networks

- Previous experience with Python and one or several deep learning frameworks (e.g., Keras and TensorFlow, PyTorch)

- Experience with middleware (e.g., YARP, ROS) is desirable

- Interest in motor control, human eye movement, and computer vision

References:

[1] Cañigueral, Roser, and Antonia F. de C. Hamilton. "The role of eye gaze during natural social interactions in typical and autistic people." Frontiers in Psychology 10 (2019): 560.

[2] Huang, Yifei, et al. "Predicting gaze in egocentric video by learning task-dependent attention transition." Proceedings of the European Conference on Computer Vision - ECCV. (2018): 754-769.

[3] Xu, Yanyu, et al. "Gaze prediction in dynamic 360 immersive videos." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition - CVPR. (2018): 5333-5342.

[4] Zhang, Mengmi, et al. "Deep future gaze: Gaze anticipation on egocentric videos using adversarial networks." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition - CVPR. (2017): 4372-4381.

[5] Tsiami, Antigoni, Petros Koutras, and Petros Maragos. "STAViS: Spatio-temporal audiovisual saliency network." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition - CVPR. (2020): 4766-4776.

[6] Lathuilière, Stéphane, et al. "Neural network based reinforcement learning for audio–visual gaze control in human–robot interaction." Pattern Recognition Letters 118 (2019): 61-71.

Contact:

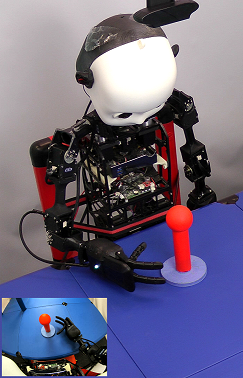

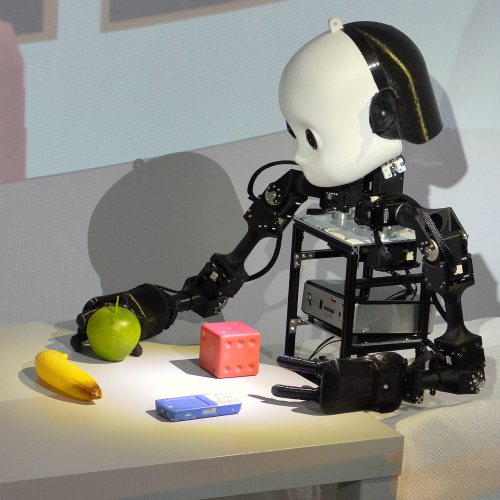

Self-Supervised Learning of Execution Horizon for Real-Time Model-Based Robot Control

Deep reinforcement learning (RL) has shown great success in learning complex robotic tasks from no prior knowledge, utilizing deep neural networks to learn a policy—a mapping from sensory input to motor output that optimizes task performance. One problem when using deep RL for real-world robot control, however, is the inevitable sensorimotor delays that limit how quickly a control sequence can be performed. Such delays are mainly due to the time needed for incorporating feedback into an ongoing motor movement, which is done by closed-loop (feedback) control. This is important to enable the robot to continuously update its policy based on the most recent observations. In contrast, humans can adaptively transition between fast open-loop and slow closed-loop control based on task characteristics, constraints, or the accuracy required. For example, most pointing, reaching and grasping movements have an initial, typically fast, open-loop phase followed by a closed-loop phase at the end of movement where sensory feedback is used to correct planning errors and adjust the movement trajectory. Humans also compensate for the sensorimotor delays in the nervous system by predicting ahead using a learned predictive model of the world. In deep RL, model-based approaches similarly learn a model of the environment's dynamics and use it for planning. One popular approach that performs multi-step lookahead planning with the model is model-predictive control (MPC) [1,2,3]. Despite its effectiveness, it remains unknown how MPC can be used to overcome sensorimotor delays and support human-like adaptive transitions between open-loop and closed-loop control.

Goals:

- Develop a self-supervised learning approach that uses the offset between planned and executed trajectories to adaptively set the execution horizon in MPC and enable sufficiently fast real-time control.

- Evaluate the approach on the NICO robot [4].
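
One way to picture the first goal: after a plan has been executed open-loop, the planned and the executed trajectories can be compared step by step, and the number of steps that stayed close to the plan could serve as a self-supervised target for a horizon predictor. The sketch below illustrates only this labelling idea. The function names, the Euclidean offset, and the fixed tolerance are hypothetical choices made for illustration, not a prescribed method.

```python
import math


def trajectory_offsets(planned, executed):
    """Euclidean distance between planned and executed state at each step."""
    return [math.dist(p, e) for p, e in zip(planned, executed)]


def horizon_label(planned, executed, tolerance):
    """Count the leading steps whose offset stays within the tolerance.

    The result is clipped to at least 1, so that the controller always
    executes one action before it replans (assumption of this sketch).
    """
    steps = 0
    for offset in trajectory_offsets(planned, executed):
        if offset > tolerance:
            break
        steps += 1
    return max(1, steps)


# Toy 2D end-effector positions: the executed path drifts away from the plan.
planned = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
executed = [(0.0, 0.0), (1.0, 0.05), (2.0, 0.3), (3.0, 0.8)]

label = horizon_label(planned, executed, tolerance=0.1)
print(label)  # number of steps that could have been run open-loop
```

Labels of this kind could be collected during normal operation and used to train a network that predicts a suitable execution horizon from the current observation. How the tolerance is chosen for a given task is left open here.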

Useful skills:

- Good knowledge of deep neural networks.

- Experience in Python programming and at least one deep learning framework (e.g., Keras, TensorFlow, PyTorch).

- Familiarity with deep reinforcement learning.

- Interest in robotics and human motor control.

References:

[1] F. Ebert, C. Finn, S. Dasari, A. Xie, A. X. Lee, and S. Levine. Visual foresight: Model-based deep reinforcement learning for vision-based robotic control. CoRR, abs/1812.00568, 2018. [PDF]

[2] M. Henaff, W.F. Whitney, and Y. LeCun. Model-based planning with discrete and continuous actions. arXiv preprint arXiv:1705.07177, 2017. [PDF]

[3] M.B. Hafez, C. Weber, M. Kerzel, and S. Wermter. Curious meta-controller: Adaptive alternation between model-based and model-free control in deep reinforcement learning. Proceedings of the International Joint Conference on Neural Networks (IJCNN), pages 1–8, 2019. [PDF]

[4] M. Kerzel, E. Strahl, S. Magg, N. Navarro-Guerrero, S. Heinrich, and S. Wermter. NICO – Neuro-Inspired COmpanion: A developmental humanoid robot platform for multimodal interaction. Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), pages 113–120, 2017. [PDF]

Contact:

Dialogue Generation with Visual Reference

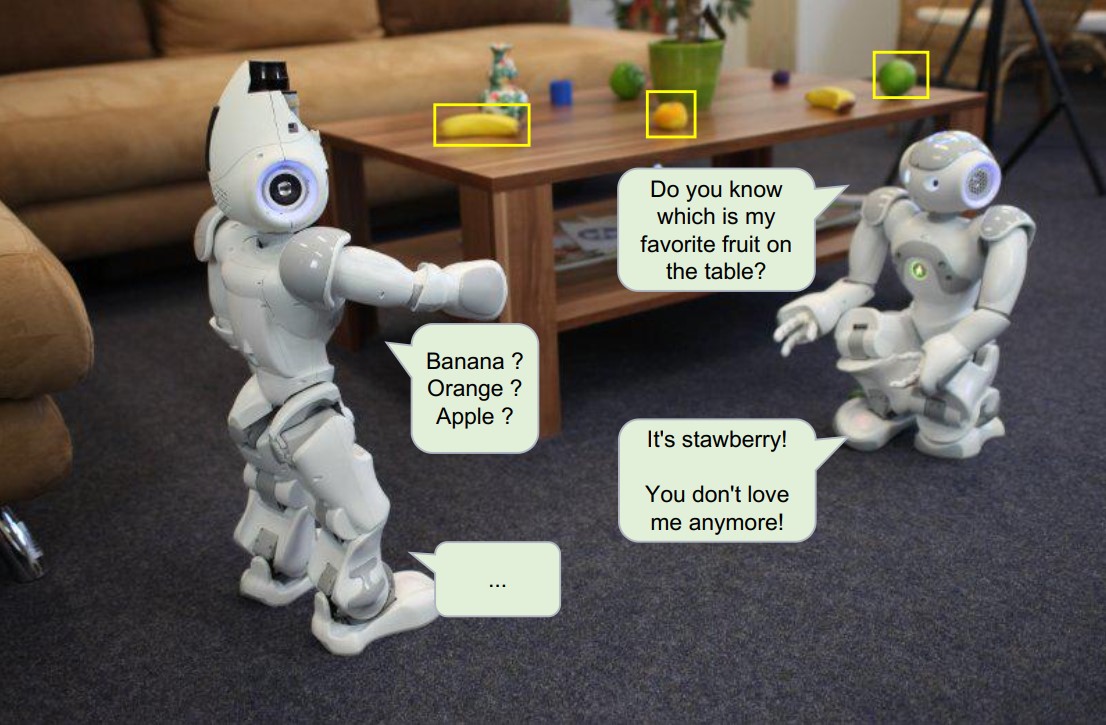

The power of computational natural language processing can only be unleashed when meaningful dialogues can be generated. The aim of this project is to implement a minimal model of dialogue about a visual scene. Using the example of a "GuessWhich" game, we will implement a dialogue about several objects in a visual scene: an "OracleBot" chooses one designated object, and a "LearnerBot" needs to guess which object was chosen. It can only infer this via a questioning strategy, such as asking about the color, size, or shape of the object that the OracleBot has in mind. The LearnerBot model uses a mixed strategy of reinforcement learning (to learn the question strategy) and supervised learning (for the answer). The model is based on a model of sequential visual processing that delivers an answer from accumulated visual impressions [1], but we will transfer it to the visual-linguistic domain [2].

Goals:

- The development of a neural network model for the LearnerBot in the GuessWhich game.

Useful skills:

- Programming experience with Python

- Familiarity with deep learning and recurrent neural networks

- Interest in natural language processing

- Experience with image processing or visual simulation environments

References:

[1] V. Mnih, N. Heess, A. Graves, K. Kavukcuoglu (NeurIPS 2014), Recurrent Models of Visual Attention

[2] End-to-end Optimization of Goal-driven and Visually Grounded Dialogue Systems

Contact:

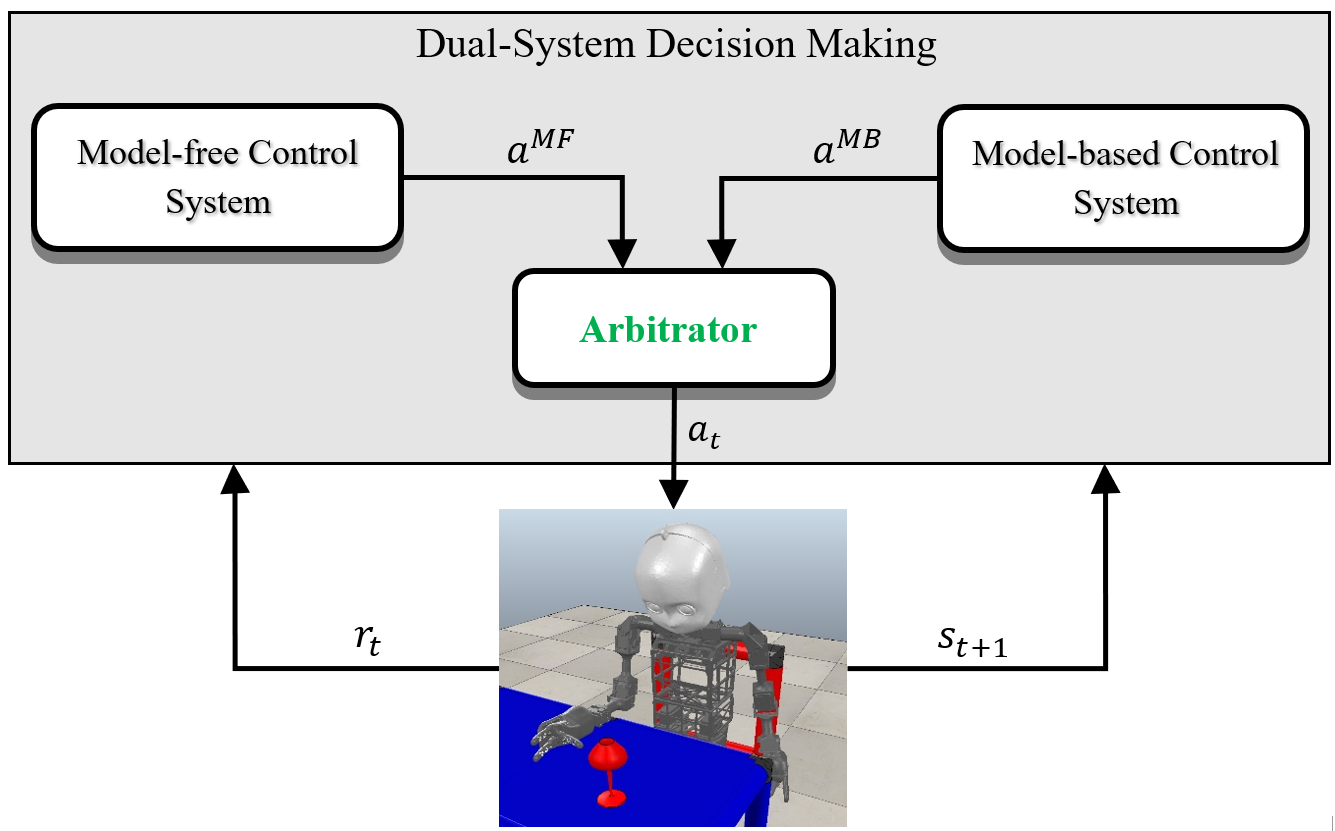

Dual-System Decision-Making for Efficient Robot Learning

Cognitive and behavioral studies have presented different hypotheses on how model-free (habitual) and model-based (goal-directed) systems control human decision-making, providing neural and behavioral evidence [1,2]. However, it remains unclear how and when managing the tradeoff between the two systems can improve robot skill learning. This thesis aims to develop a learning approach that uses reward prediction error and state prediction error generated by model-free and model-based learning systems, respectively, to arbitrate between the two systems during action selection and efficiently learn a robotic grasping policy.

Goals:

- Develop a meta-control learning approach that arbitrates between model-free and model-based control to improve exploration.

- Evaluate the approach on the NICO robot [3] and compare it to dual-system approaches that use prediction-error signals from only the model-free system (e.g., [4]) or the model-based system (e.g., [5]).
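
To make the arbitration idea more concrete, the following minimal sketch tracks a running average of the reward prediction error (from the model-free system) and the state prediction error (from the model-based system) and hands control to the system whose recent errors are smaller. This is one simple possibility under stated assumptions, not the approach the thesis is expected to arrive at. The class name, the exponential moving average, and the direct comparison of the two error signals are hypothetical; in practice the two errors have different units and would need normalisation.

```python
class Arbitrator:
    """Selects a control system from running prediction-error averages."""

    def __init__(self, rate=0.1):
        self.rate = rate      # smoothing factor of the moving averages
        self.rpe_avg = 0.0    # running |reward prediction error| (model-free)
        self.spe_avg = 0.0    # running |state prediction error| (model-based)

    def update(self, rpe, spe):
        """Move both averages towards the magnitude of the newest errors."""
        self.rpe_avg += self.rate * (abs(rpe) - self.rpe_avg)
        self.spe_avg += self.rate * (abs(spe) - self.spe_avg)

    def select(self):
        """Return the system whose recent predictions were more accurate."""
        if self.spe_avg < self.rpe_avg:
            return "model-based"
        return "model-free"


arbitrator = Arbitrator(rate=0.5)

arbitrator.update(rpe=1.0, spe=0.2)   # the learned world model predicts well
first_choice = arbitrator.select()

arbitrator.update(rpe=0.0, spe=2.0)   # the world model is suddenly wrong
second_choice = arbitrator.select()

print(first_choice, second_choice)
```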

Useful skills:

- Good knowledge of deep neural networks.

- Experience in Python programming and at least one deep learning framework (e.g., Keras, TensorFlow, PyTorch).

- Familiarity with deep reinforcement learning.

- Interest in robotics and human motor control.

References:

[1] M. Keramati, P. Smittenaar, R. J. Dolan, and P. Dayan. Adaptive integration of habits into depth-limited planning defines a habitual-goal–directed spectrum. Proceedings of the National Academy of Sciences, 113(45):12868–12873, 2016. [PDF]

[2] S. W. Lee, S. Shimojo, and J. P. O'Doherty. Neural computations underlying arbitration between model-based and model-free learning. Neuron, 81(3):687–699, 2014. [PDF]

[3] M. Kerzel, E. Strahl, S. Magg, N. Navarro-Guerrero, S. Heinrich, and S. Wermter. NICO – Neuro-Inspired COmpanion: A developmental humanoid robot platform for multimodal interaction. Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), pages 113–120, 2017. [PDF]

[4] F. S. Fard and T. P. Trappenberg. Mixing habits and planning for multi-step target reaching using arbitrated predictive actor-critic. Proceedings of the International Joint Conference on Neural Networks (IJCNN), pages 1–8, 2018. [PDF]

[5] M. B. Hafez, C. Weber, M. Kerzel, and S. Wermter. Improving Robot Dual-System Motor Learning with Intrinsically Motivated Meta-Control and Latent-Space Experience Imagination. Robotics and Autonomous Systems, 133 (2020): 103630. [PDF]

Contact:

Neural network learning with a laser robot head

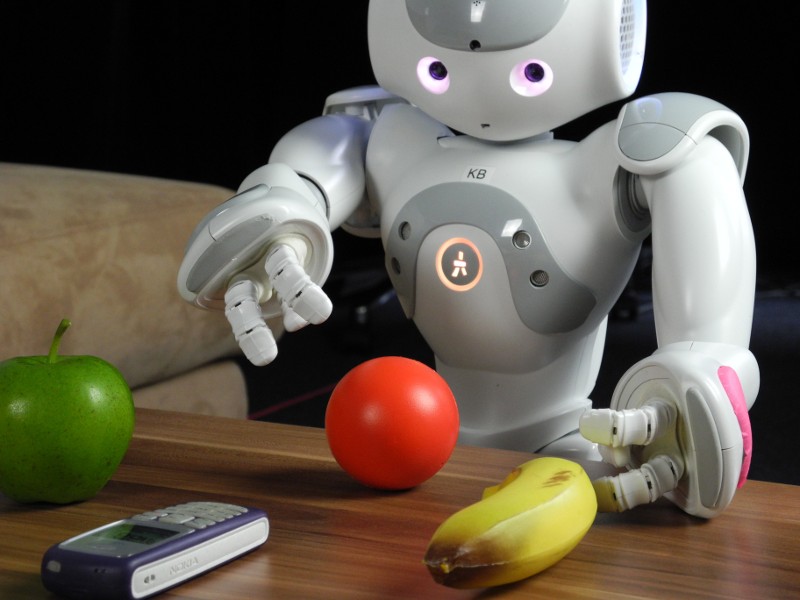

Covering an indoor environment up to a range of 4 meters, the laser head of NAO provides a compact and accurate method to obtain a 2D obstacle map for humanoid robot navigation. Moreover, the laser data is integrated in NaoQi and can be easily retrieved by simple commands. This kind of laser sensor was initially used in applications such as stair-climbing and obstacle-avoidance robots. In this project, we propose to use the laser sensor for moving object prediction in an ambient environment. While there is previous research [1] on dynamic collision avoidance and moving object tracking, we propose to realize these two aspects in a humanoid robot with neural network techniques.

Goals:

- Construct a 2D map with laser data in a real indoor environment.

- For sub-task (i): Identify and track moving objects in the map, e.g. with a recurrent neural network.

- For sub-task (ii): Build a collision avoidance model with neural network technologies.

Useful skills:

- Programming experience, preferably Python, C/C++.

- Keen interest in artificial intelligence and bio-inspired methods.

- Collaboration with a technician for the laser head is possible.

Contact:

Prof. Dr. Stefan Wermter, Dr. Cornelius Weber, Junpei Zhong

Scene Dependent Speech Recognition

In the context of assistive robots, verbal user instructions to a robot often refer to objects that are visible in the immediate surroundings. Robot speech recognition could therefore benefit from visual context by biasing recognition towards words that denote seen objects. The task is to create such an audio-visual model and a methodology to train it.

Goals:

- Develop a novel speech recognition architecture with additional visual input

- Find a dataset and develop a training methodology

- Evaluate the trained model
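
The biasing idea can be illustrated without any neural network: given a list of candidate transcriptions with scores and a set of object labels from a detector, hypotheses that mention visible objects receive a bonus. The sketch below is only meant to show the effect of visual context on the final choice. The function name, the score format, and the additive bonus are assumptions made for illustration; the thesis itself targets an architecture that integrates the visual input directly.

```python
def rescore(hypotheses, visible_objects, bonus=1.0):
    """Pick the best hypothesis after rewarding mentions of visible objects.

    hypotheses: list of (text, log_score) pairs from a speech recogniser.
    visible_objects: object labels from a vision system (hypothetical input).
    """
    visible = {label.lower() for label in visible_objects}
    rescored = []
    for text, log_score in hypotheses:
        matches = sum(1 for word in text.lower().split() if word in visible)
        rescored.append((text, log_score + bonus * matches))
    return max(rescored, key=lambda item: item[1])


# The acoustically preferred hypothesis is "cap", but only a cup is visible.
hypotheses = [("give me the cap", -4.0), ("give me the cup", -4.5)]

best = rescore(hypotheses, visible_objects=["cup", "plate"])
print(best)
```

Without visual context (an empty object list), the same call returns the acoustically preferred hypothesis unchanged.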

Useful skills:

- Programming experience with Python

- Familiarity with deep learning

- Interest in speech recognition and vision processing

References:

[1] https://pjreddie.com/darknet/yolo/

[2] https://missinglink.ai/guides/tensorflow/tensorflow-speech-recognition-two-quick-tutorials/

Contact:

Speech Enhancement

This is follow-up work to our Interspeech 2020 paper [1] on target speech separation. We can explore the effectiveness of face and voice information for de-noising (assuming additive noise sources) and de-reverberation (when we have multiplicative noise).

Goals:

- Develop a model which separates a target speaker's voice from others using face information of that speaker

Useful skills:

- Programming experience with Python

- Familiarity with deep learning

- Interest in speech recognition

References:

[1] Multimodal Target Speech Separation with Voice and Face References

https://arxiv.org/abs/2005.08335

Contact:

Target Speaker Speech Synthesis

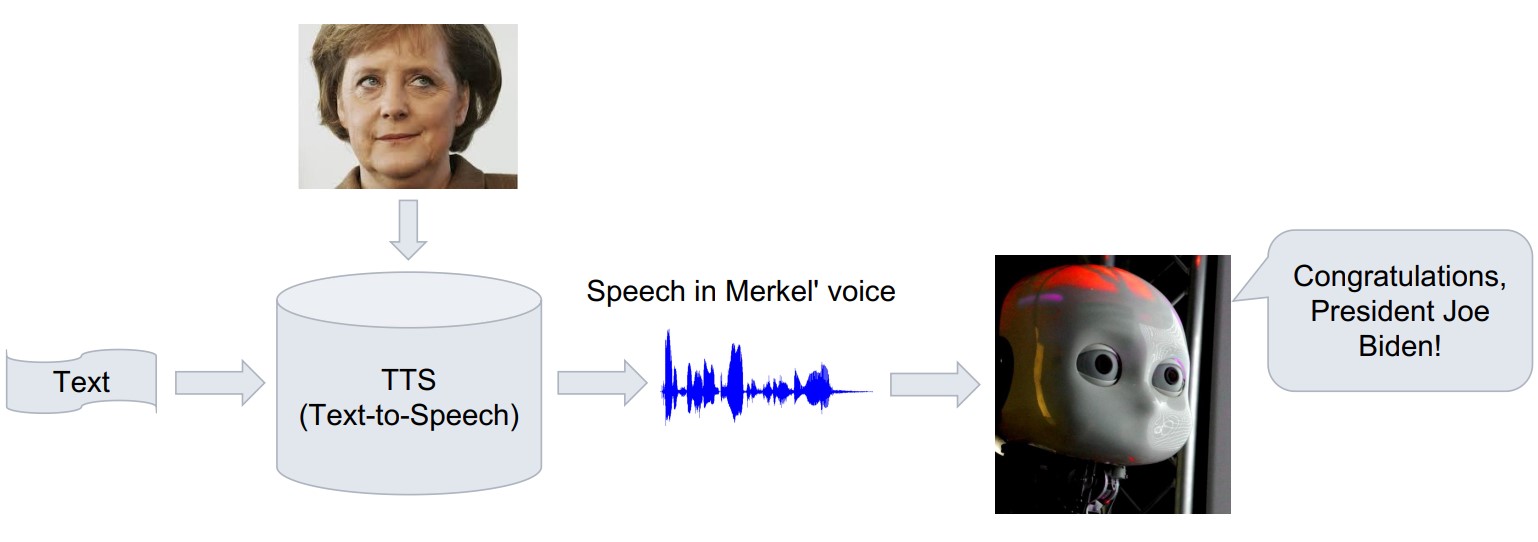

Conventional TTS (text-to-speech) models are trained on one female or male voice and can only generate one speaker voice. One method to synthesize multispeaker voices is adding a target speaker's voice embedding (the one we want) during decoding (Figure 1 in [2]).

Since there is a strong relationship between face and voice [1], we can use a face embedding as an alternative 'speaker embedding' input in the figure above. Our initial results for this idea [3] show that we can synthesize a person's voice from their face image to a certain degree. The architecture is modular, allowing components to be replaced by newer pretrained deep neural network components. On this basis, we want to generate voices that match the faces even better, and possibly use emotions from the face image to generate emotions in the voice.

Goals:

- Exploit the modularity of the TTS architecture [3] to improve and extend its capabilities.

Useful skills:

- Programming experience with Python

- Familiarity with deep learning

- Interest in speech generation

References:

[1] Multimodal Target Speech Separation with Voice and Face References

https://www2.informatik.uni-hamburg.de/wtm/publications/2020/QWW20/INTERSPEECH_2020_wtm_web.pdf

[2] Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis

https://arxiv.org/pdf/1806.04558.pdf

[3] Hearing Faces: Target Speaker Text-To-Speech Synthesis From a Face. ASRU Dec. 2021 (accepted) (please contact us for the paper)

Contact:

Lip Reading by Social Robots to Improve Speech Recognition in Noisy Environments

In social interactions, humans can easily focus their attention on a single conversation by filtering the noise coming from the background and other simultaneous talking, usually, by processing visual stimuli to aid the auditory stimuli corrupted by such noise sources (Czyzewski et al., 2017). This selective attention is called the "cocktail party effect", and it is a desirable attribute for social robots designed to guide people in museums and social events. In this thesis, the cocktail party problem will be addressed by incorporating lip movements as a complementary input to an automatic speech recognition (ASR) system in order to improve real-time speech recognition performance in noisy environments. Moreover, a selective attention mechanism using visual and auditory stimuli will be designed to keep the robot's attention towards a given person during human-robot interactions (HRIs). Different audio-visual datasets for speech recognition will be used such as GRID (Cooke et al., 2006) and MV-LRS (Chung et al., 2016) so as to assess the system in controlled and uncontrolled scenarios. The GRID dataset is composed of short commands (e.g. "put red at G9 now") recorded in a well controlled indoor scenario, whereas the MV-LRS dataset consists of long and unconstrained sequences of recordings in studios and outdoor interviews from BBC television.

Goals:

- Development of an ASR system that uses visual and auditory inputs for real-time speech recognition in noisy environments

- Improving human-robot interaction quality regarding the cocktail party effect

- For a Master Thesis: Development of a selective attention mechanism to keep the robot's attention directed at a speaker

Useful skills:

- An interest in computer vision, linguistics, bio-inspired approaches and HRI

- Experience in deep learning and robotic experimentation

- Programming skills in Python and deep learning frameworks (e.g. Keras, TensorFlow, and PyTorch)

References:

Chung, J. S., Senior, A., Vinyals, O., & Zisserman, A. (2017). Lip Reading Sentences in the Wild. IEEE Conference on Computer Vision and Pattern Recognition.

Cooke, M., Barker, J., Cunningham, S., & Shao, X. (2006). An audio-visual corpus for speech perception and automatic speech recognition. The Journal of the Acoustical Society of America, 120(5), 2421-2424.

Czyzewski, A., Kostek, B., Bratoszewski, P., Kotus, J., & Szykulski, M. (2017). An audio-visual corpus for multimodal automatic speech recognition. Journal of Intelligent Information Systems, 49(2), 167-192.

Contact:

Henrique Siqueira, Leyuan Qu, Dr. Cornelius Weber, Prof. Dr. Stefan Wermter

Curious robots with model-based reinforcement learning

Forward models are essential for human thinking and problem-solving in several ways. Forward models are, essentially, functions that map an action and an observation to a successor observation. For example, if an apple is lying on a table and you move your hand to push the apple strongly, then your internal forward model predicts that the apple will fall from the table. This is a useful basis for human curiosity: humans have the desire to minimize the prediction error of their internal forward model. For example, if they are not sure about the outcome of an action, they will perform the action to observe the result and update their causal forward model.

The thesis goal is to implement such a curiosity mechanism based on the free-energy principle [1,2] and reinforcement learning. You will work with a simulated 3D robotic environment to realize your experiments, in close collaboration with our current research within the IDEAS project [3,4]. To evaluate your approach, you will investigate to what extent the curiosity mechanism can be combined with a reinforcement-learning approach to perform robotic tool-use tasks.

The video shows our preliminary experiments on this subject: The black curve shows the "surprise" value, i.e., the prediction error of the robot's forward model. Note how the surprise peaks when the robotic arm hits an object.
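
The notion of surprise as forward-model prediction error can be sketched with a very small example. Below, a linear forward model predicts the next observation from the current observation and action, the squared prediction error is taken as the surprise value, and the model is updated after every transition. Everything in this sketch is a simplifying assumption for illustration: the one-dimensional toy environment, the linear model, and the plain gradient step are hypothetical and unrelated to the free-energy formulation or the simulation used in the project.

```python
class LinearForwardModel:
    """Predicts the next observation as w_obs * obs + w_act * action."""

    def __init__(self, lr=0.1):
        self.w_obs = 0.0
        self.w_act = 0.0
        self.lr = lr

    def predict(self, obs, action):
        return self.w_obs * obs + self.w_act * action

    def update(self, obs, action, next_obs):
        """One gradient step; returns the surprise measured before the step."""
        error = next_obs - self.predict(obs, action)
        self.w_obs += self.lr * error * obs
        self.w_act += self.lr * error * action
        return error ** 2


def toy_environment(obs, action):
    """Hypothetical deterministic dynamics that the model has to discover."""
    return 0.5 * obs + action


model = LinearForwardModel(lr=0.1)
transitions = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (-1.0, 1.0)]

surprises = []
for _ in range(200):
    for obs, action in transitions:
        next_obs = toy_environment(obs, action)
        surprises.append(model.update(obs, action, next_obs))

print(surprises[0], surprises[-1])  # surprise shrinks as the model improves
```

A curious agent could use the surprise value as an intrinsic reward, so that it is drawn towards transitions it cannot yet predict and loses interest once they are learned.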

Goals:

- Realization of a 3D robotic sandbox environment

- Implementation of the curiosity mechanism

- Evaluation and investigation of the hypothesis that the approach improves the learning performance of a problem-solving robotic agent.

Useful skills:

- Extensive programming skills in Python and deep learning frameworks (Keras, TensorFlow, PyTorch)

- Experience in 3D environments

- Experience in robotic experimentation

References:

[1] Friston, K. J., Daunizeau, J., Kilner, J., & Kiebel, S. J. (2010). Action and behavior: A free-energy formulation. Biological Cybernetics, 102(3), 227–260.

[2] Butz, M. V., Bilkey, D., Humaidan, D., Knott, A., & Otte, S. (2019). Learning, planning, and control in a monolithic neural event inference architecture. Neural Networks, 117, 135–144. https://doi.org/10.1016/j.neunet.2019.05.001

[3] Eppe, M., Magg, S., & Wermter, S. (2019). Curriculum goal masking for continuous deep reinforcement learning. International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob), 183–188.

[4] Eppe, M., Nguyen, P. D. H., & Wermter, S. (2019). From semantics to execution: Integrating action planning with reinforcement learning for robotic tool use. Retrieved from http://arxiv.org/abs/1905.09683

Contact:

Explainable Neural State Machine with Spatio-Temporal Relational Reasoning

“Explainable Agent” refers to autonomous agents that can explain their actions and the reasons leading to their decisions. This helps users understand the agents' capabilities and limits, thereby improving trust and safety and avoiding failures. The Neural State Machine (NSM) is an explainable agent that bridges the gap between neural and symbolic AI by integrating their complementary strengths for the task of visual reasoning [1]. Given an image, the NSM first predicts a probabilistic semantic graph that represents the underlying semantics and serves as a structured world model. The structured world model is then used to perform sequential reasoning over the graph. The NSM provides spatial relational reasoning through a probabilistic scene graph. However, the Graph R-CNN used in the NSM is a black-box model. Therefore, users may not be able to understand the reasons and causes for the NSM outputs. In addition, the NSM is not able to link meaningful transformations of objects or entities of the semantic graph over time. The objective of this project is, therefore, to develop an explainable NSM with spatio-temporal reasoning.

spatial relational reasoning [1]

temporal relational reasoning (http://relation.csail.mit.edu/)

Goals:

- To improve the effectiveness of explanations by improving model interpretability of the NSM [2].

- To enhance the NSM with Temporal Relational Reasoning capability.

Useful skills:

- Programming experience, preferably Python and frameworks such as PyTorch, TensorFlow, and Keras.

- Knowledge of deep neural networks.

References:

[1] Hudson, D. A. & Manning, C. D. (2019). Learning by Abstraction: The Neural State Machine. CoRR, abs/1907.03950.

[2] Stefan Wermter (2000). Knowledge Extraction from Transducer Neural Networks. Journal of Applied Intelligence, Vol. 12, pp. 27-42. https://www2.informatik.uni-hamburg.de/wtm/publications/2000/Wer00b/wermter.pdf

Contact:

Prof. Dr. Loo Chu Kiong, Dr. Cornelius Weber, Dr. Matthias Kerzel, Prof. Dr. Stefan Wermter

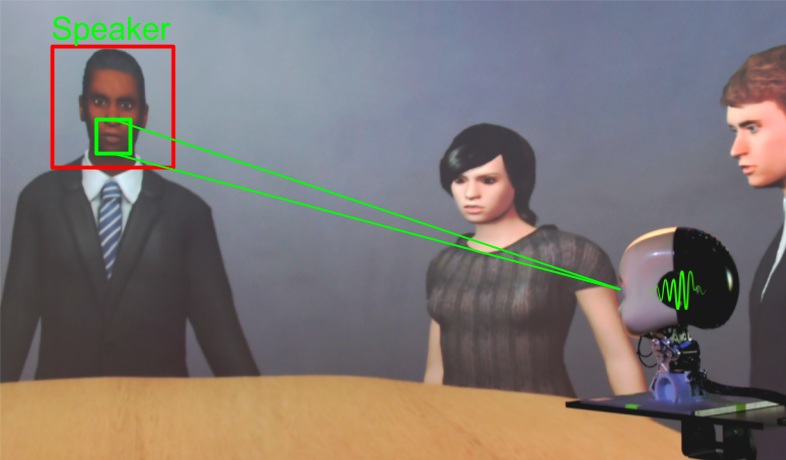

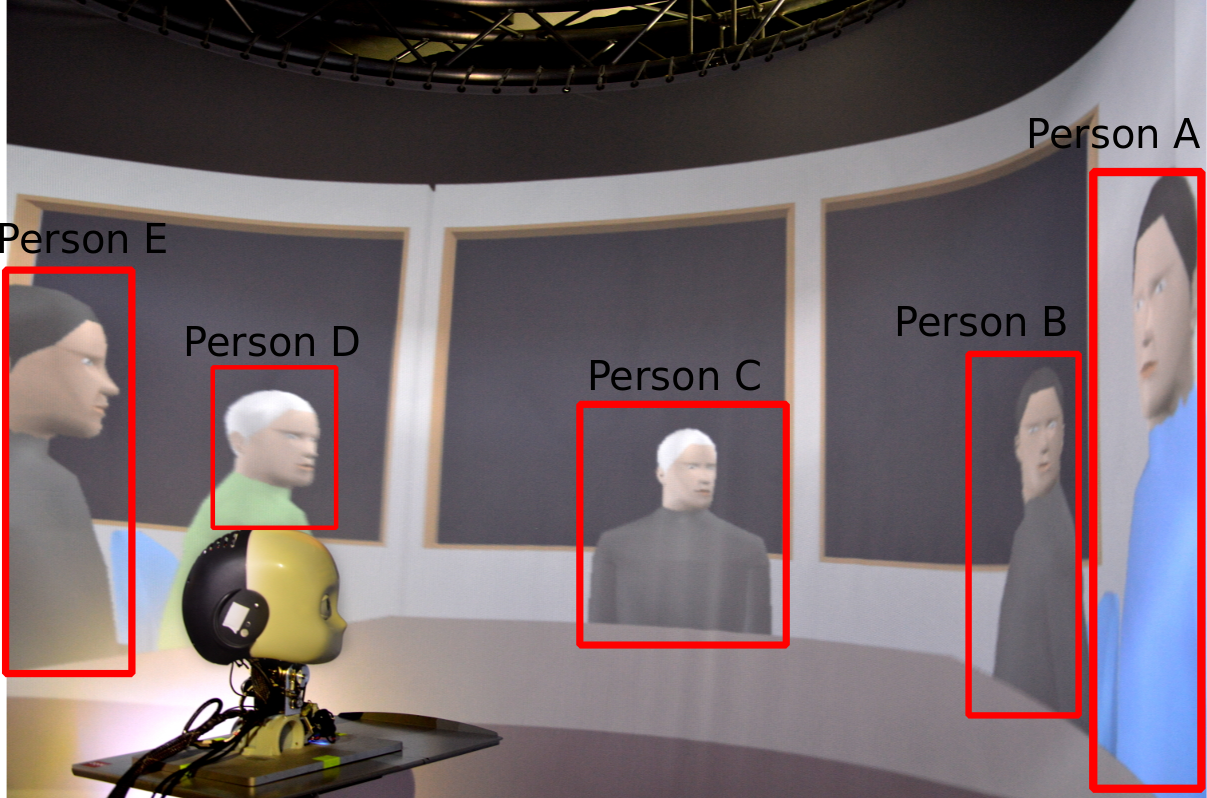

Person Recognition Using Multisensory Information for social Human-robot Interaction in the wild

This thesis aims to develop a system for person-in-discussion recognition in a natural multi-agent conversation scenario. Person recognition is a very important skill for social human-robot interaction, and still a very difficult task. Convolutional neural networks have recently been used for person identification in the wild [1,2] but have never been evaluated in a multi-agent environment. In our scenario, various persons will be present, and the robot should learn, in an online fashion, to distinguish between them.

Recently, Parisi, Barros et al. [3] proposed an architecture to facilitate this scenario, though its focus is on a controlled environment where sensory information from human agents is generated within a lab, i.e., avatar movements, artificial sound from speakers, etc. One possible direction of the thesis is extending the available architecture and validating its performance in the wild.

Goals:

- Develop a person-in-discussion recognition system based on multisensory information, using deep learning methods.

- Evaluate the use of different sensory characteristics, such as the face, body posture, gestures and sound sources for personal identification.

- Deploy a robot control method for natural social human-robot interaction.

Useful skills:

- Programming experience, preferably Python and/or C++.

- Knowledge of deep neural networks (DNN) and DNN frameworks like TensorFlow and PyTorch.

- Experience with robot middleware: ROS, YARP

References:

[1] Wu, Lin, Chunhua Shen, and Anton van den Hengel. “PersonNet: Person Re-identification with Deep Convolutional Neural Networks.” arXiv preprint arXiv:1601.07255 (2016).

[2] Li, Wei, et al. “Deepreid: Deep filter pairing neural network for person re-identification.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2014.

[3] German I. Parisi, Pablo Barros, Di Fu, Sven Magg, Haiyan Wu, Xun Liu, Stefan Wermter. “A neurorobotic experiment for crossmodal conflict resolution in complex environment.” IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). 2018.

Contact:

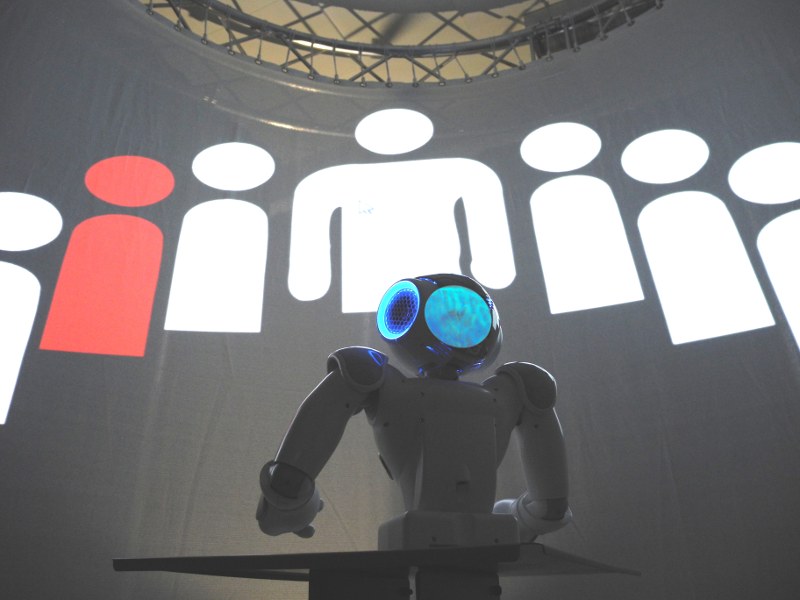

Realistic Turn-Taking in Virtual Multi-Agent Conversation

In humans and animals, responses to events in the environment depend critically on visual and auditory input integration. Our neuro-computational models of multimodal integration are embedded in robotic hardware and trained in controllable virtual reality robotic environments. The goal of this thesis is to develop a virtual environment that realizes a multi-agent conversation with realistic turn-taking behaviour. The agents should be able to utilize both auditory cues (speaking up, interrupting each other) and visual cues (motion, gestures, head orientation) to signal turn-taking. This task encompasses the design of individual virtual agents capable of expressing verbal and visual turn-taking signals in the virtual robot experimentation platform V-REP and the development of a planning system that schedules turn-taking for all agents to create a collaborative or conference-like scenario. This thesis offer is part of the research project “Vision- and action-embodied language learning”.

Goals:

- Realization of a virtual reality multi-agent conversation scenario

- Development of a framework for realistic conversational turn-taking behaviour of virtual agents using visual and auditory cues.

Useful skills:

- Interest in linguistics, especially in the topic of turn-taking.

- Experience with the virtual robot experimentation platform V-REP.

- Good programming skills in Python.

Contact:

Explaining Crossmodal Information Integration

Relying on crossmodal information processing in solving tasks often yields better performance than relying on unimodal input information [1]. Problematically, deep neural network models can, for the most part, be regarded as black-box models, which hinders adoption of and trust in the technology. Recently, various techniques have been developed to increase the transparency of such models [2]. The task is to explore those methods and find a suitable one to explain crossmodal information processing.

Goals:

- Develop an explainable model that can account for relevant features of each modality that are necessary to solve the task

Useful skills:

- Knowledge of Neural Networks / Deep Learning

- Programming experience

- Ideally experience with PyTorch

References:

[1] Arevalo et al., 2020, Gated multimodal networks, Neur. Comp. and Appl. 32. (https://doi.org/10.1007/s00521-019-04559-1)

[2] Das & Rad, 2020, Opportunities and Challenges in Explainable Artificial Intelligence (XAI): A Survey. (https://arxiv.org/abs/2006.11371)

Any further questions? Please contact tomweber"AT"informatik.uni-hamburg.de via E-Mail.

Contact:

Towards NICO Walking

Our humanoid robot NICO, originally derived from a soccer robot, lost its walking ability, since the classical control algorithm cannot handle the added weight of its hardware extensions on head and arms. Later, a bio-inspired control algorithm based on a central pattern generator produced walking, albeit slow, of NICO in the CoppeliaSim simulator [1].

Goals:

- Generate natural walking of NICO. This should be feasible due to (i) the new, powerful TD-MPC reinforcement learning algorithm, which has been shown to control a humanoid robot [2], and (ii) the new, fast MuJoCo physics engine in CoppeliaSim, which allows more efficient training.

Useful skills:

- Interest in reinforcement learning; prior experience is a plus

- Ability to adjust settings in CoppeliaSim to counter unwanted effects, such as slippage

- Understanding of physical behaviour, in order to adjust the cost/reward function so that it leads to natural walking

References:

[1] Hierarchical Control for Bipedal Locomotion using Central Pattern Generators and Neural Networks

https://www2.informatik.uni-hamburg.de/wtm/publications/2019/AMW19/auddy_icdl_2019.pdf

[2] Temporal Difference Learning for Model Predictive Control

https://nicklashansen.github.io/td-mpc/

Contact:

Exploring objects like a child with the NICO robot

Children learn to know their environment, and also to think in and express natural language, through direct interaction with objects and other unfamiliar things in the environment. While doing this, they make use of effective strategies, from clumsily touching objects to exploring their affordances by manipulating them.

In this project, we want to develop these strategies for our NICO robot, both as a 3D model in the virtual environment V-REP and on our real robot in the lab. Furthermore, we want to measure the perceived effect on the robot and on the objects and let the robot learn about object features over a longer time of interaction. As a long-term goal, we plan to employ the result of the project in our research on embodied language acquisition through automated linguistic instructions during robot-object interaction. For this, several students could collaborate, e.g. working on visually tracking the manipulated objects and forming representations for perceived interactions in GWR and MTRNN architectures.

Goals:

- Study motor babbling in infants and develop motion strategies for NICO,

- Develop an interface for the motion characteristics,

- For a Master Thesis: Learn effective interaction with different objects in a neural architecture, e.g. recurrent and self-organising.

Useful skills:

- Interest in robotics, motion, haptics, and learning; affinity for child development and cognitive psychology,

- Programming skills in Python,

- Experience in V-REP or other simulators, and with the NICO robot.

Contact:

Dr. Matthias Kerzel, Dr. Cornelius Weber, Prof. Dr. Stefan Wermter

Extending NICO's Reach

Our NICO humanoid robot, when seated at a table, can only reach a small area in front of it, such as to grasp an object, because of its short arms. In order to extend its reaching range, in this project we plan to involve movements of its thighs, letting NICO tilt its upper body forward, thereby expanding its reaching horizon. Since the head with camera will move together with the torso, visuo-motor coordination will become more challenging compared to the normal case where the camera is kept in a fixed position.

Useful skills:

- Interest in reinforcement learning (RL); prior experience is a plus. We will aim for a sample-efficient RL algorithm (e.g. [1]), possibly aided by self-supervised or unsupervised representation learning.

- Interest in 3D robot simulators (e.g. [2]), to model the NICO scenario.

References:

[1] Temporal Difference Learning for Model Predictive Control

https://nicklashansen.github.io/td-mpc/

[2] CoppeliaSim

https://www.coppeliarobotics.com/

Contact:

Neural Grasping NICO robot

To control the arm and position of the hand, a neural network controller has been successfully trained in simulation that can calculate the joint angles for a given set of coordinates and vice versa. The next steps would now be the extension of the neural model to include the rotation of the hand and to test the controller, which was trained in simulation, on the real robot.

Goals:

- Extend the neural controller to utilize all available joints of the arm, including rotation of the hand

- Successfully train the extended model in the simulator

- Test successfully trained neural networks on the real robot

- Analyse and evaluate controllers in terms of training performance and applicability

Useful skills:

- Interest in robots and neural networks.

- Programming experience, preferably Python or C++.

- Experience with 3D simulation environments and robots helpful but not necessary.

The topic is suitable to be a thesis at bachelor or master level.

Contact:

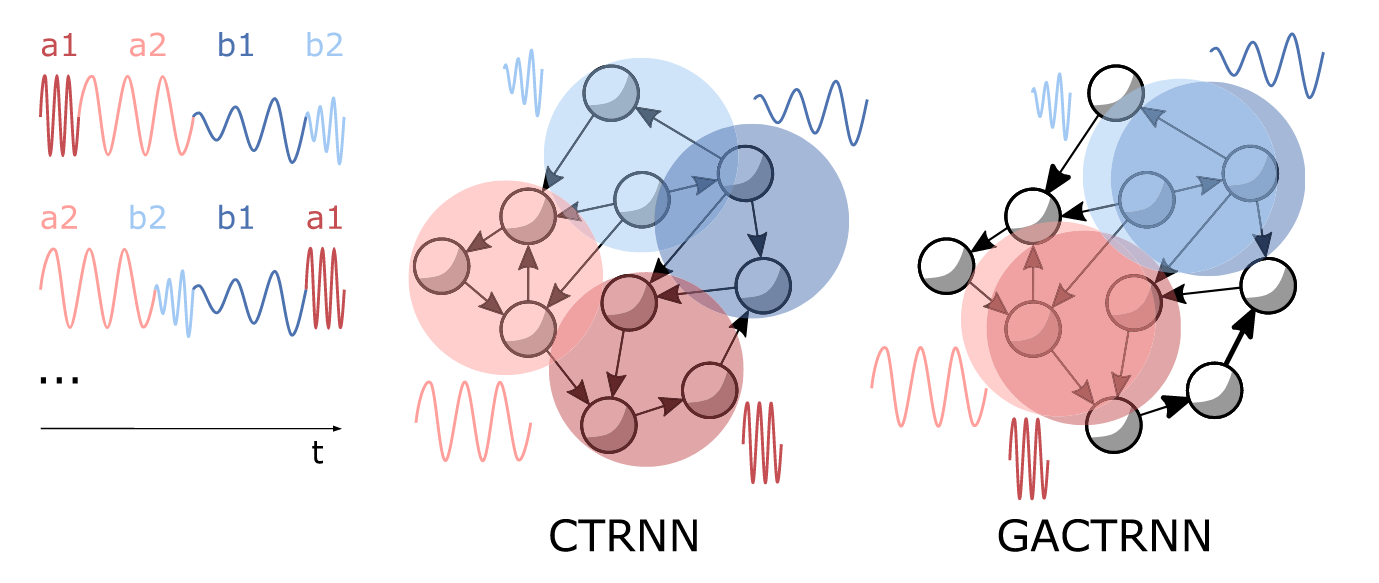

Probabilistic processing of sequences with multiple timescales

The human brain can deal incredibly well with sequential information on different timescales. For example, we can understand speech quite well despite huge temporal differences at various frequencies between individual speakers, and we can act precisely in a highly dynamic world, such as when playing table tennis. How, then, can we realise sequential information processing on various timescales in future artificial intelligence systems?

State-of-the-art AI approaches like deep convolutional neural networks and transformer networks surpass human capabilities by far in some aspects like visual object recognition and language processing, but only because they can be trained to capture subtle statistical details from vast data corpora. Yet they cannot capture the stochastic characteristics that are omnipresent in all natural data.

For capturing stochastic details, Bayesian learning was suggested and studied for the brain. Transferring this to solve real-world tasks with AI systems is unfortunately not feasible because the optimal Bayesian model for a task is computationally intractable. But perhaps we can approximate the Bayesian process! Thus, in this thesis project, we want to look into approximate-Bayesian training or training with variational inference to capture stochasticity in processing sequences with multiple timescales. Training with Dropout or Zoneout appears to behave in an approximately Bayesian way, as both mimic a certain random process by which the training data is changed. Variational inference models a specific random generator that changes the data and yet must be learned from a discriminator. As these approaches seem to work well in general, we could perhaps test them specifically on the timescale characteristics of our tasks!

Goals:

- Study the recent literature for different ideas on how probabilistic characteristics of sequences can be modelled, e.g. in approximate-Bayesian training and training with variational inference.

- Modify and apply one recent computational model (e.g. from [1]) to a few different sequence learning tasks with real-world data (music, speech, human hand drawings, robot actions, etc.) and analyse how the stochastic component contributes to solving such tasks.
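
As a small illustration of the dropout idea mentioned above, the sketch below keeps dropout active at prediction time and averages many stochastic forward passes, a procedure commonly called Monte Carlo dropout. The spread of the sampled outputs then gives a rough uncertainty estimate. This is a toy under stated assumptions: the single linear unit, the function names, and the sample count are hypothetical, and the models considered in the thesis (e.g. from [1]) are recurrent and considerably more involved.

```python
import random
import statistics


def dropout_forward(weights, inputs, drop_prob, rng):
    """One stochastic pass of a linear unit with inverted dropout on inputs."""
    keep = 1.0 - drop_prob
    total = 0.0
    for w, x in zip(weights, inputs):
        if rng.random() < keep:
            total += w * x / keep  # rescale so the expected output is unchanged
    return total


def mc_dropout_predict(weights, inputs, drop_prob=0.5, samples=1000, seed=0):
    """Mean and standard deviation over repeated stochastic forward passes."""
    rng = random.Random(seed)
    outputs = [
        dropout_forward(weights, inputs, drop_prob, rng) for _ in range(samples)
    ]
    return statistics.mean(outputs), statistics.stdev(outputs)


weights = [1.0, 2.0]
inputs = [3.0, 4.0]

mean, spread = mc_dropout_predict(weights, inputs, drop_prob=0.5)
exact, no_spread = mc_dropout_predict(weights, inputs, drop_prob=0.0)
print(mean, spread, exact, no_spread)
```

With the dropout probability set to zero, the procedure reduces to the deterministic output with zero spread, which is a convenient sanity check.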

Useful skills:

- Knowledge in neural networks

- Good background in stochastics or strong interest in learning probabilistic computing

- Some experience with recent deep learning frameworks (TensorFlow/PyTorch) or a strong drive to learn to master one

The topic is suitable to be a thesis at master level.

References:

[1] Ahmadi, A., & Tani, J. (2019). Neural Computation, 31, 2025–2074.

[2] Heinrich, S., Alpay, T., & Nagai, Y. (2020). Neuroscience, 389, 161-174.

[3] Murata, S., Namikawa, J., Arie, H., Sugano, S., & Tani, J. (2013). IEEE Transactions on Autonomous Mental Development, 5(4), 298-310.

[4] Fortunato, M., Blundell, C., Vinyals, O. (2017). WiML 2017, arXiv:1704.02798

Contact:

Bio-inspired image stabilizing on a walking robot / RGBD-Image stabilization for SLAM

Intelligent robotics ranges from bio-inspired learning, vision, and motor control to household assistance. Embodied applications in the real world face different kinds of challenges such as noisy sensory signals and system perturbations. For example, the shaking video taken from a walking robot’s camera is unpleasant to watch; however, when we walk, we perceive a stable environment. In this project, we will develop a bio-inspired digital image stabilization system to be used on small-sized humanoid robots such as NAO and Darwin-OP. The thesis can first address work on a video and then on a robot in real-time.

Goals:

- Implement a bio-inspired digital image stabilization system. The system should detect ego-motion and compensate for camera vibrations caused by walking or other body movements.

- Optimize the model for the Darwin-OP or the NAO robot for off-board and on-board processing.

- Another route for this work could be to deliver an always-upright RGBD image (image plus depth) from a Kinect/Xtion sensor on the robot as input to a simultaneous localisation and mapping (SLAM) algorithm. Available SLAM algorithms only work on wheeled robots because humanoids shake while walking.
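To make the compensation step concrete, the following is a minimal sketch of a conventional (not bio-inspired) baseline: the per-frame ego-motion estimates are accumulated into a camera path, the path is low-pass filtered, and the difference between the two is the shift to apply to each frame. The function name `smooth_trajectory`, the parameter `alpha`, and the assumption that ego-motion is already available as pixel translations are all illustrative choices, not part of the topic description.

```python
def smooth_trajectory(motions, alpha=0.9):
    """Return per-frame corrections (dx, dy) that damp camera shake.

    motions: list of (dx, dy) frame-to-frame ego-motion estimates in pixels
             (assumed to come from some separate motion estimator).
    alpha:   smoothing factor in [0, 1); larger values smooth more strongly.
    """
    corrections = []
    raw_x = raw_y = 0.0        # accumulated (shaky) camera path
    smooth_x = smooth_y = 0.0  # low-pass filtered camera path
    for dx, dy in motions:
        raw_x += dx
        raw_y += dy
        # Exponential moving average of the accumulated path.
        smooth_x = alpha * smooth_x + (1.0 - alpha) * raw_x
        smooth_y = alpha * smooth_y + (1.0 - alpha) * raw_y
        # Shifting the frame by this offset moves it onto the smooth path.
        corrections.append((smooth_x - raw_x, smooth_y - raw_y))
    return corrections


# Hypothetical input: a vertical bounce of 3 pixels per step while walking.
walking = [(0.0, 3.0 if i % 2 == 0 else -3.0) for i in range(20)]
offsets = smooth_trajectory(walking)
```

A bio-inspired system would replace both the motion estimator and the filter, but a baseline of this kind could serve as a reference for evaluation.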

Useful skills:

- Keen interest in hybrid intelligent systems, applied AI and robotics.

- Programming experience, preferably C/C++ and/or Python.

- Previous knowledge of digital signal processing techniques is helpful. Deeper knowledge will be acquired during the thesis.

Contact:

Retina-Inspired Image Preprocessing for Mobile Robots

Vision is one of the most used perception modalities for robots. Although deep learning has brought remarkable advances in object recognition, several challenging aspects of vision remain unsolved for embodied applications. These include light-weight learning and recognition systems that run in real time on embedded systems, improved response to high dynamic range (HDR) scenes with substantial differences in illumination, and better compression rates for the video stream sent from a robot to external processing units or used for monitoring the robot and its surroundings. Although these technologies are applied successfully in other fields, they have yet to transition to most robot frameworks.

Thus, the aim of this thesis is to develop low-latency retina-based pre-processing of visual information for ROS that improves high dynamic range (HDR) handling and the image compression ratio. ROS is the de facto middleware for robot applications in academic research and is quickly gaining traction in industrial applications. Hence, projects that improve and extend its functionality could have a meaningful impact on the robotics community as a whole.

Goals:

The goal is to develop a ROS node that processes images in a retina-inspired fashion and is capable of the following:

- Compress images based on the "rank-order coding" mechanism; see section 1.3.3 of Escobar et al. 2021.

- Process the images to increase their dynamic range; see Benzi et al. 2018.

- The user should be able to activate each capability independently when starting the ROS node.

- The node should be optimized to run as fast as possible, at 30 frames per second or higher.
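As a rough illustration of the idea behind rank-order coding, the sketch below transmits only the order of the strongest responses and reconstructs magnitudes from a rank-indexed lookup table. It is a strong simplification under stated assumptions: it works on a flat list of non-negative values instead of retinal filter responses, and the names `rank_order_encode`, `rank_order_decode`, and the lookup values are hypothetical. The mechanism described in Escobar et al. 2021 should be taken as the actual reference.

```python
def rank_order_encode(values, keep):
    """Return the indices of the `keep` largest values, strongest first.

    Only the order is transmitted, not the values themselves.
    Ties keep their original index order because Python's sort is stable.
    """
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    return order[:keep]


def rank_order_decode(ranked_indices, length, lookup):
    """Rebuild a signal from rank order alone.

    lookup[rank] is the magnitude assigned to the unit that fired at `rank`;
    units that were not transmitted stay at zero.
    """
    out = [0.0] * length
    for rank, idx in enumerate(ranked_indices):
        out[idx] = lookup[rank]
    return out


# Hypothetical example: five responses, only the three strongest are sent.
responses = [0.1, 0.9, 0.4, 0.0, 0.7]
code = rank_order_encode(responses, keep=3)
approx = rank_order_decode(code, len(responses), lookup=[1.0, 0.5, 0.25])
```

The compression comes from sending a short list of indices instead of all values; reconstruction quality then depends on how well the lookup table matches typical response magnitudes.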

Useful skills:

- Keen interest in hybrid intelligent systems, applied AI and robotics.

- Programming experience, preferably C/C++ and/or Python.

- Previous experience with the ROS middleware is helpful but not indispensable, since it will be acquired during the thesis.

References:

- Benzi, M., Escobar, M.-J., & Kornprobst, P. (2018). A Bio-Inspired Synergistic Virtual Retina Model for Tone Mapping. Computer Vision and Image Understanding, 168, 21–36. https://doi.org/10.1016/j.cviu.2017.11.013

- Escobar, M.-J., Alexandre, F., Viéville, T., & Palacios, A. G. (2021). Rapid Prototyping for Bio–Inspired Robots. In Rapid Roboting: Recent Advances on 3D Printers and Robotics (1st ed.). Springer International Publishing. https://www.springer.com/gp/book/9783319400013

Contact:

Indicative project topics in computing:

Bio-inspired auditory pathway

Robots need to be aware of their environment even if important things happen outside of their visual input field. A key factor is to move from simulation environments to embodied applications and architectures that present different kinds of challenges such as environmental noise and even ego-noise. In this project, we will develop a bio-inspired auditory model for our new iCub head. This system will mimic bottom-up auditory signal processing integrating known biological models into a single framework. The model will match time and degree of processing constraints and will act as the core architecture for developing future bio-constrained and bio-inspired models of sensory signal processing in the brain.

The model should include features such as a cochlear model, auditory adaptation (dynamic background noise filtering), ego-noise filtering, signal onset and end detection, auditory input classification or segmentation, and sound source localization. Please note that you do not need to address all of these features; they can be chosen according to your interest.
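As an example of one of these features, signal onset and end detection can be sketched with a simple short-time energy threshold. This is a purely technical baseline, not a bio-inspired model, and the function name `detect_sound_events`, the frame length, and the threshold are assumptions chosen for illustration.

```python
def detect_sound_events(samples, frame_len, threshold):
    """Return (onset_frame, end_frame) pairs; end_frame is exclusive.

    A frame counts as active when its mean squared amplitude exceeds
    `threshold`. Trailing samples that do not fill a frame are ignored.
    """
    events = []
    start = None
    n_frames = len(samples) // frame_len
    for f in range(n_frames):
        frame = samples[f * frame_len:(f + 1) * frame_len]
        energy = sum(s * s for s in frame) / frame_len
        if energy > threshold and start is None:
            start = f                      # onset: silence -> sound
        elif energy <= threshold and start is not None:
            events.append((start, f))      # end: sound -> silence
            start = None
    if start is not None:                  # sound lasts until the last frame
        events.append((start, n_frames))
    return events


# Hypothetical signal: silence, a burst of sound, silence again.
signal = [0.0] * 40 + [1.0, -1.0] * 20 + [0.0] * 40
events = detect_sound_events(signal, frame_len=10, threshold=0.1)
```

A fixed threshold breaks down under changing background noise, which is where the auditory adaptation listed above would come in.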

General goals:

- Implement a bio-inspired subcortical auditory pathway. The model should bring together already existing technical developments and develop bio-constrained algorithms not present in pure technical solutions of audio processing.

- Optimize the model for real-time applications targeting the NAO and iCub robots (either off-board or on-board processing).

- The implementation should be done with re-use in mind, i.e. robust implementation, well-designed interface, good documentation, extensibility, possibly bindings for ROS or YARP.

Useful skills:

- Keen interest in hybrid intelligent systems, applied AI and robotics.

- Programming experience, preferably C/C++ and/or Python.

- Previous knowledge of digital signal processing is helpful but not indispensable.

Contact:

Creating a neuroscience-inspired learning interacting robot

In this exciting project, we look at neuroscience-inspired architectures for controlling the behavior of a robot. While traditional robots have often been preprogrammed, our approach focuses on learning robots grounded in neuroscience evidence. Navigation and movement concepts are transferred to and further developed on a NAO robot, which has some speech and vision capabilities. The NeuroBot will learn to associate actions with words and pointing gestures. We want to limit the fixed manual programming of the robot and emphasize adaptive autonomous learning in neural networks, combined with restricted instructions via words and simple pointing. Some of the previous and current robot platforms available are shown at http://www2.informatik.uni-hamburg.de/wtm/neurobots. Students who participated in our project "Human Robot Interaction" can also suggest a further topic for their theses.

Goals:

- Learning navigation from simple multimodal input

- Robotic vision: Object recognition and object manipulation

- Implementation of neural network algorithms for speech and pointing instructions for NeuroBot

- At a later stage: vision and speech capabilities for the NAO

Requirements:

- Programming skills: C/C++, Python

- At least basic knowledge of neural network algorithms and natural language processing

- Willingness to work in a robotic environment

The thesis can be written in either German or English. All topics can be tailored to the Bachelor, Master, or PhD level. If you are interested, contact us for discussion:

Contact:

Internet text mining agents based on hybrid and neural learning techniques

Unrestricted, potentially faulty text messages arrive at a delivery point (e.g. an email address or World Wide Web address). These messages are scanned and then distributed to one of several expert agents according to a task criterion (e.g. language class, news group, or semantic library class). Expert agents can dynamically accept or reject a message, and the number of expert agents can vary over time. If the best possible expert agent is not available, the next best choice has to be found in order to supply the best possible instant answer. This dynamic unrestricted message routing task is new, and hybrid neural/symbolic techniques have not yet been examined for noisy dynamic internet message routing.
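The routing behaviour described above, including the fallback to the next best agent, can be sketched as follows. Keyword overlap is only a stand-in for the hybrid neural/symbolic classifier the thesis would develop, and the function `route_message`, the agent dictionaries, and their keywords are hypothetical.

```python
def route_message(text, agents):
    """Return the name of the best-matching available agent, or None.

    agents: list of dicts with 'name' (str), 'keywords' (set of str),
            and 'available' (bool).
    Agents are ranked by keyword overlap with the message; unavailable
    agents are skipped so that the next best choice is used instead.
    """
    words = set(text.lower().split())
    ranked = sorted(agents,
                    key=lambda a: len(words & a["keywords"]),
                    reverse=True)
    for agent in ranked:
        if agent["available"]:
            return agent["name"]
    return None


# Hypothetical agents; the best match (sports) is currently unavailable.
agents = [
    {"name": "sports", "keywords": {"football", "match", "goal"}, "available": False},
    {"name": "news", "keywords": {"election", "match"}, "available": True},
    {"name": "tech", "keywords": {"software", "robot"}, "available": True},
]
chosen = route_message("The football match ended with a late goal", agents)
```

In this example the message falls through to the "news" agent, the best-scoring agent that is still available.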

Goals:

- Development of hybrid neural and symbolic processing methods

- Implementation of a hybrid learning agent for text mining

Requirements:

- Programming skills: C/C++, Python, Matlab

- Knowledge of neural networks, symbolic processing, and mining techniques

- Scientifically sound evaluation of conducted experiments

The thesis is suitable at the bachelor or master level.