Research Projects

Our objective is to conduct research into artificial intelligence and knowledge technology for intelligent systems. Our new approaches are often motivated by nature, e.g. by the brain, cognition and neuroscience. We want to study and understand nature-inspired representations, in particular hybrid neural and symbolic ones, in order to build next-generation intelligent systems. Such systems include, for instance, adaptive interactive knowledge discovery systems, learning crossmodal neural agents with vision and language capabilities, and neuroscience-inspired continually learning robots.

GREET (Generative Explainee-aware Explainability and Transparency in Proactive Cyber-Physical Eco-Environments)

GREET is an interdisciplinary European MSCA training network of leading academic and industrial partners in Cyber-Physical Systems (CPS), AI, software engineering and cognitive interaction research. It aims at training young scientists, technologists, and entrepreneurs to advance Europe’s scientific and technological innovation in generative AI-driven CPS, including their proactivity and explainability. GREET combines research training with a range of academic and industrial placements, specialist research and knowledge transfer workshops. It will develop breakthrough CPS and services that feature generative cognition, explainee-aware generative explainability, and transparent proactivity in highly secured CPS eco-environments. GREET will deliver key insights into the science and models of the proposed CPS, setting up its scientific foundation, and equipping the Doctoral Network’s recruited Doctoral Candidates with skills to drive the next innovative steps in this key area of generative AI-driven CPS. These steps include building a sustainable innovation ecosphere and community, and disseminating and exploiting the new CPS’s learning and understanding of the eco-internals, eco-surroundings and eco-dynamics in many vital societal and economic services, including Industry 5.0, smart city, healthcare, energy, emergency handling, social robotics and elderly care. The innovation perspective of these new CPS will be particularly important for services that involve humans and for critical situations, e.g., emergencies and hazardous environments.

Duration: 4 years

PI: Prof. Dr. Stefan Wermter, Dr. Cornelius Weber, Dr. Matthias Kerzel

Associate: to be named

Details:

GREET project

Lifelong Multimodal Language Learning by Explaining and Exploiting Compositional Knowledge (LUMO)

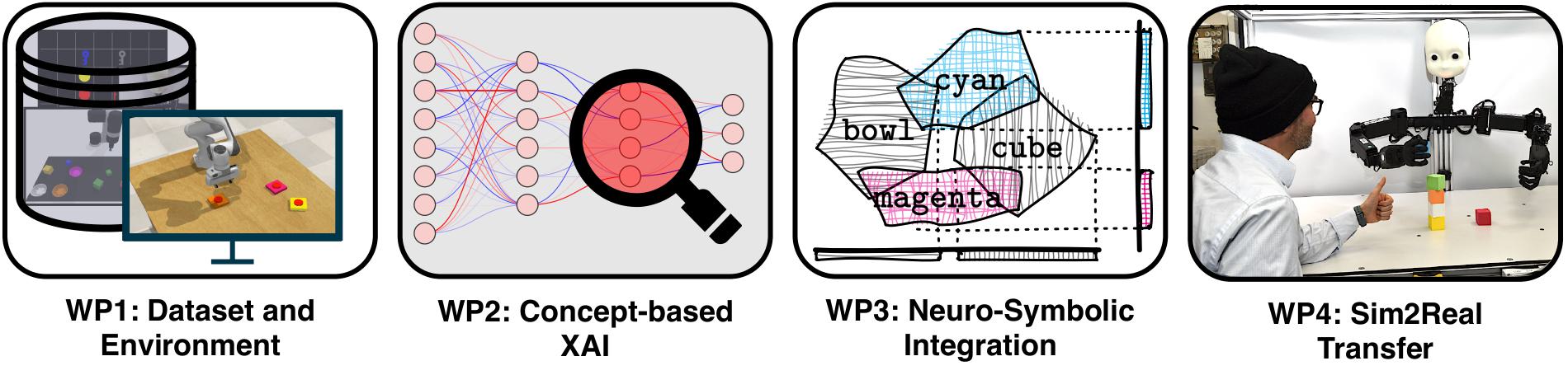

Learning and using language for understanding, conceptualizing, and communicating is a particular hallmark of humans. This has motivated the development of multimodal deep learning models that learn and think like humans. Existing multimodal models, however, have problems in a lifelong language learning setting, where they are confronted with changing tasks while having to retain previously learned knowledge. This has been considered a challenging obstacle to their application to real-world scenarios. The goal of the proposed project LUMO is to explore the important yet challenging research question of how to make multimodal models robust against task changes (or distribution shifts) by explaining and exploiting compositional knowledge. In devising such Lifelong Learning Multimodal Models (LLMMs), our first objective is to develop datasets and environments for two representative multimodal language learning tasks, i.e., vision and language integration and language-conditioned robotic manipulation, where our focus is on concepts, relations, and actions that can be combined in novel ways across changing tasks.

Our second objective is understanding why certain approaches lead to more robust LLMMs. We will address this by scrutinizing how concepts and relations emerge inside an LLMM using concept-based XAI methods. We also aim to understand the training dynamics of the formation of concepts and relations in an LLMM to elucidate compositional generalization on the one hand and catastrophic forgetting on the other.

Our third objective is to develop a neuro-symbolic approach that is tightly integrated with the model and improves the model's lifelong learning performance. We observe that inner interpretability not only helps us understand the reason for the robustness or brittleness of an approach, but also has the potential to debug spurious correlations in an LLMM. We hypothesize that the features of a concept form a region in the embedding space, such that one can apply symbolic constraints using vector space semantics to those regions to improve the robustness of an LLMM. The insights obtained from the research will be examined in real language-conditioned robotic manipulation scenarios, where we aim at a sim2real transfer, i.e., transferring skills from simulation to the real world.

PIs: Dr. Jae Hee Lee, Department of Informatics, Universität Hamburg

Prof. Dr. Stefan Wermter, Department of Informatics, Universität Hamburg

Details:

LUMO project

SWEET - Social aWareness for sErvicE roboTs

Abstract

Developing autonomous robots that comply with social conventions and expectations and avoid rejection by humans requires that robots be aware of the social context in which they operate. To this end, robots need to be endowed with high levels of reactivity, proactivity, responsiveness, and intelligibility. The Doctoral Network - Industrial Doctorates on Social aWareness for sErvicE roboTs (SWEET) aims at training a new generation of researchers and professionals able to advance the development of socially aware robots capable of perceiving, interpreting, and responding to human emotions, intentions, and cultural differences. The training program will offer a diverse curriculum, encompassing theoretical knowledge, hands-on technical skills, and real-world application scenarios. The network's interdisciplinary approach includes various fields, such as artificial intelligence, machine learning, human-robot interaction, computer vision, and cognitive sciences. Doctoral candidates will be immersed in cutting-edge research and innovation, gaining insights from the experience of acclaimed industrial and academic research groups. The network will place a strong emphasis on ethical considerations and responsible innovation, deploying socially aware robots aligned with societal values and promoting inclusivity.

Doctoral Candidates will also have the opportunity to participate in a unique coaching program for the continuous professional development of their individual soft and leadership skills. Integration Milestones following a Scenario-Based Learning approach will provide co-working activities in which collaborative design and implementation are fostered. The inter-sectoral collaboration between academia, user groups’ representatives, business developers, and robot manufacturers in the project will further strengthen the novelty and impact of the research and training, and help ensure that research results are economically, socially, and technically feasible.

Duration: 4 years

PI: Prof. Dr. Stefan Wermter

Associate: Dr. Cornelius Weber, Dr. Matthias Kerzel

Details:

SWEET project

TRAIL: TRAnsparent, InterpretabLe Robots

TRAIL is a new Doctoral Network project, funded by the European Commission as a Marie Skłodowska-Curie Action, running from 1.3.2023 to 28.2.2027.

Abstract

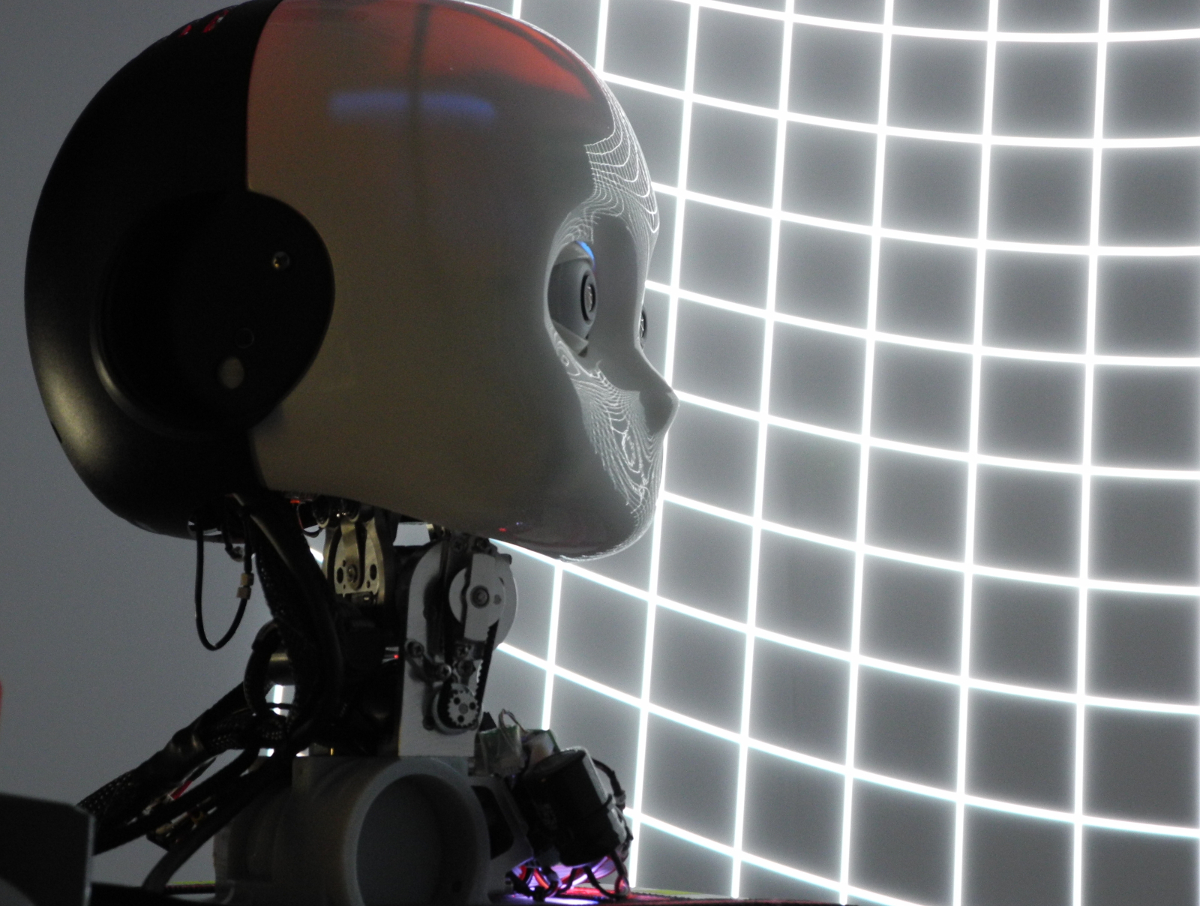

TRAIL strategically focuses on a novel, highly interdisciplinary and cross-sectorial research and training programme for a better understanding of transparency in deep learning, artificial intelligence and robotics systems. In order to train a new generation of Early Stage Researchers (ESRs) to become experts in the design and implementation of transparent, interpretable neural systems and robots, we have built a highly interdisciplinary consortium of partners with long-standing expertise in cutting-edge artificial intelligence and robotics, including deep neural networks, computer science, mathematics, social robotics, human-robot interaction and psychology. In order to build transparent robotic systems, these ESRs need to learn about the theory and practice of the principles of (1) internal decision understanding and (2) external transparent behaviour. Since the ability to interpret complex robotic systems needs highly interdisciplinary knowledge, we will start, on the decision level, to interpret deep neural learning and analyse what knowledge can be efficiently extracted. At the same time, on the behaviour level, the disciplines of human-robot interaction and psychology will be key in order to understand how to present the extracted knowledge as behaviour in an intuitive and natural way to a human user, so as to integrate the robot into a cooperative human-robot interaction.

A scaffolded training curriculum will guarantee that the ESRs not only have a deep understanding of both research areas, but also receive optimal skills training to be fully prepared for a successful research career in academia and industry. The importance of this research to industry is evident from the full commitment of 7 leading European and world-wide-operating robotics companies that together cover the majority of Europe’s robot market and a broad spectrum of AI applications.

PI: Prof. Dr. Stefan Wermter

Associate: Theresa Pekarek Rosin, Sergio Lanza, Julia Gachot

Details:

TRAIL project

TERAIS - Towards Excellent Robotics and Artificial Intelligence at a Slovak university

Partners:

Cognitive Systems, Comenius University in Bratislava Slovakia

Knowledge Technology, Universität Hamburg, Germany

IIT Istituto Italiano di Tecnologia, Italy

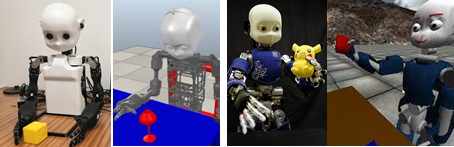

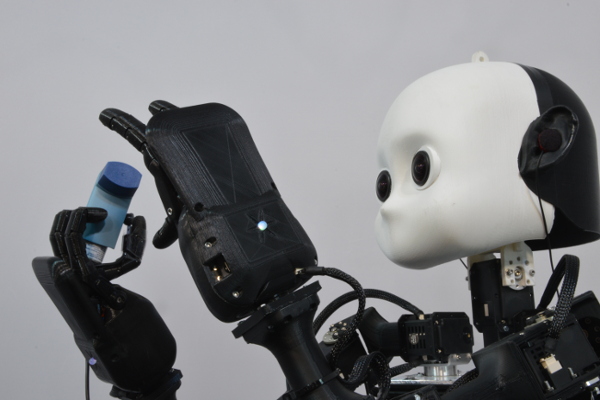

The TERAIS project aims to help the Department of Applied Informatics at Comenius University in Bratislava become a workplace of international academic excellence in Robotics and Artificial Intelligence. To achieve this, the project aims to (1) prepare conditions for the systematic development of research capacity in Artificial Intelligence and Robotics, and (2) establish sustainable networking and collaboration links between the partner institutions Comenius University in Bratislava, Universität Hamburg and the Italian Institute of Technology, in order to boost international research at Comenius University.

The partnership will comprise, in addition to the Department of Applied Informatics at Comenius University in Bratislava, the Knowledge Technology team at Universität Hamburg, with excellent expertise in neural networks, deep learning and crossmodal learning for developmental robotics, and two units at the Italian Institute of Technology in Genoa, with excellent experience in humanoid robotics, control using physical and simulated robots, and human-robot interaction. The project is expected to contribute to promoting research excellence in AI and Robotics at Comenius University in Bratislava, based on the expertise of the Knowledge Technology team in Hamburg and the IIT team in Genoa.

In terms of research, the TERAIS project spans cognitive robotics and human-robot interaction, areas that aim to improve the quality of life. Constructivist computational approaches (understanding cognition by building artifacts) are expected to play an increasingly important role in the future. Artificial neural networks, as a successful machine learning approach, are primarily used as AI building blocks for robots. Cognitive robotics stands on three pillars (research areas): artificial intelligence (AI), cognitive science and robotics. In this process, cognitive science will very likely help build more explainable, trustworthy and sense-making AI solutions. Flexibility and versatility are even more pronounced in cognitive robotics, enabling truly multimodal flexible robots deployable for human-oriented applications and human-robot interaction.

PI: Prof. Dr. Stefan Wermter

Associate: Hassan Ali, Dr. Matthias Kerzel, Dipl.-Ing. Erik Strahl, Dr. Cornelius Weber

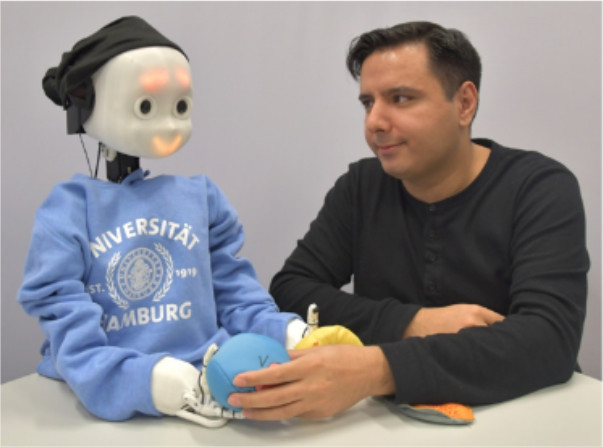

Modeling a Robot’s Peripersonal Space and Body Schema for Adaptive Learning and Imitation (MoReSpace)

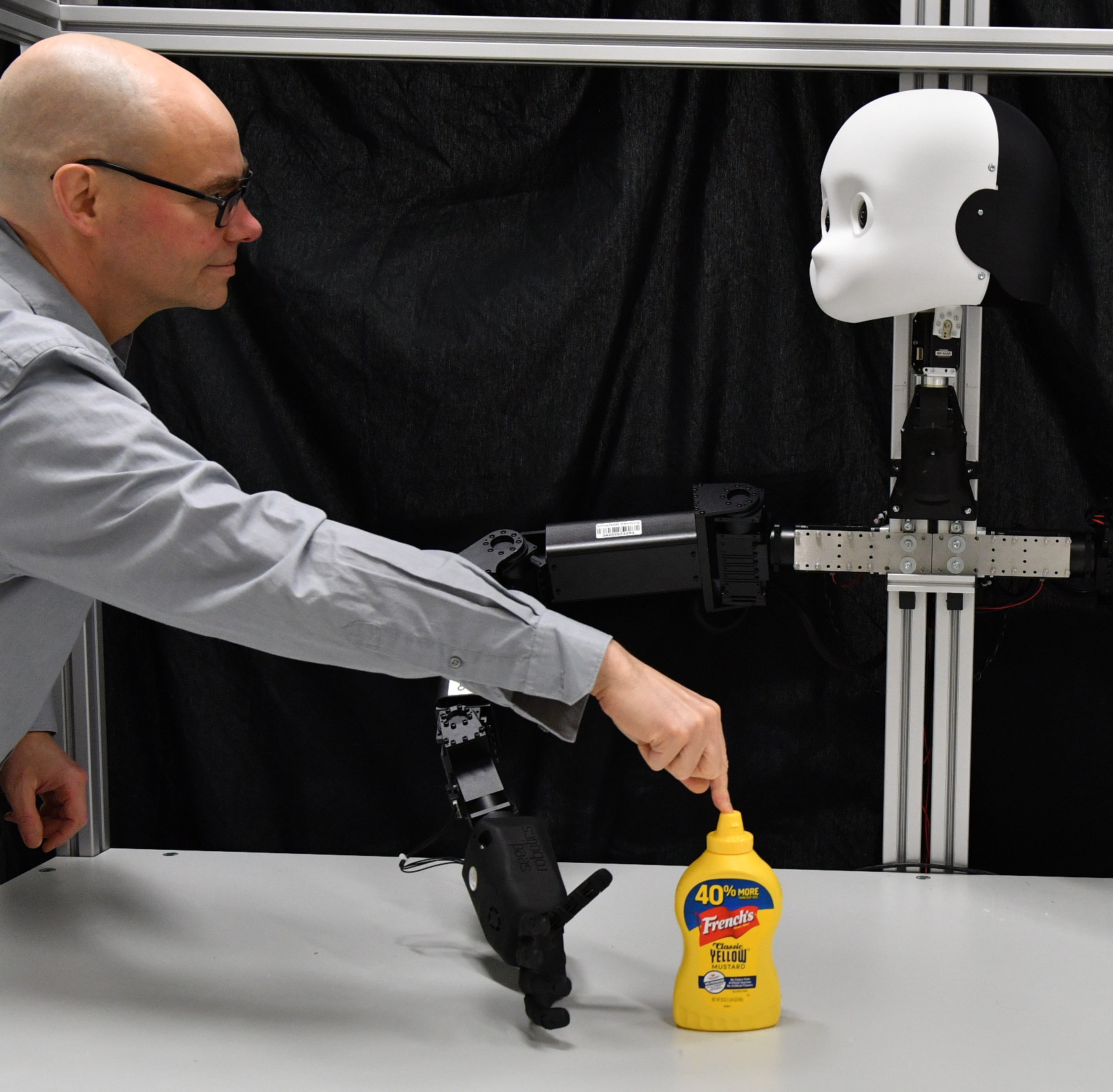

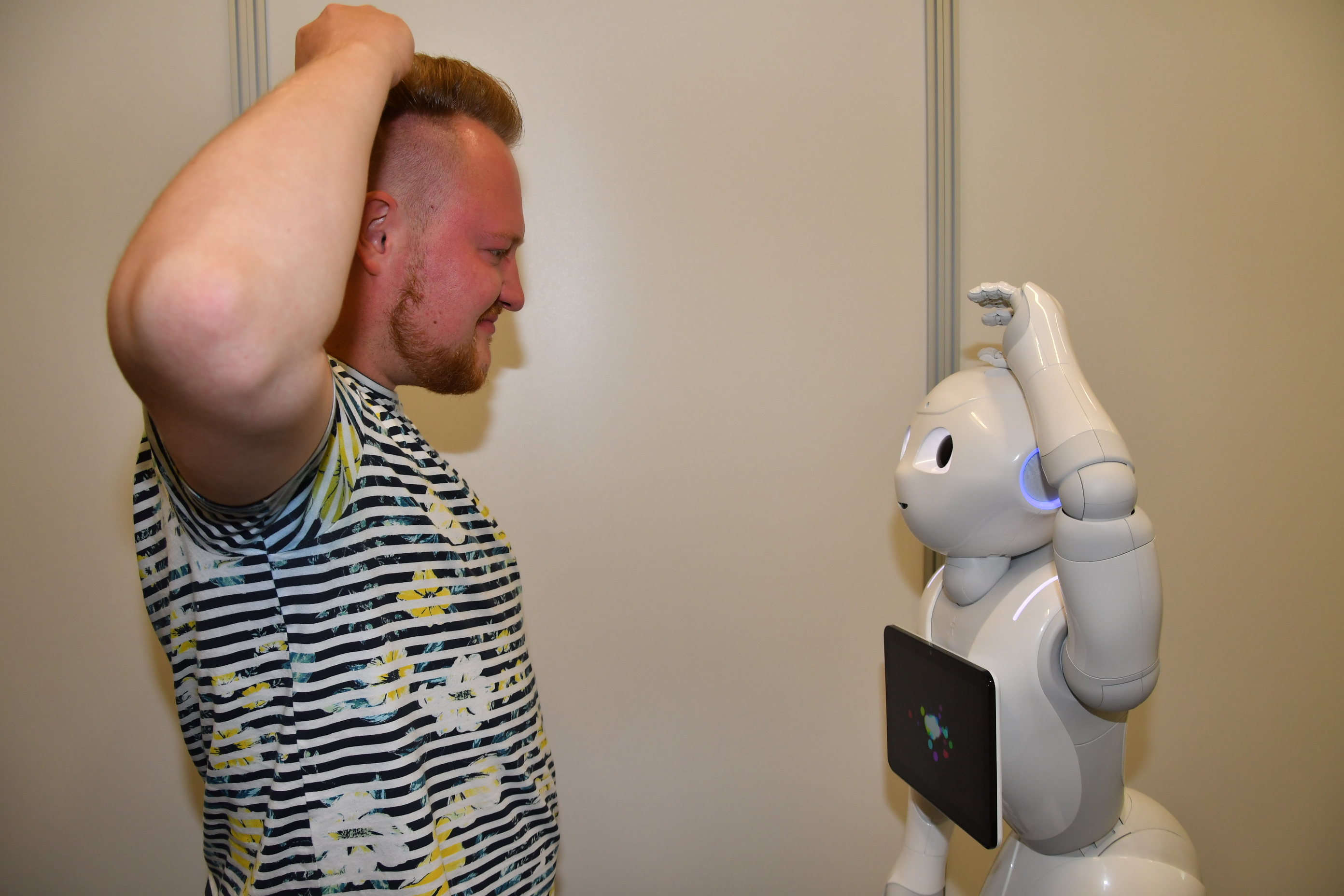

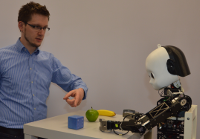

The DFG-funded project MoReSpace concentrates on research, design, development and evaluation of neurocomputational deep learning methods for intelligent robot assistants to explore human-robot interaction. In particular the project includes research into modeling a robot’s peripersonal space and body schema for adaptive learning and imitation. Our research questions focus on a) adaptive decision-making with conflicting sensations and b) on self-other transfer and imitation learning. We will develop a novel conflict-driven attention mechanism by considering psychological phenomena that involve conflicting sensations. Furthermore, we will develop learning from observation by developing a projection mechanism to map an observer’s body schema to an observed agent. We expect the resulting framework to improve the capabilities of robotic agents to handle conflicting sensor data and to improve human-robot interaction scenarios in the context of the different morphologies of the NICO and NICOL robots. Our experiments will take place in a table-top scenario and mainly involve object manipulation experiments, including block-stacking and tool-use tasks. Together with our collaborators, we will first conduct robot-robot interaction and later human-robot-interaction experiments.

PI: Prof. Dr. Stefan Wermter

Associates: Josua Spisak, Dr. Matthias Kerzel, Dipl.-Ing. Erik Strahl, Dr. Cornelius Weber

Details: MoReSpace project

Sicheres Sprachdialogsystem zur multimodalen Integration von Dienstleistungen (SIDIMO)

SIDIMO is an R&D cooperation project within the ZIM-network “DEMOBIS”. In collaboration with our project partner CIBEK, this project aims to develop a robust German speech recognition system for elderly people in a smart-home environment. Systems that support elderly people in their home environments help their target group to continue living independently and in a self-determined way for longer. However, existing speech recognition systems for home environments usually transfer recorded data to third-party servers, which raises a series of privacy issues. Additionally, while language as an intuitive means of communication can facilitate interactions of inexperienced users with assistance systems, automatic speech recognition (ASR) systems often struggle with elderly voices due to irregularities in speech patterns or indistinct pronunciations.

The goal of SIDIMO is to develop a speech recognition system, which 1) adjusts existing network architectures for German ASR to work locally, keeping private data in the possession of the user, and 2) can continually adapt to the changing speech of elderly users to increase speech recognition accuracy.

Duration: 01.10.2021 - 30.09.2023

PI: Prof. Dr. Stefan Wermter

Associate: Theresa Pekarek Rosin, Dr. Matthias Kerzel

Details: SIDIMO project

VeriKAS – Verification of learning AI applications

PI: Prof. Dr. Stefan Wermter

Associates: Dr. Sven Magg, Dr. Matthias Kerzel, Wenhao Lu

Details: VeriKAS project

LemonSpeech: Next Generation Speech Recognition and Speech Control for German

LemonSpeech is a government-funded project within the EXIST program of the Bundesministerium für Wirtschaft und Energie. It is based on seven years of research on domain-dependent automatic speech recognition (ASR) performed by the Knowledge Technology group. It aims to transfer the research from English ASR to German ASR and to evaluate the possibility of a market entry. Further research is also being performed to reach state-of-the-art performance in German ASR. The novel models aim to achieve performance equivalent to that of computationally intensive cloud-based ASR models, while being runnable on inexpensive local hardware. The models developed in recent years already run on this hardware, and future models will be adapted to it.

Duration: August 1st, 2021 - July 31st, 2022

Mentor: Prof. Dr. Stefan Wermter

Associates: Dr. Johannes Twiefel, Matthias Möller, Felix Hattemer

Learning Conversational Action Repair for Intelligent Robots (LeCAREbot)

What are the principal mechanisms required to capture the robustness and interactivity of human communication, given the situational, noisy and often ambiguous nature of natural language? And how, and to what extent, can we integrate these mechanisms within an embodied functional model that is computationally and empirically verifiable on a physical robot?

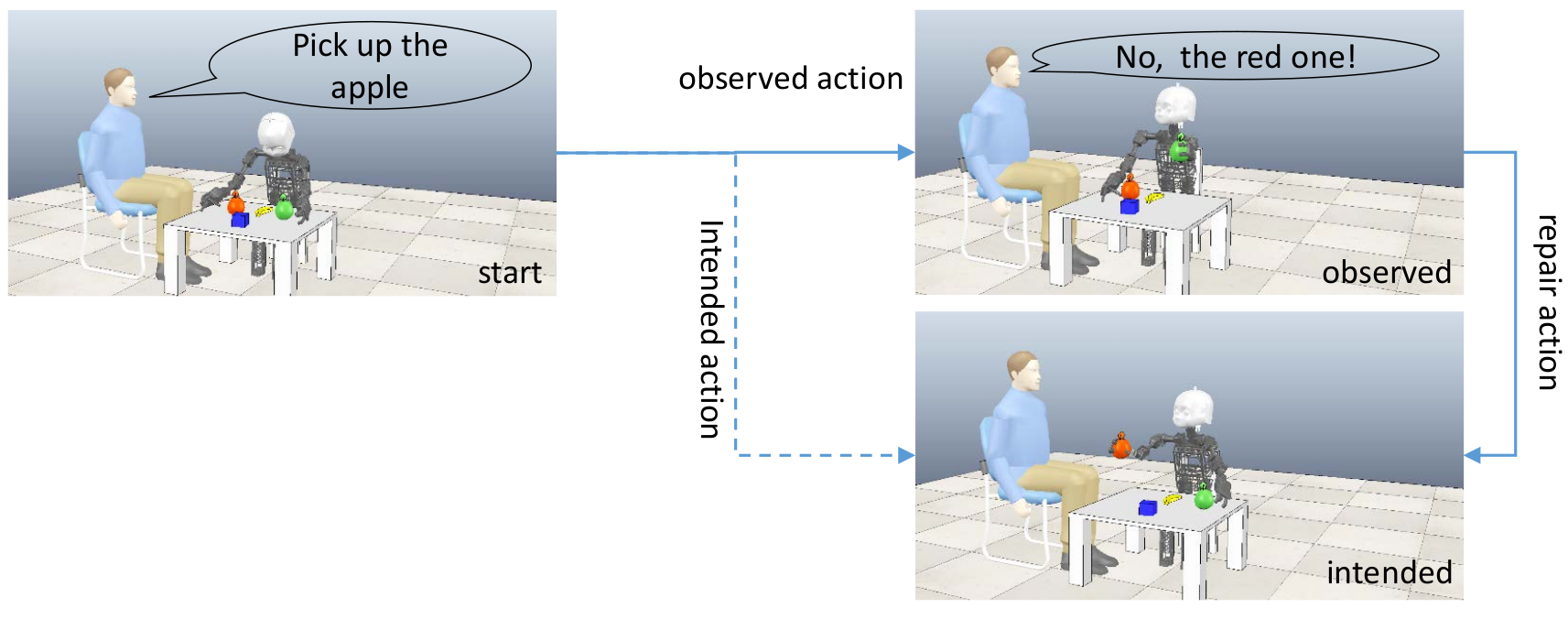

We address these research questions by investigating the linguistic phenomenon of conversational repair (CR) – a method to edit and to re-interpret previously uttered sentences that were not correctly understood by the hearer. As an example, consider the following scenario:

A human operator issues the underspecified command “Pick up the apple”. The operator intends to let the robot pick up the red apple, but the robot assumes that the human refers to the green apple (possibly because it is closer to the robot). As it picks up the green apple, the observed course of actions deviates from the user’s intended course of actions. Shortly after the human recognizes this error, he utters a repair command that lets the robot pick up the red apple.

Our own and other existing computational models for human-robot dialog consider conversational repair in the case of non-understandings, but they do not consider misunderstandings. In the LeCAREbot project, we will investigate how misunderstandings can be addressed in human-robot dialog. Herein, we develop compositional state representations for mixed verbal-physical interaction states, and we will develop a hybrid neuro-symbolic approach to learn such state abstractions. We will combine the state abstraction with model-based hierarchical reinforcement learning to realize robotic scenarios where conversational repair plays a critical role.

PIs: Dr. Manfred Eppe, Prof. Dr. Stefan Wermter

Details: LeCAREbot project

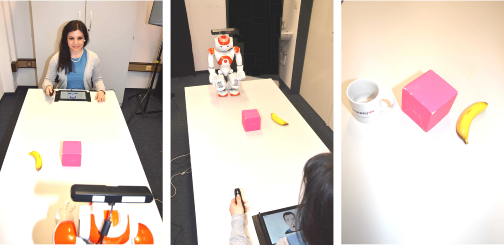

LingoRob - Learning Language in Developmental Robots

LingoRob is a DAAD-funded project between our project partners at Inria, Bordeaux and the Knowledge Technology group. The scientific objective of the collaboration is to better understand the mechanisms underlying language acquisition and to enable more natural interaction between humans and robots in different languages, while modeling how the brain processes sentences and integrates semantic information of scenes. Models developed in both labs involve artificial neural networks, in particular Echo State Networks (ESN), which belong to the Reservoir Computing framework. These neural models allow insights into high-level processes of the human brain, and at the same time are well suited as a robot control platform, because they can be trained and executed online with low computational resources. Object localization and recognition are implemented using computer vision approaches as a baseline and using deep learning methods to progress on the generalisation of the scenario.
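As a rough illustration of why Echo State Networks suit online use on modest hardware, the following sketch keeps the reservoir weights fixed and random and trains only a linear readout, one sample at a time. It is not the LingoRob model: the class name `TinyESN`, the least-mean-squares readout rule, the row-sum scaling of the recurrent weights and the sine-wave task are all assumptions chosen for brevity.

```python
import math
import random


class TinyESN:
    """Minimal Echo State Network with an online (LMS) readout. Illustrative only."""

    def __init__(self, n_inputs, n_reservoir, scale=0.9, seed=0):
        rng = random.Random(seed)
        self.n = n_reservoir
        # Fixed random input and recurrent weights; only the readout is trained.
        self.w_in = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                     for _ in range(n_reservoir)]
        w = [[rng.uniform(-1.0, 1.0) for _ in range(n_reservoir)]
             for _ in range(n_reservoir)]
        # Rescale so the largest absolute row sum equals `scale` (< 1).
        # This bounds the spectral radius by `scale`, a simple sufficient
        # condition for fading memory with a tanh reservoir.
        max_row = max(sum(abs(v) for v in row) for row in w)
        self.w = [[v * scale / max_row for v in row] for row in w]
        self.state = [0.0] * n_reservoir
        self.w_out = [0.0] * n_reservoir

    def step(self, u):
        """Update the reservoir with input vector u and return the readout."""
        new_state = []
        for i in range(self.n):
            pre = sum(a * b for a, b in zip(self.w_in[i], u))
            pre += sum(a * b for a, b in zip(self.w[i], self.state))
            new_state.append(math.tanh(pre))
        self.state = new_state
        return sum(a * b for a, b in zip(self.w_out, self.state))

    def train_step(self, u, target, lr=0.05):
        """One online update: LMS rule applied to the readout weights only."""
        y = self.step(u)
        err = target - y
        self.w_out = [w + lr * err * s for w, s in zip(self.w_out, self.state)]
        return err


def demo(steps=3000, dt=0.2):
    """Learn online to predict the next sample of a sine wave.

    Returns the mean absolute error over the first and the last 100 steps.
    """
    esn = TinyESN(n_inputs=1, n_reservoir=20)
    errors = []
    for t in range(steps):
        u = [math.sin(t * dt)]
        target = math.sin((t + 1) * dt)
        errors.append(abs(esn.train_step(u, target)))
    early = sum(errors[:100]) / 100
    late = sum(errors[-100:]) / 100
    return early, late


if __name__ == "__main__":
    early, late = demo()
    print(f"mean |error| early: {early:.4f}, late: {late:.4f}")
```

Each training step costs one pass over the reservoir weights plus one pass over the readout weights, which is what makes this kind of model cheap to run on a robot. In practice the readout is often fitted with ridge regression or recursive least squares instead of plain LMS.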

Programme: DAAD, Programm Projektbezogener Personenaustausch Frankreich 2017

Duration: 01.01.2017 - 31.12.2018

PIs: Dr. Xavier Hinaut, Dr. Cornelius Weber, Prof. Dr. Stefan Wermter

Staff: Johannes Twiefel, Dr. Dr. Doreen Jirak

Details: LingoRob Project

Ideomotor Transfer for Active Self-emergence (IDEAS)

Humans possess very sophisticated learning mechanisms that allow them, for example, to learn a sports discipline or task, and to transfer certain movement patterns and skills from this discipline to improve their performance in another discipline or task. This is possible, even if the transfer task is not immediately related to the initially learned task. It is this important transfer learning ability that enables humans to solve problems they have never encountered before, in ways that are beyond the current capabilities of robots and artificial agents. However, the neural foundations of transfer learning and its role in the emergence of a self are still mostly unknown, and there exists no generally accepted functional neural model of transfer learning.

Dr. Manfred Eppe and Prof. Stefan Wermter from the Knowledge Technology group have been awarded a grant within the DFG priority programme The Active Self to address this issue.

PIs: Dr. Manfred Eppe, Prof. Dr. Stefan Wermter

Details: IDEAS project

KI-SIGS: AI Space for Intelligent Health Systems - AP390.1: Detection of Whole-body Posture and Movement

KI-SIGS is a government-funded project of the Bundesministerium für Wirtschaft und Technologie that aims to create an AI space for intelligent health systems in collaboration with the University of Bremen, University of Lübeck, University of Kiel and the University of Hamburg as well as medical technology companies and university clinics in Northern Germany. Examples of intelligent health systems are adaptive medical systems and robot assistance systems, but also systems for smart living at home. The Knowledge Technology group focusses on the robot assistance systems, especially on the detection of whole-body posture and movement.

Duration: April 1st, 2020 - March 31st, 2023

PI: Prof. Dr. Stefan Wermter

Associates: Dr. Philipp Allgeuer, Dr. Matthias Kerzel

General Project Context:

KI-SIGS project page

https://ki-sigs.de

The Neural Microcircuits of Problem-solving and Goal-directed Behavior

What are the neuro-computational principles of the advanced problem-solving capabilities that we observe in higher animals like corvids and primates, and how can we use those principles as the basis for a computational model in an acting robot?

Dr. Manfred Eppe and Prof. Stefan Wermter from the Knowledge Technology group have been awarded a grant within the Experiment! programme of the Volkswagen Stiftung (acceptance rate for this programme is below 5%) to address this question within the project "The Neural Microcircuits of Problem-solving and Goal-directed Behavior".

The aim of this high-risk/high-gain project is to design and build a computational model of neural problem-solving

PIs: Dr. Manfred Eppe, Prof. Dr. Stefan Wermter

Details: Neural p

Topological Deep Multiple Timescale Recurrent Neural Network for Cognitive Learning and Memory Modelling towards Human-level Synthetic Intelligence (NeuroSynthelligence)

The Knowledge Technology group and Prof. Dr. Chu Kiong Loo from the University of Malaya in Malaysia have been awarded a Georg Forster Research Fellowship for Experienced Researchers by the Alexander von Humboldt Foundation. Chu Kiong Loo will be hosted by the Knowledge Technology team in Hamburg for 17 months over consecutive visits between September 2018 and March 2021 starting in July 2018. This research project is in the area of Hybrid Neural Systems and Unsupervised Learning, and focuses in particular on Topological Deep Multiple Timescale Neural Networks for Cognitive Learning and Memory Modelling towards Human-level Synthetic Intelligence. This "NeuroSynthelligence" project combines several different neural methodologies developed by Prof. Loo and by the Knowledge Technology team, such as multimodal stimulus processing, recurrent memory, and symbolic and language processing, in order to achieve intelligent interactive behaviour on the Knowledge Technology NICO robot.

PIs: Prof. Chu Kiong Loo, Prof. Dr. Stefan Wermter, Dr. Cornelius Weber

Details: NeuroSynthelligence Project

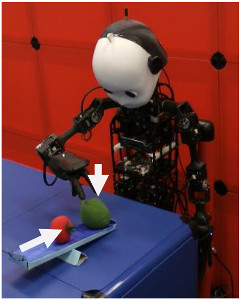

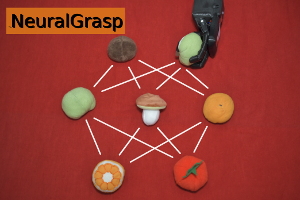

Neural Network-based Robotic Grasping via Curiosity-driven Reinforcement Learning (NeuralGrasp)

Reinforcement Learning (RL) helps robots learn behaviors including complex manipulation and navigation tasks autonomously, purely from trial-and-error interactions with the environment and resulting in an optimal policy, which represents the desired behavior of the robot. However, exploring the environment to collect useful experience data is often difficult, especially for grasping tasks where high-dimensional visual data and multiple degrees of freedom are involved. The project proposes to address this problem in RL by looking into exploration strategies inspired by infant cognition and sensorimotor development, particularly the use of curiosity as an intrinsic motivation.
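One common way to turn curiosity into an intrinsic reward is to pay the agent for transitions that its own forward model predicts poorly. The sketch below illustrates that idea only; it is not the NeuralGrasp method. The linear forward model, the names `LinearForwardModel` and `curiosity_reward`, and the toy one-dimensional transitions are assumptions made for the example.

```python
class LinearForwardModel:
    """Predicts the next state from (state, action) with a linear map learned online."""

    def __init__(self, state_dim, action_dim, lr=0.5):
        self.lr = lr
        in_dim = state_dim + action_dim
        self.weights = [[0.0] * in_dim for _ in range(state_dim)]

    def predict(self, state, action):
        x = list(state) + list(action)
        return [sum(w * v for w, v in zip(row, x)) for row in self.weights]

    def update(self, state, action, next_state):
        """Gradient step on the squared prediction error.

        Returns the squared error measured before the weights are changed.
        """
        x = list(state) + list(action)
        pred = self.predict(state, action)
        errors = [t - p for t, p in zip(next_state, pred)]
        for row, e in zip(self.weights, errors):
            for j, v in enumerate(x):
                row[j] += self.lr * e * v
        return sum(e * e for e in errors)


def curiosity_reward(model, state, action, next_state, scale=1.0):
    """Intrinsic reward: the forward model's prediction error on this transition.

    The model is updated as a side effect, so repeated transitions become
    less rewarding and the agent is pushed towards unfamiliar ones.
    """
    return scale * model.update(state, action, next_state)


if __name__ == "__main__":
    model = LinearForwardModel(state_dim=1, action_dim=1)
    # Visit the same transition repeatedly: the reward shrinks as it is learned.
    familiar = [curiosity_reward(model, [0.5], [0.2], [0.7]) for _ in range(50)]
    # A transition with a different action is still surprising.
    novel = curiosity_reward(model, [0.5], [-0.2], [0.3])
    print(f"first: {familiar[0]:.4f}, last: {familiar[-1]:.2e}, novel: {novel:.4f}")
```

In a full RL setup this intrinsic term would typically be added to the task reward, so that exploration continues even while the grasping reward itself is sparse.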

PIs: Prof. Dr. Stefan Wermter, Dr. Cornelius Weber

Associates: Burhan Hafez

Details: NeuralGrasp Project

Bio-inspired Indoor Robot Navigation (BIONAV)

Bio-inspired robot navigation draws on neuroscience and navigation behaviors in animals and makes hypotheses about how navigation skills are acquired and implemented in an animal's brain and body. This project aims to test some of these hypotheses and to develop the underlying mechanisms towards a biologically plausible navigation system for a real robot platform, including spatial representation, path planning and collision avoidance.

PIs: Prof. Dr. Stefan Wermter, Dr. Cornelius Weber

Associates: Xiaomao Zhou

Details: BIONAV Project

DFG-Transregio "Cross-Modal Learning" (CML) Project A5 - Neurorobotic models for crossmodal joint attention and social interaction

The goals of project A5 are to realize human behaviour studies and neurorobotic models in order to better understand the influence of social behaviour on joint attention, in particular focused on affective behaviour and deictic communication. We will design and implement novel neurocomputational models based on recurrent self-organizing networks to model, represent, and process affective behaviour related to joint attention. We will also develop and evaluate continual learning in deep neural networks to process spatial information from deictic communication and to examine its relation to joint attention. Finally, we will develop a natural Human-Robot Interaction scenario to evaluate the impact of the proposed models on the behaviour of a robot when interacting with humans.

PIs: Prof. Dr. Stefan Wermter, Prof. Dr. Xun Liu

Associates: Dr. Hugo Carneiro, Fares Abawi, Di Fu

Details: A5 on www.crossmodal-learning.org

DFG-Transregio "Cross-Modal Learning" (CML) Project C4 - Neurocognitive models of crossmodal language learning

Project C4 will focus on the research question of how to build a neural cognitive model that integrates embodied and knowledge-based crossmodal information into language learning. The goals include the realisation of large-scale embodied and disembodied data collections, the investigation of neurocognitive mechanisms in computational models as well as data-driven representation models for language learning, and the integration and study of crossmodal models for large-scale technical systems. In particular, the project will examine a variety of language functions inspired by the processing in the human brain, study representation learning architectures for language learning from large-scale data and knowledge bases, and transfer them into prototypes for human-robot interaction.

PIs: Prof. Dr. Zhiyuan Liu, Dr. Cornelius Weber, Prof. Dr. Stefan Wermter

Associates: Dr. Jae Hee Lee, Tobias Hinz

Details: C4 on www.crossmodal-learning.org

DFG-Transregio "Cross-Modal Learning" (CML) Project Z3 - Integration initiatives for model software and robotic demonstrators

Project Z3 will actively guide and support the implementation, adaptation, and integration of crossmodal learning models resulting from individual projects within the DFG-Transregio "Cross-Modal Learning" (CML). To this end, Z3 will develop and maintain robotic architectures and laboratory environments. The integrated models will be embedded into robots and systems for training, evaluation, and demonstration. Together, these robots and systems will support the demonstration of the scientific performance of the TRR that can only result from the integration of interdisciplinary research.

PIs: Prof. Dr. Jianwei Zhang, Prof. Dr. Stefan Wermter, Prof. Dr. Fuchun Sun

Associates: Dr. Matthias Kerzel, Dr. Burhan Hafez

Details: Z3 on www.crossmodal-learning.org

SOCRATES - Social Cognitive Robotics in The European Society

To address the aim of successful multidisciplinary and intersectoral training, SOCRATES training is structured along two dimensions: thematic areas and disciplinary areas. The thematic perspective encapsulates functional challenges that apply to R&D. The following five thematic areas are identified as particularly important, and hence are given special attention in the project: Emotion, Intention, Adaptivity, Design, and Acceptance. The disciplinary perspective encapsulates the necessity of inter/multi-disciplinary and intersectoral solutions. Figure 1 illustrates how the six disciplinary areas are required to address research tasks in the five thematic areas. For instance, to successfully address an interface design task in WP5, theory and practice from all disciplinary areas typically must be utilised and combined. SOCRATES organises research in five work packages WP2-6, corresponding to the thematic areas Emotion, Intention, Adaptivity, Design, and Acceptance.

PIs: Prof. Dr. Stefan Wermter

Project Managers: Dr. Sven Magg, Dr. Cornelius Weber

Associates: Alexander Sutherland, Henrique Siqueira

Details: www.socrates-project.eu and http://www.socrates-project.eu/research/emotion-wp2/

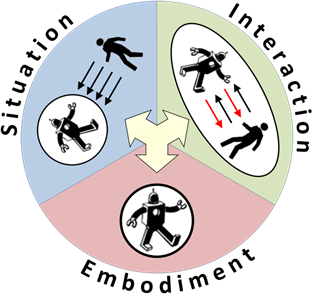

European Training Network: Safety Enables Cooperation in Uncertain Robotic Environments (SECURE)

The majority of existing robots in industry are pre-programmed robots working in safety zones, with visual or auditory warning signals and little concept of the intelligent safety awareness necessary for dynamic and unpredictable human or domestic environments. In the future, novel cognitive robotic companions will be developed, ranging from service robots to humanoid robots, which should be able to learn from users and adapt to open dynamic contexts. The development of such robot companions will lead to new challenges for human-robot cooperation and safety, going well beyond the current state of the art. Therefore, the SECURE project aims to train a new generation of researchers on safe cognitive robot concepts for human work and living spaces on the most advanced humanoid robot platforms available in Europe. The fellows will be trained through an innovative concept of project-based learning and constructivist learning in supervised peer networks, where they will gain experience from an intersectoral programme involving universities, research institutes, large companies and SMEs from the public and private sectors. The training domain will integrate multidisciplinary concepts from the fields of cognitive human-robot interaction, computer science and intelligent robotics, where a new approach of integrating principles of embodiment, situation and interaction will be pursued to address future challenges for safe human-robot environments.

This project is funded by the European Union’s Horizon 2020 research and innovation programme

Coordinator: Prof. Dr. S. Wermter

Project Manager: Dr. S. Magg

Associates: Chandrakant Bothe, Egor Lakomkin, Mohammad Zamani

Details: European Training Network "SECURE"

Cross-modal Learning (CROSS)

CROSS is a project that aims to prepare and initiate research between the life sciences (neuroscience, psychology) and computer science in Hamburg and Beijing, in order to set up an interdisciplinary collaborative research centre that spans artificial intelligence, neuroscience and psychology and focuses on the topic of cross-modal learning. Our long-term challenge is to understand the neural, cognitive and computational evidence of cross-modal learning and to use this understanding for (1) better analyzing human performance and (2) building effective cross-modal computational systems.

Coordinators: Prof. Dr. S. Wermter, Prof. Dr. J. Zhang

Collaborators: Prof. Dr. B. Röder, Prof. Dr. A. K. Engel

Details: CROSS Project

Echo State Networks for Developing Language Robots (EchoRob)

The project "EchoRob" (Echo State Networks for Developing Language Robots) aims at teaching different languages to robots using Recurrent Neural Networks (namely, Echo State Networks, ESN). This general aim has two sub-goals: to enable ordinary (non-programming) users to teach language to robots, and to give insights into how children acquire language.

Leading Investigator: Dr. X. Hinaut, Prof. Dr. S. Wermter

Associates: J. Twiefel, Dr. S. Magg

Details: EchoRob Project

Cooperating Robots (CoRob)

Leading Investigator: Prof. Dr. S. Wermter

Associates: Dr. M. Borghetti, Dr. S. Magg, Dr. C. Weber

Details: CoRob Project

Neural-Network-based Action-Object Semantics for Assistive Robotics

The ongoing development of robotics and the growth in the number of elderly individuals have led to an increasing interest in applying assistive robotics in order to maintain or even improve the quality of care. Understanding human behaviour is the first and most important step for the successful and safe execution of robot tasks such as providing assistance, serving and interacting with humans. This research project investigates self-organizing neural network architectures for the recognition and learning of human daily activities, and focuses on actions that involve interactions with objects.

Gestures and Reference Instructions for Deep robot learning (GRID)

Leading Investigator: Prof. Dr. S. Wermter

Associates: P. Barros, Dr. S. Magg, Dr. C. Weber

Details: Grid Project

Teaching With Interactive Reinforcement Learning (TWIRL)

Reinforcement Learning (RL) has been a very useful approach, but it often learns slowly because it requires large-scale exploration. Interactive Reinforcement Learning (IRL) is a variant of RL that tries to improve the speed of convergence and has rarely been used until now: in IRL, the learner is supported by a human trainer who gives some directions on how to tackle the problem.

Leading Investigator: Prof. Dr. S. Wermter

Associates: F. Cruz, Dr. S. Magg, Dr. C. Weber

Details: TWIRL Project

Cross-modal Interaction in Natural and Artificial Cognitive Systems (CINACS)

Spokesperson CINACS: Prof. Dr. J. Zhang

Leading Investigator: Prof. Dr. S. Wermter, Dr. C. Weber

Associates: J. Bauer, J. D. Chacón, J. Kleesiek

Details: CINACS Project

Cognitive Assistive Systems (CASY)

The programme “Cognitive Assistive Systems (CASY)” will contribute to the focus on the next generation of human-centred systems for human-computer and human-robot collaboration. A central need of these systems is a high level of robustness and increased adaptivity, so that they can act more naturally under uncertain conditions. To address this need, research will focus on cognitively motivated multi-modal integration and human-robot interaction.

Spokesperson CASY: Prof. Dr. S. Wermter

Leading Investigator: Prof. Dr. S. Wermter, Prof. Dr. J. Zhang, Prof. Dr. C. Habel, Prof. Dr.-Ing. W. Menzel

Details: CASY Project

Neuro-inspired Human-Robot Interaction

The aim of the research of the Knowledge Technology Group is to contribute to fundamental research by offering functional models for testing neuro-cognitive hypotheses about aspects of human communication, and by providing efficient bio-inspired methods to produce robust controllers for a communicative robot that successfully engages in human-robot interaction.

Leading Investigator: Prof. Dr. S. Wermter, Dr. C. Weber

Associates: S. Heinrich, D. Jirak, S. Magg

Details: HRI Project

Robotics for Development of Cognition (RobotDoC)

The RobotDoC Collegium is a multi-national doctoral training network for interdisciplinary training in developmental cognitive robotics. The RobotDoC Fellows will develop advanced expertise in domain-specific robotics research skills and in complementary transferable skills for careers in academia and industry. They will acquire hands-on experience through experiments with the open-source humanoid robot iCub, complemented by other existing robots available in the network's laboratories.

Leading Investigator: Prof. Dr. S. Wermter, Dr. C. Weber

Associates: N. Navarro, J. Zhong

Details: RobotDoC Project

Knowledgeable SErvice Robots for Aging (KSERA)

KSERA investigates the integration of assistive home technology and service robotics to support older users in a domestic environment. The KSERA system helps older people, especially those with COPD (a lung disease), with daily activities and care needs and provides the means for effective self-management.

The main aim is to design a pleasant, easy-to-use and proactive socially assistive robot (SAR) that uses context information obtained from sensors in the older person's home to provide useful information and timely support at the right place.

Leading Investigator: Prof. Dr. S. Wermter, Dr. C. Weber

Associates: N. Meins, W. Yan

Details: KSERA Project

What it Means to Communicate (NESTCOM)

What does it mean to communicate? Many projects have explored verbal and visual communication in humans as well as motor actions. They have explored a wide range of topics, including learning by imitation, the neural origins of languages, and the connections between verbal and non-verbal communication. The EU project NESTCOM is setting out to analyse these results to contribute to the understanding of the characteristics of human communication, focusing specifically on their relationship to computational neural networks and the role of mirror neurons in multimodal communications.

Leading Investigator: Prof. Dr. S. Wermter

Associates: Dr. M. Knowles, M. Page

Details: NESTCOM Project

Midbrain Computational and Robotic Auditory Model for focused hearing (MiCRAM)

This research is a collaborative interdisciplinary EPSRC project to be performed between the University of Newcastle, the University of Hamburg and the University of Sunderland. The overall aim is to study sound processing in the mammalian brain and to build a biomimetic robot to validate and test the neuroscience models for focused hearing.

We collaboratively develop a biologically plausible computational model of auditory processing at the level of the inferior colliculus (IC). This approach will potentially clarify the roles of the multiple spectral and temporal representations that are present at the level of the IC and investigate how representations of sounds interact with auditory processing at that level to focus attention and select sound sources for robot models of focused hearing.

Leading Investigator: Prof. Dr. S. Wermter, Dr. H. Erwin

Associates: Dr. J. Liu, Dr. M. Elshaw

Details: MiCRAM Project

Biomimetic Multimodal Learning in a Mirror Neuron-based Robot (MirrorBot)

This project develops and studies emerging embodied representations based on mirror neurons. New techniques, including cell assemblies, associative neural networks and Hebbian-type learning, associate visual, auditory and motor concepts. The basis of the research is an examination of the emergence of representations of actions, perceptions, conceptions, and language in a MirrorBot, a biologically inspired neural robot equipped with polymodal associative memory.

Details: MirrorBot Project