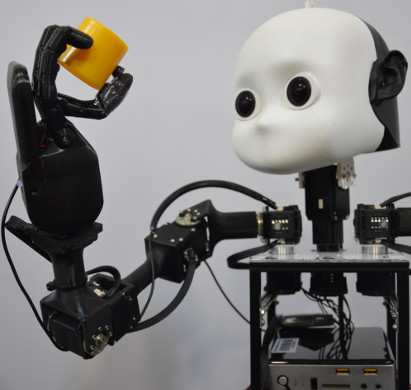

NeuralGrasp: Neural Network-based Robotic Grasping via Curiosity-driven Reinforcement Learning

PIs: Prof. Dr. Stefan Wermter, Dr. Cornelius Weber

Associates: Burhan Hafez

Summary


Reinforcement Learning (RL) has been successfully applied to a broad range of robotic learning problems, including navigation, grasping and object manipulation. Recent work on learning deep neural network representations has further extended the capabilities of RL to complex domains. A common limitation of standard RL approaches, however, is their strong reliance on external rewards that the agent receives by interacting with its environment. Fully autonomous agents often operate in environments where such rewards are absent or very sparse. This raises the challenge of how best to direct the agent's choice of which experience to gather and learn from in the absence of external feedback, especially in grasping tasks that involve high-dimensional visual input and multiple degrees of freedom.
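To make the sparse-reward problem concrete: in a grasping episode the extrinsic reward may be non-zero only on the final, successful step. A common remedy in curiosity-driven RL is to add a scaled intrinsic bonus to every step, r_t = r_ext,t + beta * r_int,t. The sketch below is a generic illustration, not the formulation used in the project's publications; it assumes a binary grasp-success reward, made-up intrinsic values, and a hypothetical weight `beta`. The function names `shaped_rewards` and `discounted_returns` are likewise hypothetical.

```python
from typing import List


def shaped_rewards(grasp_success: List[bool], intrinsic: List[float], beta: float = 0.1) -> List[float]:
    """Per-step reward r_t = r_ext_t + beta * r_int_t (hypothetical weighting)."""
    # Sparse extrinsic reward: 1.0 only on a step where the grasp succeeds.
    return [(1.0 if ok else 0.0) + beta * r_int for ok, r_int in zip(grasp_success, intrinsic)]


def discounted_returns(rewards: List[float], gamma: float = 0.9) -> List[float]:
    """G_t = r_t + gamma * G_{t+1}, computed backwards from the last step."""
    returns, g = [], 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        returns.append(g)
    return returns[::-1]


# A five-step episode in which only the last step yields a successful grasp.
success = [False, False, False, False, True]
curiosity = [0.8, 0.5, 0.3, 0.2, 0.1]  # made-up intrinsic rewards for illustration

extrinsic_only = shaped_rewards(success, curiosity, beta=0.0)  # [0.0, 0.0, 0.0, 0.0, 1.0]
with_bonus = shaped_rewards(success, curiosity, beta=0.1)      # approx. [0.08, 0.05, 0.03, 0.02, 1.01]
returns = discounted_returns(extrinsic_only, gamma=0.9)
```

With `beta=0` the agent receives no immediate feedback until it first succeeds at grasping, which may rarely happen through random exploration; the bonus supplies a per-step signal. The weight `beta` trades off exploration against the task reward and would need tuning in practice.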

We propose to address this problem in RL by investigating exploration strategies inspired by infant cognition and sensorimotor development. When infants start interacting with the world, they engage in activities for their own sake rather than to achieve an extrinsic goal, mapping sensorimotor patterns to actions and rapidly shifting from motor babbling to organized exploratory behavior. According to studies in psychology and neuroscience, this behavior is not random but is believed to be driven by an intrinsic motivation to reduce uncertainty about the outcomes of actions. Intrinsic motivation such as curiosity plays an important role in child development, and we believe it can have a similar impact on how robots learn grasping skills.
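A common way to turn "reducing uncertainty about action outcomes" into a learning signal is to reward the agent by the prediction error of a learned forward model: transitions the model cannot yet predict are rewarding, and the bonus fades as they become familiar. The following is a minimal, generic sketch of that idea, not the specific mechanism from the project's publications; the class `ForwardModel`, the function `curiosity_reward`, the linear model and the learning rate are all hypothetical choices made for brevity.

```python
class ForwardModel:
    """Hypothetical linear forward model predicting s_{t+1} from (s_t, a_t)."""

    def __init__(self, state_dim, action_dim, lr=0.05):
        self.lr = lr
        # One weight row per predicted state component, initialised to zero.
        self.w = [[0.0] * (state_dim + action_dim) for _ in range(state_dim)]

    def predict(self, state, action):
        x = list(state) + list(action)
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in self.w]

    def update(self, state, action, next_state):
        """One SGD step on 0.5 * squared error; returns the squared error measured before the step."""
        x = list(state) + list(action)
        err = [p - t for p, t in zip(self.predict(state, action), next_state)]
        for row, e in zip(self.w, err):
            for j, xj in enumerate(x):
                row[j] -= self.lr * e * xj
        return sum(e * e for e in err)


def curiosity_reward(model, state, action, next_state):
    """Intrinsic reward: how badly the model predicted this transition (then learn from it)."""
    return model.update(state, action, next_state)


# Repeating the same transition: the model learns it, so the curiosity reward shrinks.
model = ForwardModel(state_dim=2, action_dim=1)
s, a, s_next = [1.0, 0.0], [0.5], [1.0, 0.5]
rewards = [curiosity_reward(model, s, a, s_next) for _ in range(50)]
# rewards[0] == 1.25 (the untrained model predicts zeros); later values decay towards 0.

# A transition with an unfamiliar action is "surprising" again and earns a larger reward.
novel = curiosity_reward(model, s, [-0.5], [1.0, -0.5])
```

A linear model keeps the example short; for grasping from high-dimensional visual input, the forward model would more plausibly be a neural network operating on a learned state representation, which is an assumption here rather than a detail given on this page.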

Project objectives

- Investigate neural network-based mechanisms for generating artificial curiosity signals.

- Evaluate exploration strategies for collecting useful experiences.

- Develop a curiosity-driven RL approach to the grasping problem in continuous state and action spaces.

Related Publications

- M.B. Hafez, C. Weber, M. Kerzel and S. Wermter, “Efficient Intrinsically Motivated Robotic Grasping with Learning-Adaptive Imagination in Latent Space,” in 9th Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EpiRob), pp. 240–246, Oslo, Norway, 2019.

- M.B. Hafez, C. Weber, M. Kerzel and S. Wermter, “Curious Meta-Controller: Adaptive Alternation between Model-Based and Model-Free Control in Deep Reinforcement Learning,” in IEEE International Joint Conference on Neural Networks (IJCNN), pp. 1–9, Budapest, Hungary, 2019.

- M.B. Hafez, C. Weber, M. Kerzel and S. Wermter, “Deep Intrinsically Motivated Continuous Actor-Critic for Efficient Robotic Visuomotor Skill Learning,” Paladyn, Journal of Behavioral Robotics, vol. 10, no. 1, pp. 14–29, 2019.

- M.B. Hafez, M. Kerzel, C. Weber and S. Wermter, “Slowness-based neural visuomotor control with an Intrinsically motivated Continuous Actor-Critic,” in 26th European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN), pp. 509–514, Bruges, Belgium, 2018.

- M.B. Hafez, C. Weber and S. Wermter, “Curiosity-Driven Exploration Enhances Motor Skills of Continuous Actor-Critic Learner,” in 7th Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EpiRob), pp. 39–46, Lisbon, Portugal, 2017.