New Paper Published at ACM-THRI

11 February 2025

Our group has a new paper published in ACM Transactions on Human-Robot Interaction (THRI). Here's more information about the paper:

Title: Influence of Robots’ Voice Naturalness on Trust and Compliance

Authors: Dennis Becker, Lukas Braach, Lennart Clasmeier, Teresa Kaufmann, Oskar Ong, Kyra Ahrens, Connor Gaede, Erik Strahl, Di Fu, Stefan Wermter

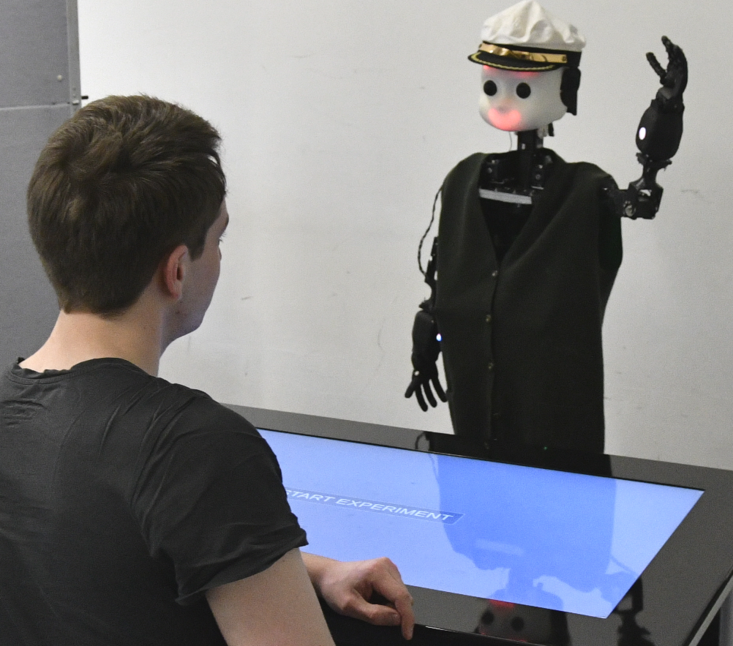

Abstract: With the increasing performance of text-to-speech systems and their generated voices indistinguishable from natural human speech, the use of these systems for robots raises ethical and safety concerns. A robot with a natural voice could increase trust, which might result in over-reliance despite evidence for robot unreliability. To estimate the influence of a robot's voice on trust and compliance, we design a study that consists of two experiments. In a pre-study (N1=60), the most suitable natural and mechanical voices are estimated and selected for the main study. Afterward, in the main study (N2=68), the influence of a robot's voice on trust and compliance is evaluated in a cooperative game of Battleship with a robot as an assistant. During the experiment, the acceptance of the robot's advice and response time are measured, which indicate trust and compliance, respectively. The results show that participants expect robots to sound human-like and that a robot with a natural voice is perceived as safer. Additionally, a natural voice can affect compliance. Despite repeated incorrect advice, the participants are more likely to rely on the robot with the natural voice. The results do not show a direct effect on trust. Natural voices provide increased intelligibility, and while they can increase compliance with the robot, the results indicate that natural voices might not lead to over-reliance. The results highlight the importance of incorporating voices into the design of social robots to improve communication, avoid adverse effects, and increase acceptance and adoption in society.

You can reach the full paper here.