TERAIS Project Meeting in Bratislava

24 June 2025

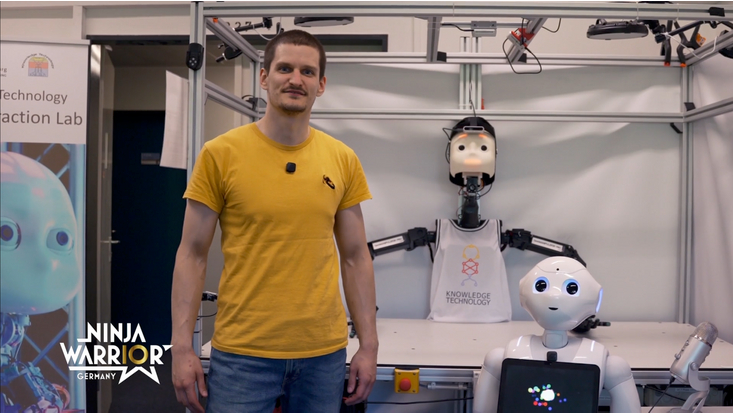

On June 16, 2025, members of the Knowledge Technology group participated in the TERAIS event “Supporting Excellence in AI and Robotics Research”, hosted by Comenius University in Bratislava. The full-day event brought together leading experts in AI, robotics, and technology transfer. The program featured invited lectures on developmental robotics and trustworthy large language models, strategic discussions on TERAIS research, spotlight presentations, and panel sessions on research support, organizational development, and tech innovation. The day concluded with informal networking.

Cornelius Weber gave a presentation on “Cognitive Robotic Reasoning”, while Hassan Ali presented his work in a talk titled “Grounding Language for Action: LLM Integration in a Robotic Bartender”. Hassan Ali’s talk was based on the latest TERAIS research paper, recently accepted at IROS 2025. Here is more information about it:

Title: Shaken, Not Stirred: A Novel Dataset for Visual Understanding of Glasses in Human-Robot Bartending Tasks

Authors: Lukáš Gajdošech, Hassan Ali, Jan-Gerrit Habekost, Martin Madaras, Matthias Kerzel, Stefan Wermter

Abstract: Datasets for object detection often do not account for enough variety of glasses, due to their transparent and reflective properties. Specifically, open-vocabulary object detectors, widely used in embodied robotic agents, fail to distinguish subclasses of glasses. This scientific gap poses an issue to robotic applications that suffer from accumulating errors between detection, planning, and action execution. The paper introduces a novel method for the acquisition of real-world data from RGB-D sensors that minimizes human effort. We propose an auto-labeling pipeline that generates labels for all the acquired frames based on the depth measurements. We provide a novel real-world glass object dataset that was collected on the Neuro-Inspired COLlaborator (NICOL), a humanoid robot platform. The dataset consists of 7,850 images recorded from five different cameras. We show that our trained baseline model outperforms state-of-the-art open-vocabulary approaches. In addition, we deploy our baseline model in an embodied agent approach to the NICOL platform, on which it achieves a success rate of 81% in a human-robot bartending scenario.

You can read the full paper here.