Intelligent Virtual Agents

HCI Master Project WS 25/26: Intelligent Virtual Agents

Goals and Motivation

In this project, the goal was to work with intelligent virtual agents (IVAs): AI-controlled entities that mimic human behavior and communication style. We wanted to find out how to increase the usability, social presence, or user experience of IVAs in virtual reality. To this end, four project groups of 3-4 participants each worked on different topics, all related to IVAs. Over the course of the semester, the students received input on how to conduct user studies in HCI, how to identify suitable literature to strengthen their research, and how to implement a functional prototype. Finally, the students conducted a user study with their prototype, collected quantitative and qualitative data, and documented their work in the form of a research paper. A short version of their research, as well as images and videos, is presented on this website. The four groups researched the following topics:

- Deictic Interactions with an IVA: Evaluating Eye Gaze, Finger Pointing and Verbal Description in VR

- Virtual Agents vs. Static Picture Instructions in VR-Based Therapeutic Exercises: A Comparative Study

- Non-verbal Communication of Virtual Assistants

- Interrupting an AI: Effects of Verbal Interruption on Naturalness of Conversation and Agent Perception

Supervision

Deictic Interactions with an IVA

Evaluating Eye Gaze, Finger Pointing and Verbal Description in VR

Goal

Intelligent virtual agents (IVAs) integrated into smart glasses and mixed reality headsets offer innovative and intuitive ways to interact with virtual environments. This study investigates how users most effectively reference objects in virtual spaces—using gaze, pointing, or speech. A total of 39 participants completed tasks in virtual reality to evaluate the performance and usability of these interaction methods.

Procedure

The study was conducted in a controlled virtual reality (VR) environment developed using the Unity engine. Participants wore a Meta Quest Pro head-mounted display (HMD) equipped with eye-tracking capabilities and were instructed to reference objects using one of three methods: gaze, pointing, or speech. To familiarize themselves with each method, participants first practiced in a "playground" scene. They then completed a specific task in a virtual room, such as identifying the oldest image among a set of images. To ensure reliable results and mitigate learning effects, the order of tasks and interaction methods was randomized. After completing each of the three conditions, participants removed the HMD and filled out a questionnaire. Task performance was measured based on completion time and the number of prompts required. Additionally, usability feedback was collected using tools such as the User Experience Questionnaire (UEQ) and NASA Task Load Index (NASA-TLX).

Results

The study encountered several unforeseen challenges, including eye tracker malfunctions, inaccurate speech-to-text transcriptions, and ChatGPT loading issues. Despite these obstacles, significant differences and trends emerged among the interaction methods, highlighting opportunities for further research. Notably, most participants reported frequent use of ChatGPT, underscoring the relevance of this study within the context of growing conversational AI. Interaction using gaze proved to be the fastest method and produced the fewest unclear prompts, while verbal communication was the least precise. Although gaze-based interaction had the lowest accuracy rate (66.7%), this difference was not statistically significant. Usability scores indicated that all methods performed well overall; however, efficiency was lower for both verbal communication and pointing, while gaze scored highest in dependability and clarity. Workload levels were generally moderate, but verbal communication required the most effort. Pointing reduced mental workload but imposed a higher physical workload. Participants expressed distinct preferences: gaze-based interaction was considered the most enjoyable, pointing was seen as the most natural, and many participants favored multimodal interaction to combine the strengths of different methods.

Outlook

While gaze-based interaction shows promise, technical issues may have influenced the results and should be addressed in future research. Enhancing feedback mechanisms could improve usability across all input methods, reducing mental strain and increasing control. Multimodal interaction is a key avenue for future work, as user preferences vary and a flexible system could improve accessibility. This study highlights the strengths and limitations of each method, paving the way for more natural and effective IVA interactions in VR.

Project Team

- Lina Kaschub

- Ugur Turhan

- Bado Völckers

- Philipp Huesmann

Virtual Agents vs. Static Picture Instructions in VR-Based Therapeutic Exercises

A Comparative Study

This video shows the agent and pictorial instructions, as well as the audio cues during execution.

Motivation

Virtual Reality (VR) combined with Virtual Agents (VAs) offers new possibilities for home-based rehabilitation, particularly for patients with vestibular disorders such as Benign Paroxysmal Positional Vertigo (BPPV). Traditional instructional methods, such as static picture-text guides, may lack engagement and clarity, making correct execution of therapeutic exercises challenging. This study explores whether VA-guided instructions in VR can enhance exercise execution accuracy, improve User Experience, and increase engagement compared to conventional picture instructions.

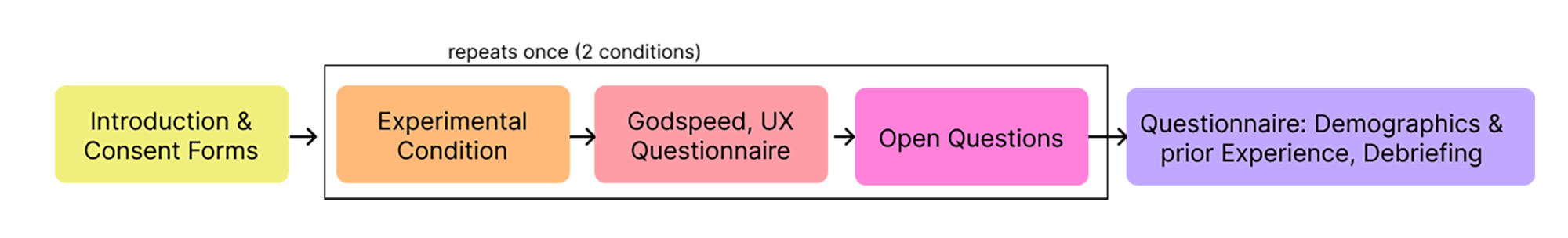

Procedure

Participants performed two standard BPPV rehabilitation exercises — the Sémont manoeuvre and the Brandt-Daroff exercise — using the Meta Quest 3 VR headset. A within-subject design was implemented, where each participant experienced both conditions: one guided by a VA providing step-by-step demonstrations and one using static picture instructions. Motion tracking data was collected to assess movement accuracy, while validated questionnaires measured User Experience (UEQ), mental workload (NASA-TLX), Simulator Sickness (SSQ), and Social Presence. Additionally, qualitative feedback was gathered to provide deeper insights into participants’ experiences.

Results

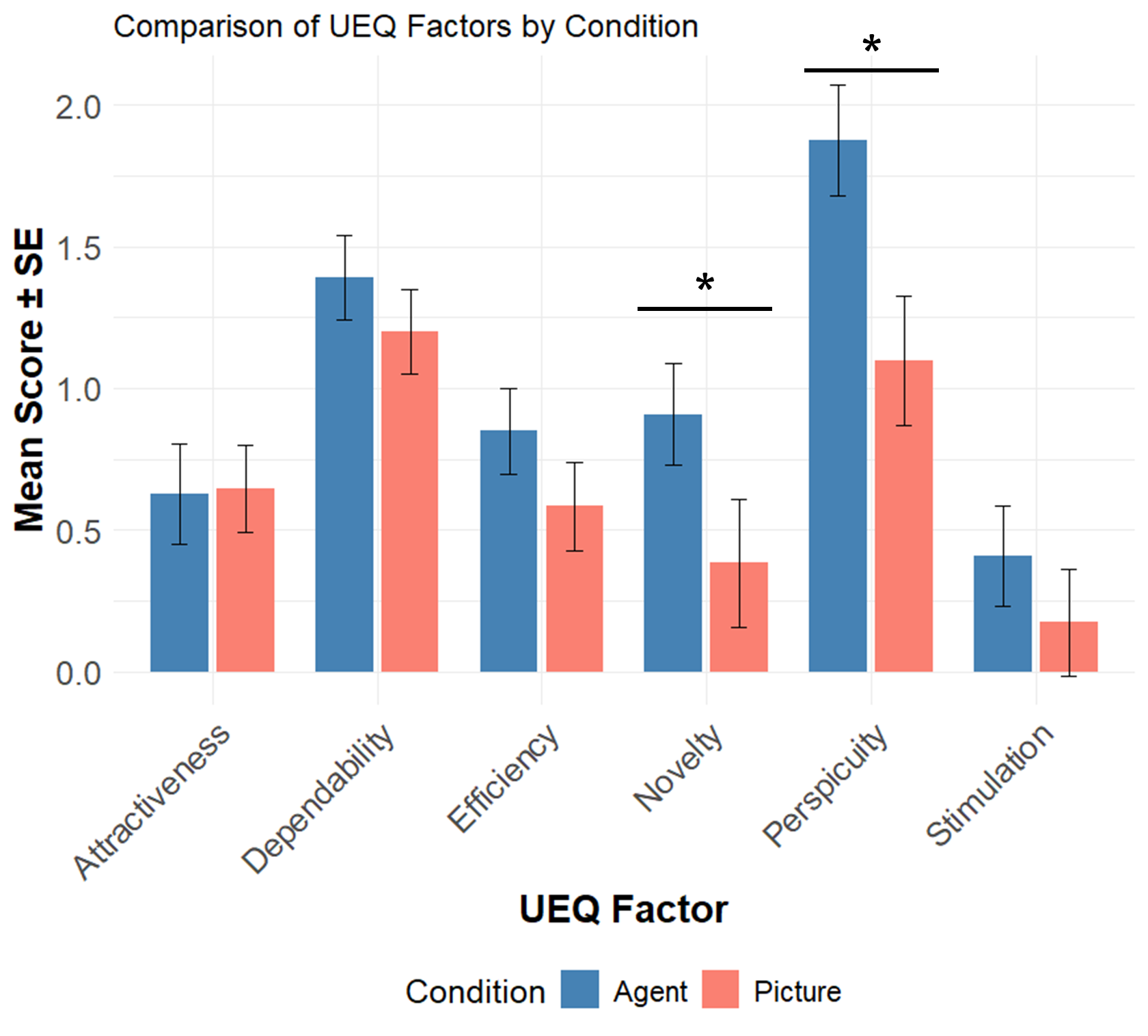

While no significant difference in movement accuracy was found between the VA and picture conditions, participants clearly preferred the VA-guided instructions. The VA condition was rated higher in terms of comprehensibility, engagement, and immersion, with the perspicuity and novelty dimensions of the UEQ scoring significantly better. Additionally, participants experienced significantly fewer symptoms of cybersickness, as measured by the SSQ, in the VA condition compared to the picture condition. These results suggest that VA-based instruction in VR can improve the overall User Experience and engagement in vestibular rehabilitation, potentially leading to better adherence in home-based therapy.

Project Team

- Helen Kuswik

- Anastasia Poletaykina

- Annika Rittmann

Non-verbal Communication of Virtual Assistants

Motivation

The motivation for this study was to gain a deeper understanding of how important the non-verbal communication (NVC) of an intelligent virtual agent (IVA) is for its human conversational partners. Perceived realism, trust, empathy, and psychological well-being are particularly important here.

Perceived realism refers to the degree to which users feel that the IVA behaves like a human; trust refers to the user's confidence in the IVA's reliability and capabilities; empathy refers to the ability of users to relate emotionally to the IVA; and psychological well-being refers to the overall mental health impact of interacting with the IVA. Understanding these elements can lead to more effective development of IVAs and thus improve conversations in VR.

Goal

The aim of the study was to investigate whether the perception of both the conversation as a whole and the IVA differs according to the available emotional non-verbal expressions of the IVA and how this affects the user's well-being.

The assumption was that a finer gradation of the basic emotions would improve the perceived realism of the IVA.

Procedure

For the study, we designed a virtual space resembling a therapeutic conversation setting. The test subject sits on a couch in a comfortable, but not overly crowded room. The IVA sits opposite them on an armchair, with a view out the window behind them.

The participants conduct two conversations in English with an identical IVA, each lasting three minutes. The conversations differ only in the agent's condition: in one, the agent is in the simple condition, and in the other, it is in the advanced condition. The order of the conversations is randomized with a balanced distribution.
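A randomized but balanced order of the two conditions could be produced along the following lines. This is a minimal Python sketch, not the procedure the team actually used: the function name `balanced_orders`, the `seed` parameter, and the condition labels are assumptions made for illustration.

```python
import random

# Condition labels taken from the text; everything else is hypothetical.
CONDITIONS = ("simple", "advanced")


def balanced_orders(n_participants, seed=None):
    """Return one condition order per participant.

    Both possible orders are used equally often (or differ by one
    participant if n_participants is odd).
    """
    rng = random.Random(seed)
    possible = [CONDITIONS, CONDITIONS[::-1]]
    # Alternate between the two orders so that each is assigned equally often.
    assigned = [possible[i % 2] for i in range(n_participants)]
    # Shuffle so the order cannot be predicted from the sign-up sequence.
    rng.shuffle(assigned)
    return assigned


orders = balanced_orders(8, seed=1)
```

Shuffling a pre-balanced list, rather than flipping a coin per participant, guarantees that neither order is over-represented in a small sample.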

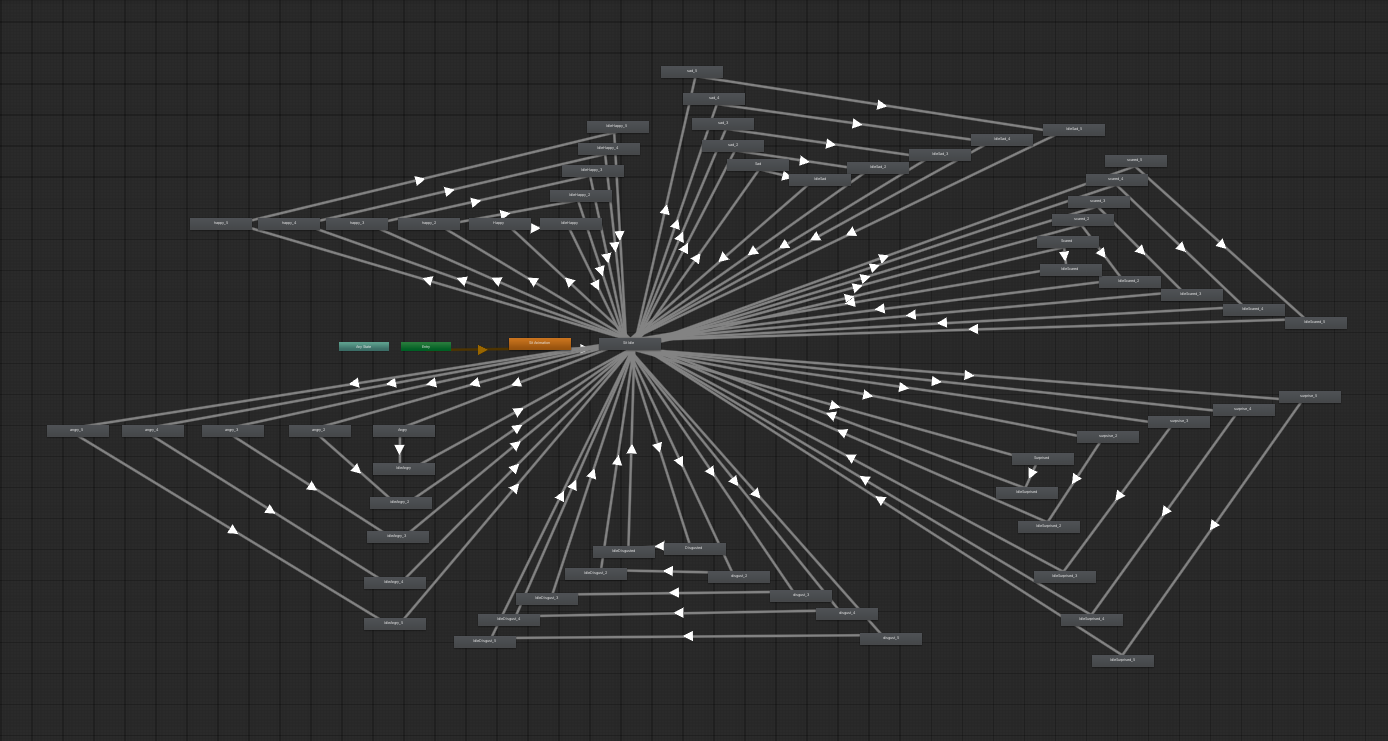

The simple agent is designed to express only six basic emotions (happiness, surprise, disgust, anger, fear, and sadness), each at maximum intensity. The advanced agent has the same emotions, but each in five intensity levels. The level at which it reacts depends on the intensity of the emotion as perceived by the agent. In addition, the agent has a matching idle animation for each emotion. The flow of the conversation is steered only by guiding the agent towards an emotional narrative; no concrete questions are given. The agent tries to steer the conversation towards all emotions.
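The difference between the two agent conditions can be illustrated with a short Python sketch. It assumes that the perceived intensity is available as a number between 0 and 1 and that the five levels are equally wide; neither detail is stated in the project description, and the function names are hypothetical.

```python
import math

BASIC_EMOTIONS = ("happiness", "surprise", "disgust", "anger", "fear", "sadness")
NUM_LEVELS = 5  # the advanced agent uses five levels per emotion


def intensity_to_level(intensity, num_levels=NUM_LEVELS):
    """Map an intensity in [0, 1] to a discrete level from 1 to num_levels.

    Assumption: equally wide bins; values outside [0, 1] are clamped.
    """
    clamped = min(max(intensity, 0.0), 1.0)
    # ceil() puts (0, 0.2] into level 1, (0.2, 0.4] into level 2, and so on;
    # max() keeps an intensity of exactly 0 at level 1.
    return max(1, math.ceil(clamped * num_levels))


def select_expression(emotion, intensity, advanced):
    """Return (emotion, level) for the agent's non-verbal reaction."""
    if emotion not in BASIC_EMOTIONS:
        raise ValueError(f"unknown emotion: {emotion}")
    # The simple agent always reacts at maximum intensity.
    level = intensity_to_level(intensity) if advanced else NUM_LEVELS
    return emotion, level
```

For the same input, such as a moderately intense fear, the advanced agent would pick a middle level while the simple agent would always use the top one.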

Results and Outlook

Overall, no significant differences were found on any of the metrics examined.

Looking ahead, several aspects of the application would need to be improved to achieve significant differences. In particular, the agent would need to conduct a guided conversation more realistically.

Project Team

- Ayko Schwedler

- Leon Korkmaz

- Rateb Karanzie

- Celestina Hermida da Costa

Interrupting an AI

Effects of Verbal Interruption on Naturalness of Conversation and Agent Perception

Motivation

AI agents such as ChatGPT often produce a lot of text as output. While web interfaces let users interrupt the agent via a button, this was not previously possible for the verbal interface. Without the ability to interrupt the agent, conversations feel more ritualised and structured and lack the dynamism normally expected from natural conversations. It is therefore essential to give users intuitive ways to interrupt the verbal output of AI agents, such as using breakwords like "stop" or simply talking over the agent.

The aim of this project is to create this functionality and test its influence on the user experience and the user's perception of the virtual agent. In the study, we examine whether the user experience improves if the user can verbally interrupt the agent's flow of speech and redirect the conversation (H1), and whether the ability to interrupt the AI makes the conversation seem more natural (H2).

Implementation

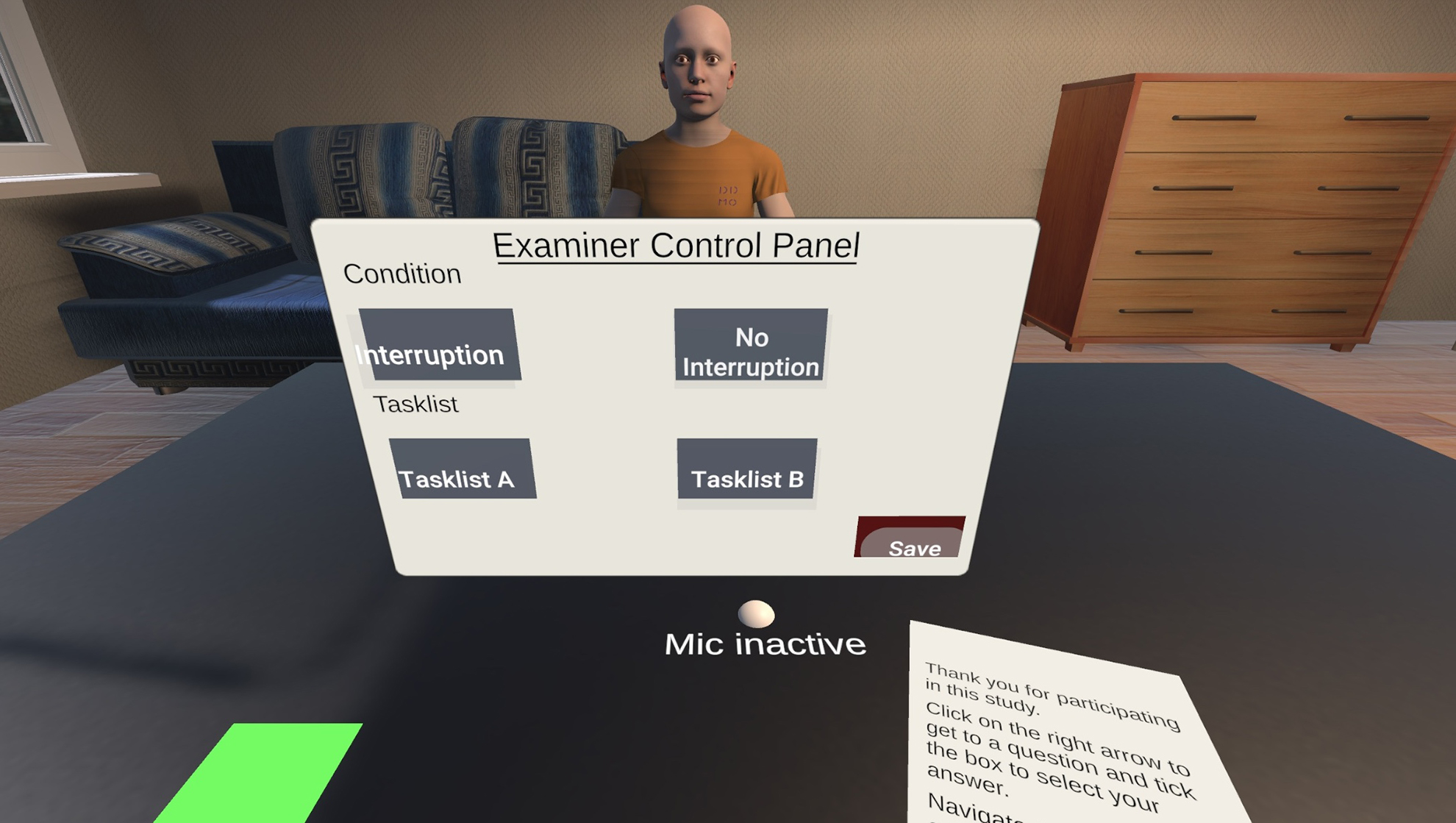

Starting from the Intelligent Virtual Agent SDK, which already allows basic human-agent communication, we developed a system that allows the user to interrupt the agent's output at any time. For this, we implemented a microphone that is always on whenever the agent is speaking. The user input is then converted from speech to text using Windows Speech Recognition. Unlike the Google Speech-to-Text API, this allows us to set timeout parameters to extend the length of user input being processed.
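The interruption logic described above can be sketched independently of the speech recognizer. The following Python example is an illustration only, not the project's implementation (which builds on the Intelligent Virtual Agent SDK): the class `InterruptibleAgent`, its methods, and the breakword list are hypothetical, and the transcript is assumed to arrive as plain text from whatever speech-to-text service is in use.

```python
from dataclasses import dataclass, field

# Hypothetical breakword list; the text only names "stop" as an example.
BREAKWORDS = {"stop"}


@dataclass
class InterruptibleAgent:
    speaking: bool = False
    pending_requests: list = field(default_factory=list)

    def start_speaking(self):
        """Mark the agent as producing verbal output (microphone stays on)."""
        self.speaking = True

    def on_transcript(self, text):
        """Handle recognized user speech.

        Returns True if the agent was speaking and has been interrupted.
        """
        words = [w.strip(".,!?") for w in text.lower().split()]
        words = [w for w in words if w]
        if not words:
            return False  # silence or noise: nothing to do
        interrupted = self.speaking
        self.speaking = False  # any user speech stops the current output
        # A leading breakword only stops the agent; the rest is a new request.
        if words[0] in BREAKWORDS:
            words = words[1:]
        if words:
            self.pending_requests.append(" ".join(words))
        return interrupted
```

In this sketch, both a bare breakword and talking over the agent stop the output, but only the latter queues a new request for the agent to answer.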

Study

The study used a within-subjects design with two conditions: one in which it was possible to interrupt the agent and one in which it was not. The order of the conditions was randomized. The participant's task was to answer four single-choice questions as fast as possible by asking the agent. To limit the learning effect, the task list was switched after the first condition. Between the conditions, participants filled out two questionnaires, the Godspeed questionnaire and the User Experience Questionnaire. There were also some open questions about the interaction with the agent. In total, 30 students participated.

Results

Our findings suggest that interruptions play a crucial role in verbal interactions with agents in VR. They significantly improve the user's overall impression of the system, as well as perceived efficiency and stimulation. The user's impression of the virtual agent does not seem to change significantly. While verbal interruptions are beneficial and should be included in such VR systems, there are technical hurdles to overcome, such as back-channeling and interruption detection. These findings highlight potential areas for improvement in conversational agents to optimise efficiency and user experience. For example, interactive agents in customer service or education can be equipped with interrupt capabilities, particularly in scenarios where users need to retrieve information efficiently. On a broader level, this feature allows for a more fluid conversation and improved user experience.

Project Team

- David Egelhofer

- Nils Heinsohn

- Jiafan Gao

- Sherwin Khabari