Theses

Here you can find the topics our students are currently working on. If you are interested in one of these topics, please reach out to the listed contact person. If you have your own idea for a thesis topic in the field of signal processing, please contact us and we will see how we can develop your idea together.

Proposal 1: Audio-Visual Signal Processing

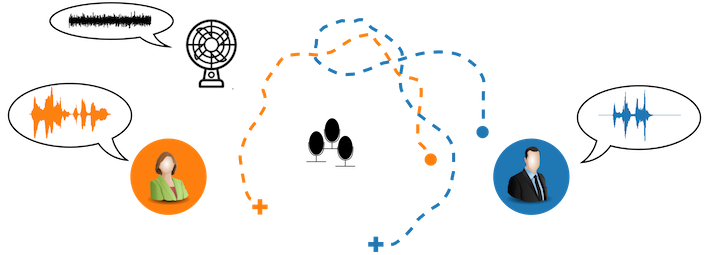

Speech processing algorithms are an integral part of many technical devices that are ubiquitous in people's lives, such as smartphones (e.g. for speech recognition and telephony) or hearing aids.

Most of these algorithms rely on audio data alone, i.e. microphone recordings. In very noisy situations, however, e.g. in a crowded restaurant or on a busy street, the desired speech signal at the microphone can be severely corrupted by undesired noise, and the performance of speech processing algorithms may drop significantly. In such acoustically challenging situations, humans are known to also utilize visual cues, most prominently lip reading, to still comprehend what has been said. So far, this additional source of information has been neglected by the vast majority of mainstream approaches.

In this thesis, we will explore ways to improve speech processing by also utilizing visual information, especially when the audio signal is severely distorted. The major question in this context is how the information obtained from the two modalities can be combined. While there are many sophisticated lip reading algorithms as well as audio-only speech processing algorithms, only a few methods use information from one modality to benefit the processing of the other. In this thesis, you will first implement and thoroughly evaluate one or more reference approaches, identifying their strengths and weaknesses. Based on this analysis, we will strive for novel ways to improve the performance of these approaches.

Basic knowledge of signal processing as well as programming skills, preferably in Python or Matlab, are a definite plus.

Contact: Danilo de Oliveira, Prof. Timo Gerkmann

Proposal 2: Phase-Aware Speech Enhancement

Understanding speech in noise becomes considerably harder as the signal-to-noise ratio decreases. This effect is even more severe for hearing-impaired people, hearing aid users, and cochlear implant users. Noise reduction algorithms aim at reducing the noise to facilitate speech communication. This is particularly difficult when the noise is highly non-stationary.
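
The signal-to-noise ratio (SNR) is commonly used to set the difficulty of test conditions by scaling a noise recording before adding it to clean speech. A minimal sketch, assuming NumPy, a global (not segmental) SNR, and random signals standing in for real speech and noise; `mix_at_snr` is a hypothetical helper name:

```python
import numpy as np


def mix_at_snr(speech, noise, snr_db):
    """Return (noisy, scaled_noise) with 10*log10(P_speech / P_noise) == snr_db.

    Hypothetical helper for illustration: powers are mean squared amplitudes
    over the whole signal, i.e. a global (not segmental) SNR.
    """
    p_speech = np.mean(speech**2)
    p_noise = np.mean(noise**2)
    # Choose the gain g such that g^2 * p_noise == p_speech / 10^(snr_db / 10)
    gain = np.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
    scaled_noise = gain * noise
    return speech + scaled_noise, scaled_noise


def snr_db(speech, noise):
    """Global SNR in dB."""
    return 10 * np.log10(np.mean(speech**2) / np.mean(noise**2))


rng = np.random.default_rng(0)
speech = rng.standard_normal(16000)  # stand-in for one second of speech at 16 kHz
noise = rng.standard_normal(16000)   # stand-in for a noise recording
for target in (10, 0, -5):
    noisy, scaled_noise = mix_at_snr(speech, noise, target)
    print(f"target {target:>3} dB, measured {snr_db(speech, scaled_noise):.2f} dB")
```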

Many speech enhancement algorithms are based on a representation of the noisy speech signal in the short-time Fourier transform (STFT) domain. In this domain, speech is represented by the STFT magnitude and the STFT phase. In the last two decades, research on speech enhancement algorithms mainly focused on improving the STFT magnitude, while the STFT phase was left unchanged. More recently, however, researchers have pointed out that phase processing may further improve speech enhancement algorithms.
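
The following Python sketch, assuming NumPy and SciPy's `scipy.signal.stft`/`istft` and using synthetic tones plus white noise in place of real speech, illustrates why the phase matters: an oracle that knows the clean STFT magnitude exactly but keeps the noisy phase (the best any magnitude-only method could do) does not fully recover the clean signal, whereas clean magnitude plus clean phase does. Window length and overlap are assumed values:

```python
import numpy as np
from scipy.signal import stft, istft

FS = 16000      # sampling rate in Hz (assumed)
NPERSEG = 512   # 32 ms Hann window (assumed)
NOVERLAP = 256  # 50 % overlap (assumed)


def to_stft(x):
    _, _, X = stft(x, fs=FS, window="hann", nperseg=NPERSEG, noverlap=NOVERLAP)
    return X


def from_stft(X, length):
    _, x = istft(X, fs=FS, window="hann", nperseg=NPERSEG, noverlap=NOVERLAP)
    return x[:length]  # istft output includes padding; trim to input length


def snr_db(ref, est):
    return 10 * np.log10(np.sum(ref**2) / np.sum((ref - est) ** 2))


rng = np.random.default_rng(0)
t = np.arange(FS) / FS
clean = np.sin(2 * np.pi * 440 * t) + 0.5 * np.sin(2 * np.pi * 1200 * t)
noisy = clean + 0.5 * rng.standard_normal(FS)

S, Y = to_stft(clean), to_stft(noisy)

# Oracle magnitude-only enhancement: clean magnitude combined with noisy phase
mag_only = from_stft(np.abs(S) * np.exp(1j * np.angle(Y)), FS)
# Clean magnitude and clean phase together reconstruct the clean signal
full = from_stft(np.abs(S) * np.exp(1j * np.angle(S)), FS)

print(f"noisy input:                   {snr_db(clean, noisy):.1f} dB")
print(f"clean magnitude + noisy phase: {snr_db(clean, mag_only):.1f} dB")
print(f"clean magnitude + clean phase: {snr_db(clean, full):.1f} dB")
```

The remaining error in the second case is caused solely by the noisy phase, which is the gap that phase-aware enhancement tries to close.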

This thesis aims at implementing, analyzing and developing algorithms for phase processing. The overall goal is to obtain intelligible, high quality speech signals even from heavily distorted recordings in real-time. Of special interest in this context are the robust estimation of the clean speech phase from the noise-corrupted recording, the interplay between the traditional enhancement of spectral amplitudes and the recent developments in spectral phase enhancement, as well as the potential of modern machine learning techniques like deep learning.

First, existing approaches will be implemented and analyzed with respect to their strengths and weaknesses. Starting from this, new concepts to push the limits of the current state of the art will be developed and realized. Finally, the derived algorithm(s) will be evaluated and compared to existing approaches by means of instrumental measures and listening experiments. Depending on the outcome of this work, we encourage and strive for a publication of the results at a scientific conference or in a journal.

Experience and basic knowledge of signal processing are definitely helpful but not mandatory.

Contact: Tal Peer, Prof. Timo Gerkmann (UHH)

Proposal 3: Sound Source Localization and Tracking

Humans are remarkably skilled at localizing sound sources in complex acoustic environments. This ability is largely due to spatial cues, such as time and level differences, that our brain can interpret. In technical systems, microphone arrays enable similar spatial processing, allowing algorithms to estimate the positions of speakers and track them over time. Accurate and low-latency speaker tracking is a key component in many downstream applications, such as steering spatially selective filters (SSFs) that enhance a specific target speaker in multi-speaker scenarios.

Traditional localization methods rely on statistical models and techniques such as time difference of arrival (TDOA) estimation, steered response power (SRP), or subspace-based methods like MUSIC. While these techniques can be effective, they are often based on oversimplified statistical assumptions and struggle under real-world conditions. Data-driven approaches, on the other hand, can learn complex mappings between spatial features and speaker positions, thereby improving robustness in challenging noisy and reverberant environments. However, since they are usually very resource-intensive, their practical applicability is limited. Hybrid methods address this issue by incorporating lightweight neural networks (NNs) into traditional estimation frameworks to refine the underlying statistical models while keeping the computational overhead small.
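
As an illustration of classical TDOA estimation, here is a minimal sketch of the generalized cross-correlation with phase transform (GCC-PHAT) for a single microphone pair, followed by the far-field conversion of the delay into a direction of arrival. The microphone spacing, the speed of sound, and the simulated integer delay are assumptions; SRP and MUSIC are not shown:

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed room temperature


def gcc_phat_tdoa(x1, x2, fs, max_tau=None):
    """Estimate the delay of x2 relative to x1 (in seconds) via GCC-PHAT."""
    n = len(x1) + len(x2)  # zero-pad to avoid circular wrap-around
    cross = np.fft.rfft(x2, n=n) * np.conj(np.fft.rfft(x1, n=n))
    cross /= np.abs(cross) + 1e-12  # PHAT weighting: keep only the phase
    cc = np.fft.irfft(cross, n=n)
    max_shift = n // 2
    if max_tau is not None:
        max_shift = min(int(fs * max_tau), max_shift)
    # Reorder so that index 0 corresponds to lag -max_shift
    cc = np.concatenate((cc[n - max_shift:], cc[: max_shift + 1]))
    lag = int(np.argmax(np.abs(cc))) - max_shift
    return lag / fs


def tdoa_to_doa_deg(tau, mic_distance):
    """Far-field direction of arrival (degrees from broadside) for two mics."""
    ratio = np.clip(SPEED_OF_SOUND * tau / mic_distance, -1.0, 1.0)
    return float(np.degrees(np.arcsin(ratio)))


# Hypothetical setup: 16 kHz sampling, mics 10 cm apart, 3-sample true delay
fs, d = 16000, 0.1
rng = np.random.default_rng(1)
source = rng.standard_normal(fs // 4)
true_lag = 3
mic1 = source + 0.1 * rng.standard_normal(source.size)
mic2 = np.concatenate((np.zeros(true_lag), source[:-true_lag]))
mic2 = mic2 + 0.1 * rng.standard_normal(source.size)

tau = gcc_phat_tdoa(mic1, mic2, fs, max_tau=d / SPEED_OF_SOUND)
print(f"estimated lag: {round(tau * fs)} samples")
print(f"direction of arrival: {tdoa_to_doa_deg(tau, d):.1f} deg")
```

Restricting the search to lags with |tau| <= d/c (the `max_tau` argument) discards physically impossible delays, which also makes the estimate more robust.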

In this thesis, we will investigate low-complexity speaker localization and tracking algorithms that can reliably provide target direction estimates in real-time. These estimates are intended to guide deep SSFs in order to continuously enhance a target speaker in dynamic multi-speaker scenarios. Instead of completely replacing classical spatial filtering with deep learning, the focus will be on developing hybrid approaches as a lightweight front-end. The aim is to explore the trade-off between localization accuracy, robustness, and algorithmic complexity in realistic acoustic settings.

Basic knowledge of statistical signal processing, machine learning, and programming experience in Python is required for this thesis. Familiarity with machine learning frameworks such as PyTorch is an advantage but not strictly necessary.

Contact: Jakob Kienegger, Alina Mannanova, Prof. Timo Gerkmann

Proposal 4: Multichannel Speech Enhancement

Humans have impressive capabilities to filter out background noise when focusing on a specific target speaker. This is largely because humans have two ears, which allow for spatial processing of the received sound. Many classical multichannel speech enhancement algorithms also exploit spatial information. So-called beamformers (e.g. the delay-and-sum beamformer or the minimum variance distortionless response (MVDR) beamformer) enhance a speech signal by emphasizing the signal from the desired direction and attenuating signals from other directions.
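
For a uniform linear array in the far field, the delay-and-sum principle can be sketched in the frequency domain: the weights phase-align the signals arriving from the look direction and average them, giving unit gain there and attenuation elsewhere. The array geometry, the analysis frequency, and the helper names below are hypothetical:

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s (assumed)


def steering_vector(freq_hz, angle_deg, mic_positions):
    """Far-field steering vector of a linear array (mic positions in metres).

    angle_deg is measured from broadside; microphone m receives the plane
    wave with a relative delay of x_m * sin(angle) / c.
    """
    delays = mic_positions * np.sin(np.radians(angle_deg)) / SPEED_OF_SOUND
    return np.exp(-2j * np.pi * freq_hz * delays)


def delay_and_sum_weights(freq_hz, look_deg, mic_positions):
    """Delay-and-sum: phase-align the look direction, then average."""
    return steering_vector(freq_hz, look_deg, mic_positions) / len(mic_positions)


def response_db(weights, freq_hz, angle_deg, mic_positions):
    """Beamformer response |w^H d(angle)| in dB (np.vdot conjugates w)."""
    d = steering_vector(freq_hz, angle_deg, mic_positions)
    return 20 * np.log10(np.abs(np.vdot(weights, d)) + 1e-12)


# Hypothetical setup: 4 microphones spaced 5 cm apart, analysed at 2 kHz
mics = np.arange(4) * 0.05
freq = 2000.0
w = delay_and_sum_weights(freq, look_deg=0.0, mic_positions=mics)
for angle in (0, 30, 60, 90):
    print(f"{angle:>2} deg: {response_db(w, freq, angle, mics):6.1f} dB")
```

Steering to another direction only changes `look_deg`. The MVDR beamformer would instead use w = R^{-1} d / (d^H R^{-1} d), where R is the noise covariance matrix, so its weights also adapt to the noise field.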

In the field of single-channel speech enhancement, the use of machine learning techniques, and in particular deep neural networks (DNNs), has led to significant improvements. These networks can be trained to learn arbitrarily complex nonlinear functions. It therefore seems possible to improve multichannel speech enhancement performance by using DNNs to learn spatial filters that are not restricted to a linear processing model, as beamformers are.

In this thesis, we will investigate the potential of DNNs for multichannel speech enhancement. As a first step, existing DNN-based multichannel speech enhancement algorithms will be implemented and evaluated. Of particular importance is the question of under which circumstances the DNN-based approaches outperform existing methods. The gained insights and experience can then be used to improve the existing DNN-based algorithms.

Basic knowledge of signal processing, machine learning, and programming experience in any language (preferably Python) is required for this thesis. Experience with a machine learning toolbox (e.g. PyTorch or TensorFlow) is helpful but not mandatory.

Contact: Alina Mannanova, Jakob Kienegger, Prof. Timo Gerkmann

Proposal 5: Speech Dereverberation for Hearing Aids

Overview

Reverberation is an acoustic phenomenon that occurs when a sound signal is reflected by walls and obstacles in a specular or diffuse way. The reverberant sound can be modeled as the output of a convolutive filter whose impulse response depends on the geometry of the scene and on the materials of the different reflectors. Although reverberation is a linear process, it is difficult to recover the original anechoic sound from its reverberant version in a blind scenario, that is, without knowing the so-called room impulse response (RIR). For listeners without hearing impairment, reverberation is perceived as natural in most scenarios. Strong reverberation, however, dramatically degrades the performance of speech processing for hearing-impaired listeners, e.g. in speaker identification and separation or speech enhancement.
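
The convolutive model can be made concrete with a toy example. The sketch below generates a synthetic RIR as a direct-path impulse followed by exponentially decaying noise (a common statistical simplification, assumed here, not a measured room), convolves a random stand-in for anechoic speech with it, and shows that when the RIR is known and there is no noise, the reverberation can be undone by division in the DFT domain. The helper name `synthetic_rir` and all parameters are hypothetical:

```python
import numpy as np


def synthetic_rir(rt60_s, fs, length_s=None, seed=0):
    """Toy room impulse response: unit direct path plus a decaying noise tail.

    The amplitude envelope exp(-3 ln(10) t / RT60) makes the tail energy drop
    by 60 dB after rt60_s seconds. This statistical model is an assumption for
    illustration; measured RIRs depend on room geometry and wall materials.
    """
    n = int(round((length_s if length_s is not None else rt60_s) * fs))
    t = np.arange(n) / fs
    rng = np.random.default_rng(seed)
    rir = 0.3 * rng.standard_normal(n) * np.exp(-3 * np.log(10) * t / rt60_s)
    rir[0] = 1.0  # direct path
    return rir


fs = 16000
rir = synthetic_rir(rt60_s=0.6, fs=fs)
dry = np.random.default_rng(1).standard_normal(fs)  # stand-in for anechoic speech

# Reverberation as linear convolution: output length is len(dry) + len(rir) - 1
wet = np.convolve(dry, rir)

# Non-blind, noise-free inversion: with an FFT length equal to the full
# convolution length there is no circular wrap-around, so Y = H * X exactly.
n_fft = wet.size
recovered = np.fft.irfft(np.fft.rfft(wet, n_fft) / np.fft.rfft(rir, n_fft), n_fft)
recovered = recovered[: dry.size]
print(f"max reconstruction error: {np.max(np.abs(recovered - dry)):.2e}")
```

In the blind setting the RIR is unknown, and with additive noise the division also amplifies noise wherever the RIR spectrum is small, which is why this naive inversion is not a practical dereverberation method.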

Objectives

The objective of this PhD thesis is to design AI-based dereverberation algorithms for the hearing industry. In particular, these algorithms should be real-time capable and robust to conditions encountered in real-life situations, such as heavy reverberation, non-stationary noise, multiple sources, and other interferences. They should also be lightweight enough to run on embedded devices such as cochlear implants or hearing aids.

Skills

Required skills

- Basic knowledge of signal processing

- Mathematical background in probability theory and machine learning

- Programming experience (any language, preferably Python or Matlab)

Useful skills

- Theoretical background in room acoustics

- Mastery of a machine learning framework, e.g. PyTorch or TensorFlow

Contact

Proposal 6: Audio-Visual Emotion Recognition (Social Signal Processing)

Overview

Emerging technologies such as virtual assistants (e.g. Siri and Alexa) and collaboration tools (e.g. Zoom and Discord) have become part of many people's daily lives. Traditionally, these technologies have mainly focused on understanding user commands and supporting the intended tasks. More recently, however, researchers in the fields of psychology and machine learning have been pushing towards the development of socially intelligent systems capable of understanding the emotional states of users as expressed by non-verbal cues. This interdisciplinary research field combining psychology and machine learning is termed Social Signal Processing [1].

Emotions are complex states of feeling, positive or negative, that result in physical and psychological changes that influence our behavior. Individuals communicate such emotional states through both verbal and non-verbal behavior. Emotions are expressed across several modalities: audio prosody, the semantic content of speech, facial reactions, and body language. Empirical findings in the literature reveal that different dimensions of emotion are best expressed by certain modalities. For example, the arousal dimension (the activation-deactivation dimension of emotion) is better recognized from the audio modality than from the video modality, whereas the valence dimension (the pleasure-displeasure dimension of emotion) is better recognized from the video modality.

A review of emotion recognition techniques can be found in [2]. Recent work from our lab on speech emotion recognition can be found in [3].

Objectives

Recent literature reveals progress towards multimodal approaches for emotion recognition, which jointly and effectively model different dimensions of emotion. However, several challenges remain in multimodal approaches, such as:

- choosing appropriate modality fusion strategies,

- automatically aligning the different modalities,

- designing suitable machine learning architectures for each modality, and

- finding disentanglement or joint-training strategies for the individual emotion dimensions.
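
The first of these challenges, choosing a fusion strategy, is often framed as early (feature-level) versus late (decision-level) fusion. The NumPy sketch below shows both; the feature sizes, the random linear "models", and the per-dimension audio weights are all hypothetical placeholders rather than trained systems. The late-fusion weights merely encode the observation above that arousal is better recognized from audio and valence from video:

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical per-utterance feature vectors (sizes are illustrative only)
audio_feat = rng.standard_normal(88)
video_feat = rng.standard_normal(128)

# Placeholder linear "models" with random weights; in practice these would be
# trained networks. Each maps its input to (arousal, valence) in [-1, 1].
W_early = 0.05 * rng.standard_normal((2, 88 + 128))
W_audio = 0.05 * rng.standard_normal((2, 88))
W_video = 0.05 * rng.standard_normal((2, 128))


def early_fusion(a, v):
    """Feature-level fusion: concatenate modalities, then predict once."""
    return np.tanh(W_early @ np.concatenate([a, v]))


def late_fusion(a, v, audio_weight=(0.7, 0.3)):
    """Decision-level fusion with per-dimension modality weights.

    audio_weight[k] is the (assumed) trust in the audio prediction for
    dimension k: higher for arousal (k=0), lower for valence (k=1).
    """
    w = np.asarray(audio_weight)
    return w * np.tanh(W_audio @ a) + (1 - w) * np.tanh(W_video @ v)


arousal_valence_early = early_fusion(audio_feat, video_feat)
arousal_valence_late = late_fusion(audio_feat, video_feat)
print("early fusion (arousal, valence):", arousal_valence_early)
print("late fusion  (arousal, valence):", arousal_valence_late)
```

Early fusion lets a single model exploit cross-modal correlations but requires aligned features, whereas late fusion keeps the modalities separate and makes the per-dimension weighting explicit.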

In this project, we will explore multimodal approaches for emotion recognition, by aptly fusing different modalities and jointly modeling different dimensions of emotion.

First, existing approaches will be implemented and analyzed with respect to their strengths and weaknesses. Building on this, novel concepts to push the limits of the current state of the art will be developed and realized. Finally, the developed algorithm(s) will be evaluated and compared to existing approaches by means of quantitative and qualitative analysis of the results. Depending on the outcome of this work, we encourage and strive for a publication of the results at a scientific conference or in a journal.

Skills

- Programming experience (any language, preferably Python or Matlab)

- Mastery of a machine learning framework, e.g. PyTorch or TensorFlow

- Experience and basic knowledge of signal processing are definitely helpful but not mandatory

References

- [1] A. Vinciarelli, M. Pantic, and H. Bourlard, "Social signal processing: Survey of an emerging domain," Image and Vision Computing, vol. 27, no. 12, pp. 1743–1759, 2009.

- [2] B. W. Schuller, "Speech emotion recognition: Two decades in a nutshell, benchmarks, and ongoing trends," Communications of the ACM, vol. 61, no. 5, pp. 90–99, Apr. 2018.

- [3] N. Raj Prabhu, G. Carbajal, N. Lehmann-Willenbrock, and T. Gerkmann, "End-to-end label uncertainty modeling for speech emotion recognition using Bayesian neural networks," arXiv preprint arXiv:2110.03299, 2021. https://arxiv.org/abs/2110.03299

Contact

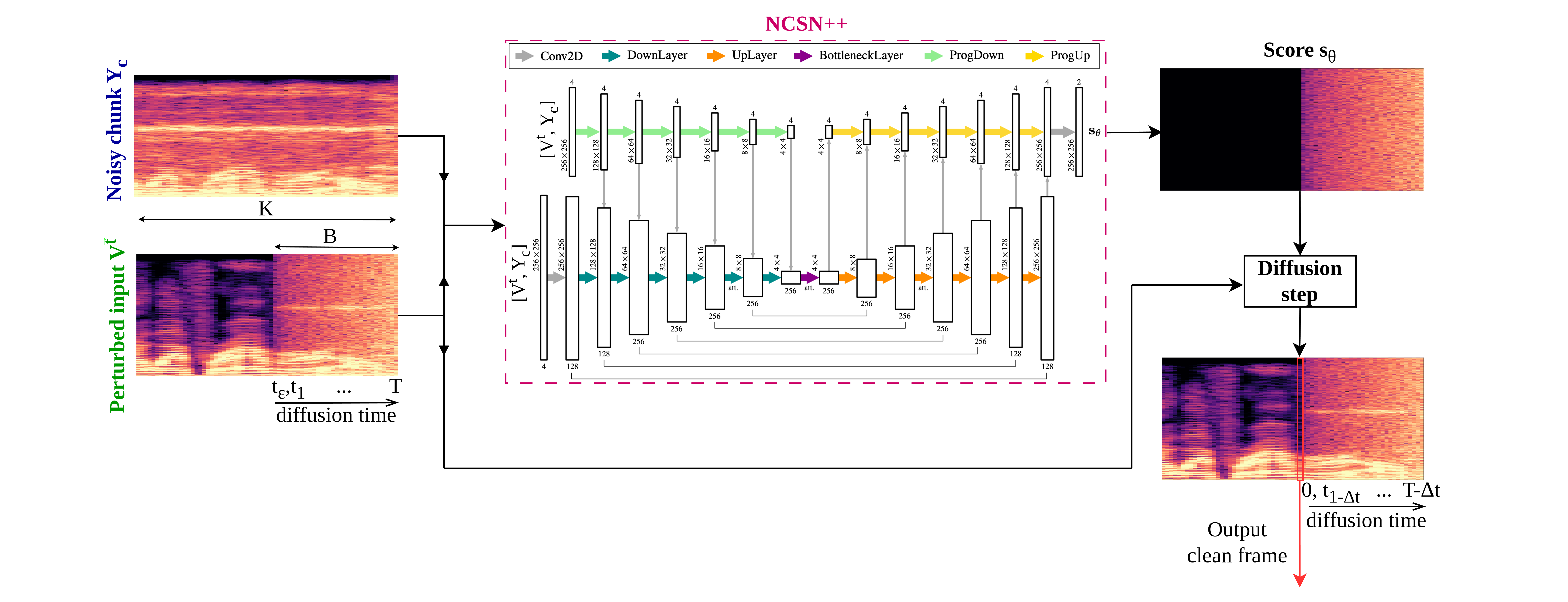

Proposal 7: Real-Time Diffusion-based Speech Enhancement

Diffusion models represent a leading class of generative methods and have recently advanced the state of the art in speech enhancement. The Signal Processing Group has contributed several publicly recognised systems, including SGMSE+, STORM, and BUDDY.

Despite their excellent perceptual quality, current diffusion‑based approaches are unsuitable for online operation because they require many neural network evaluations per time frame.
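
The real-time constraint can be illustrated with simple arithmetic: for online operation, all network evaluations for a frame must finish within one STFT hop. The sketch below uses hypothetical timings (not measurements of SGMSE+, STORM, or BUDDY), and the helper names are illustrative; it considers only throughput, not algorithmic latency or lookahead:

```python
def max_evaluations_per_frame(hop_ms, eval_ms, overhead_ms=0.0):
    """Largest number of network evaluations that fit into one hop."""
    budget_ms = hop_ms - overhead_ms
    return max(0, int(budget_ms // eval_ms))


def real_time_factor(num_evaluations, eval_ms, hop_ms):
    """Processing time per frame divided by the hop (< 1 means real time)."""
    return num_evaluations * eval_ms / hop_ms


# Hypothetical numbers, not measurements: 16 ms hop, 3 ms per network call
hop_ms, eval_ms = 16.0, 3.0
print(max_evaluations_per_frame(hop_ms, eval_ms))  # 5
print(real_time_factor(30, eval_ms, hop_ms))       # 5.625 for a 30-step sampler
```

Under these assumed timings, a sampler needing 30 evaluations per frame would run more than five times slower than real time, so either the number of evaluations per frame or the cost of each evaluation has to shrink.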

The objective of this project is to develop and evaluate techniques that enable real‑time diffusion-based speech enhancement. Potential avenues include Diffusion Buffer, knowledge distillation, and latent‑space diffusion.

The proposed algorithms will be implemented and demonstrated in our laboratory on a laptop GPU. We further aim to optimize them for laptop CPUs or smartphone-class hardware.

Familiarity with Python and PyTorch is expected; basic knowledge of digital signal processing, Bayesian statistics, and deep learning is necessary, but passion and curiosity are most important.

Contact: Rostislav Makarov, Bunlong Lay, Prof. Timo Gerkmann