Journal Article Available Online on Continual Robot Learning

21 June 2024

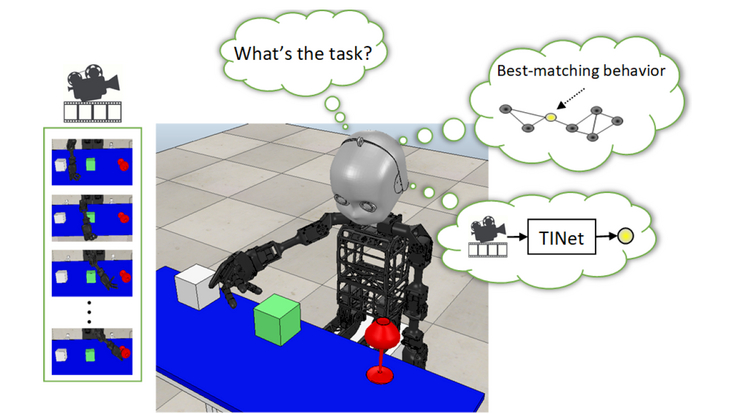

Our group has published a new paper in IEEE Transactions on Cognitive and Developmental Systems. The paper introduces a novel approach to continual, multi-task robot learning that enables a humanoid robot to efficiently infer and execute tasks from unlabeled and incomplete demonstrations. In one-shot task generalization experiments, the approach also generalizes to unseen tasks from a single demonstration. Here is more information about the paper:

Title: Continual Robot Learning using Self-Supervised Task Inference

Authors: Muhammad Burhan Hafez, Stefan Wermter

Abstract: Endowing robots with the human ability to learn a growing set of skills over the course of a lifetime as opposed to mastering single tasks is an open problem in robot learning. While multitask learning approaches have been proposed to address this problem, they pay little attention to task inference. In order to continually learn new tasks, the robot first needs to infer the task at hand without requiring predefined task representations. In this article, we propose a self-supervised task inference approach. Our approach learns action and intention embeddings from self-organization of the observed movement and effect parts of unlabeled demonstrations and a higher level behavior embedding from self-organization of the joint action–intention embeddings. We construct a behavior-matching self-supervised learning objective to train a novel task inference network (TINet) to map an unlabeled demonstration to its nearest behavior embedding, which we use as the task representation. A multitask policy is built on top of the TINet and trained with reinforcement learning to optimize performance over tasks. We evaluate our approach in the fixed-set and continual multitask learning settings with a humanoid robot and compare it to different multitask learning baselines. The results show that our approach outperforms the other baselines, with the difference being more pronounced in the challenging continual learning setting, and can infer tasks from incomplete demonstrations. Our approach is also shown to generalize to unseen tasks based on a single demonstration in one-shot task generalization experiments.
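To make the "nearest behavior embedding" idea from the abstract concrete, here is a minimal sketch of the lookup step only. It is not the TINet from the paper: the paper learns its embeddings through self-organization and trains a network with a behavior-matching objective, whereas this sketch uses hand-picked prototype vectors. The function names, the example vectors, the use of Euclidean distance, and the choice to form the joint embedding by concatenating the action and intention embeddings are all assumptions made for illustration.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_behavior(joint_embedding, behavior_prototypes):
    """Return (index, prototype) of the prototype closest to joint_embedding.

    Hypothetical helper: stands in for mapping a demonstration to its
    nearest behavior embedding, which then serves as the task representation.
    """
    best_index = min(
        range(len(behavior_prototypes)),
        key=lambda i: euclidean(joint_embedding, behavior_prototypes[i]),
    )
    return best_index, behavior_prototypes[best_index]


# Illustrative values only (not taken from the paper).
action_embedding = [0.9, 0.1]      # assumed embedding of the movement part
intention_embedding = [0.2, 0.8]   # assumed embedding of the effect part

# Assumption: the joint action-intention embedding is a simple concatenation.
joint = action_embedding + intention_embedding

# Hand-picked behavior prototypes; in the paper these are learned.
prototypes = [
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 1.0, 0.0],
]

index, task_representation = nearest_behavior(joint, prototypes)
print(index, task_representation)
```

In a setup like this, the selected prototype would be passed to the multitask policy as its task input, so the policy never needs a predefined task label.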

You can access the full paper here.